Abstract

Conventional manual ultrasound (US) imaging is a physically demanding procedure for sonographers. A robotic US system (RUSS) has the potential to overcome this limitation by automating and standardizing the imaging procedure. By enabling remote diagnosis, a RUSS also extends US accessibility to resource-limited environments that lack trained operators. During imaging, keeping the US probe normal to the skin surface substantially improves US image quality. However, RUSS still lacks an autonomous, real-time, low-cost method for aligning the probe orthogonally to the skin surface without preoperative information. We propose a novel end-effector design that achieves self-normal-positioning of the US probe. The end-effector embeds four laser distance sensors to estimate the rotation required to reach the normal direction. We then integrate the proposed end-effector with a RUSS, which allows the probe to be automatically and dynamically kept normal to the skin surface during US imaging. We evaluated the normal positioning accuracy and the US image quality using a flat surface phantom, an upper torso mannequin, and a lung ultrasound phantom. Results show that the normal positioning accuracy is 4.17 ± 2.24 degrees on the flat surface and 14.67 ± 8.46 degrees on the mannequin. The quality of the US images that the RUSS collected from the lung ultrasound phantom was comparable to that of manually collected images.

Index Terms: Robotics and Automation in Life Sciences, Medical Robots and Systems, Sensor-based Control

I. Introduction

Ultrasound (US) imaging has been widely adopted for abnormality monitoring, obstetrics [1], and guiding interventional [2] and radiotherapy [3] procedures. US is acknowledged for being cost-effective, real-time, and safe [4]. Nonetheless, the US examination is a physically demanding procedure. Sonographers need to press the US probe firmly onto the patient’s body and fine-tune the probe’s image view in non-ergonomic postures [5]. More importantly, the examination outcomes are heavily operator-dependent. The information contained in the US images can be easily affected by i) the scan location on the body; ii) the probe orientation at the scan location; and iii) the contact force at the scan location [6]. Obtaining consistent examination outcomes therefore requires highly skilled and experienced personnel. Unfortunately, such personnel are becoming a scarce medical resource around the globe [7]. Further, the close interaction between the sonographer and the patient increases the risk of infection if either party carries a contagious disease such as COVID-19 [8].

Autonomous robotic US systems (RUSS) have been explored to tackle the issues of conventional US. A RUSS uses a robot arm to manipulate the US probe, thereby relieving sonographers of the physical burden. The diagnosis can be done remotely, eliminating the need for direct contact with patients [9]. The desired probe pose (position and orientation) and the applied force can be parameterized and executed by the robot arm with high motion precision. As a result, examination accuracy and repeatability can be improved [10], [11]. Last but not least, the probe pose can be precisely localized, which enables 3D reconstruction of human anatomy from 2D US images [31].

Most autonomous RUSS works [12]–[15] adopt a two-step strategy. First, a scan trajectory formed by a series of probe poses is defined using preoperative data, such as Magnetic Resonance Imaging (MRI) of the patient or a vision-based point cloud of the patient’s body. Second, the robot travels along the trajectory while the probe pose and applied force are continuously updated according to intraoperative inputs (e.g., force/torque sensing and real-time US images). Owing to i) involuntary patient movements during scanning; ii) inevitable errors in registering the scan trajectory to the patient; and iii) highly deformable skin surfaces that are difficult to measure preoperatively [11], the second step is critical to acquiring diagnostically meaningful US images. The ability to update the probe position and orientation in real time is desirable because it enhances the efficiency and safety of the scanning process. In particular, keeping the probe at an appropriate orientation is critical to ensuring good acoustic coupling between the transducer and the body. A properly oriented probe offers clearer visualization of pathological clues in the US images [16]. However, real-time probe orientation adjustment is challenging to realize and remains an open problem.

A. Related Works on Probe Normal Positioning

Maintaining the normal direction with respect to the skin surface is predominantly used as the default optimal probe orientation in RUSS imaging [17]–[20]. Orienting the probe orthogonally to the patient’s body ensures that more of the reflected acoustic waves are received by the transducer. It also serves as a good starting point for searching for anatomical landmarks [16]. Preoperative normal angle estimation along a scan trajectory overlaid on a 3D point cloud of the patient’s body (obtained from an RGB-D camera) has been implemented in [18]–[22]. The normal vector at each point in the point cloud can be estimated by fitting a plane to its nearest neighbors. Nevertheless, real-time normal-based orientation adjustment is difficult to achieve with this approach for two reasons. First, the scanned surface may be occluded by the probe, the robot, or other obstacles in the environment, making it difficult to continuously detect the body. Second, detection algorithms are needed to extract the skin surface from the camera scene, which can be computationally intensive.
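The nearest-neighbor plane fit mentioned above is commonly implemented with principal component analysis. The normal is the eigenvector of the neighborhood covariance matrix that has the smallest eigenvalue. The NumPy sketch below illustrates that generic technique under our own assumptions: brute-force neighbor search, a hypothetical neighborhood size `k`, and normals flipped toward a viewpoint such as the camera origin. It is not the implementation used in [18]–[22].

```python
import numpy as np


def estimate_normals(points: np.ndarray, k: int = 8,
                     viewpoint: np.ndarray = np.zeros(3)) -> np.ndarray:
    """Estimate a unit surface normal at every point of an (N, 3) point cloud.

    Each normal comes from a least-squares plane fit (PCA) to the point's
    k nearest neighbors. It is then flipped to face `viewpoint`
    (e.g., the RGB-D camera origin when points are in the camera frame).
    Parameter choices here are illustrative assumptions.
    """
    points = np.asarray(points, dtype=float)
    # Brute-force squared distances; a KD-tree would scale better for large clouds.
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.einsum("ijk,ijk->ij", diff, diff)

    normals = np.empty_like(points)
    for i in range(points.shape[0]):
        nbrs = points[np.argsort(dist2[i])[:k]]  # k nearest, including the point itself
        centered = nbrs - nbrs.mean(axis=0)
        # eigh returns eigenvalues in ascending order, so column 0 is the
        # direction of least variance, i.e., the fitted plane's normal.
        _, eigvecs = np.linalg.eigh(centered.T @ centered)
        normal = eigvecs[:, 0]
        if np.dot(normal, viewpoint - points[i]) < 0:
            normal = -normal  # orient the normal toward the viewpoint
        normals[i] = normal
    return normals


# Usage: a tilted plane z = 1 + 0.5x seen from a camera at the origin.
xs, ys = np.meshgrid(np.linspace(-0.1, 0.1, 5), np.linspace(-0.1, 0.1, 5))
cloud = np.column_stack([xs.ravel(), ys.ravel(), 1.0 + 0.5 * xs.ravel()])
print(estimate_normals(cloud)[12])  # approx. (0.447, 0, -0.894) at the center point
```

A real-time RUSS would have to rerun this on every frame after segmenting the skin from the scene. That requirement is part of the computational and occlusion burden described above.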

To achieve normal positioning in real time, Tsumura et al. proposed a gantry-like robot with a passive 2-degree-of-freedom (DoF) end-effector that guarantees normal positioning of the US probe when the probe is pressed onto the body [24], [25]. The end-effector was demonstrated to be useful in both obstetric US and lung US (LUS) examinations. Because the rotational motion is realized mechanically, angle adjustments incur no delay. However, the passive mechanism requires a wide tissue contact area and limits the flexibility of the platform. The US probe is “locked” to the normal direction by default. In contrast, the ability to actively tilt the probe to directions other than the normal is indispensable in some clinical practices, such as liver US examinations [26]. Jiang et al. presented a RUSS that can dynamically rotate the probe to the normal direction for imaging forearm vasculature [20]. Their normal positioning task is decomposed into two parts. In-plane normal positioning is achieved by centering the region with maximum confidence in the US confidence map [27]. Out-of-plane normal positioning is achieved by executing a fan-shaped motion and minimizing the force orthogonal to the probe’s long axis. Although this method allows active and continuous adjustment, the normal positioning process is separated into two sequential steps, and the out-of-plane adjustment involves extra probe motion, which could compromise the scanning speed. In summary, real-time normal positioning of the probe remains largely unexplored and is a roadblock toward fully autonomous RUSS. A new real-time, active control method is needed.

B. Contributions

Despite these attempts, a sensing mechanism that perceives the surface angle, and thereby provides real-time feedback to guide the robot motion, is still missing. In this work, we aim to move one step closer to autonomous RUSS. We address the essential problem of aligning the US probe with the normal direction of the patient’s body in real time. We propose a novel robot end-effector in which a US probe is encapsulated and surrounded by distance sensors that continuously detect the body surface near the probe. The rotation adjustments required to reach perpendicularity are computed from the distances sensed by paired sensors. We mount the end-effector on a RUSS and demonstrate real-time tracking of the surface normal direction. We also implement shared autonomy. The probe is automatically landed on the designated location, and its orientation and contact force are autonomously adjusted during imaging. Meanwhile, the operator can slide the probe on the patient’s body and rotate it about its long axis via teleoperation.

Our contributions are twofold. i) We propose a compact and cost-effective active-sensing end-effector (A-SEE) design that provides real-time information on the rotation adjustment required to achieve normal positioning. To the best of our knowledge, this is the first work to achieve simultaneous in-plane and out-of-plane probe orientation control on the fly without relying on a passive contact mechanism. ii) We integrate A-SEE with a RUSS (A-SEE-RUSS) and implement a complete US imaging workflow to demonstrate the A-SEE-enabled probe self-normal-positioning capability.

II. Materials and Methods

This section presents the implementation details of A-SEE and its integration with a RUSS. The proposed A-SEE-RUSS (shown in Fig. 1) performs preoperative identification of the probe landing pose and intraoperative probe self-normal-positioning with contact force adaptation. During imaging, a shared control scheme lets the operator teleoperatively slide the probe along the patient’s body surface and rotate the probe about its long axis. These features are realized by accomplishing four sub-tasks:

Task 1) A-SEE fabrication, sensor calibration, and integration with the RUSS;

Task 2) self-normal-positioning via the 2-DoF rotational motion of the probe in A-SEE-RUSS;

Task 3) US probe contact force control via the 1-DoF translational motion of the probe;

Task 4) joystick-based teleoperation enabling control of the remaining 3-DoF probe motion.

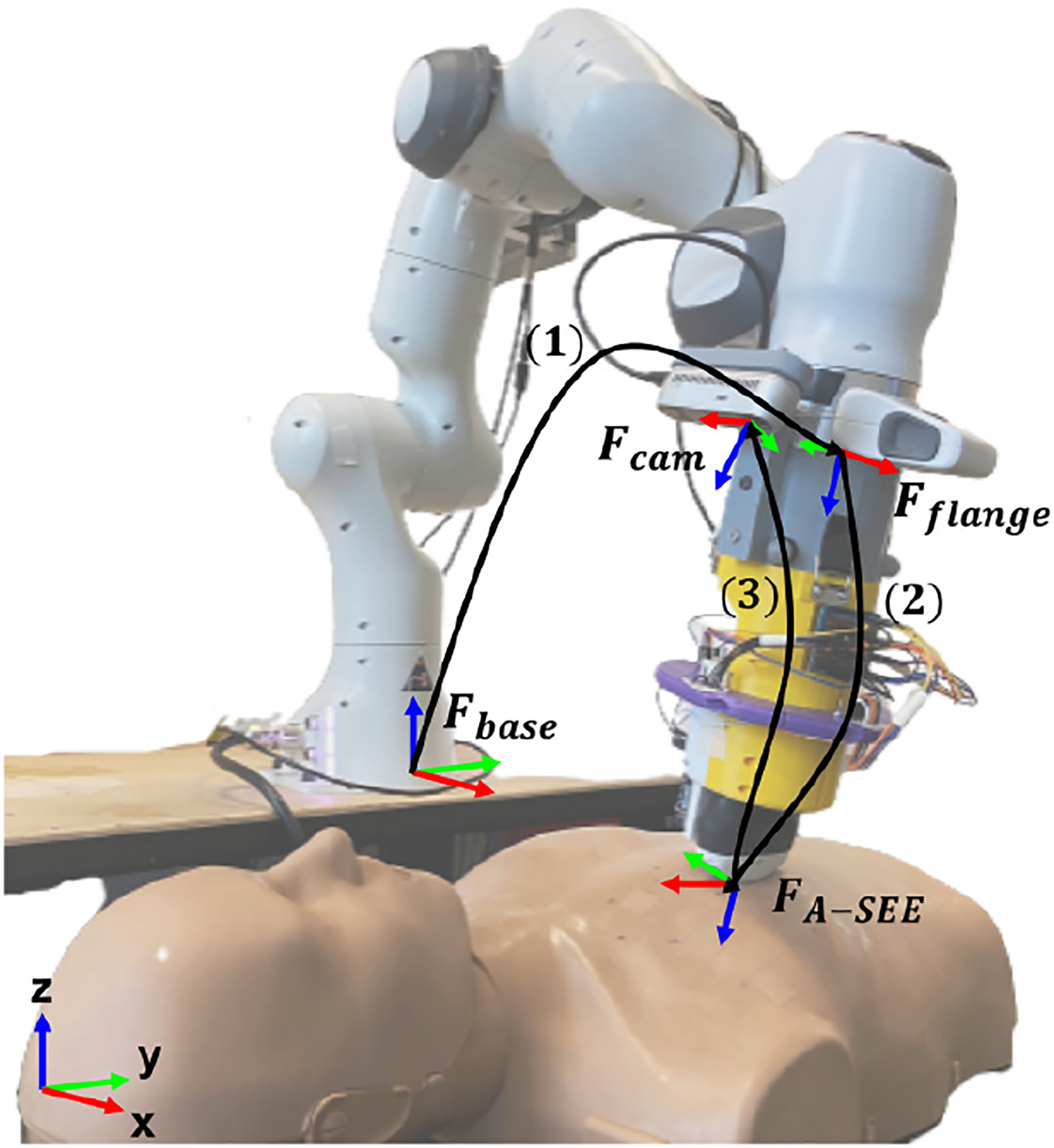

Fig. 1.

Coordinate frame convention for the proposed RUSS. Fbase stands for the robot base frame; Fflange is the flange frame to which the end-effector is attached; Fcam is the RGB-D camera’s frame; FA–SEE is the US probe tip frame; (1) is the transformation from Fbase to Fflange, denoted as ${}^{base}\mathbf{T}_{flange}$; (2) is the transformation from Fflange to FA–SEE, denoted as ${}^{flange}\mathbf{T}_{A\text{-}SEE}$; (3) is the transformation from FA–SEE to Fcam, denoted as ${}^{A\text{-}SEE}\mathbf{T}_{cam}$.

A. Technical Approach Overview

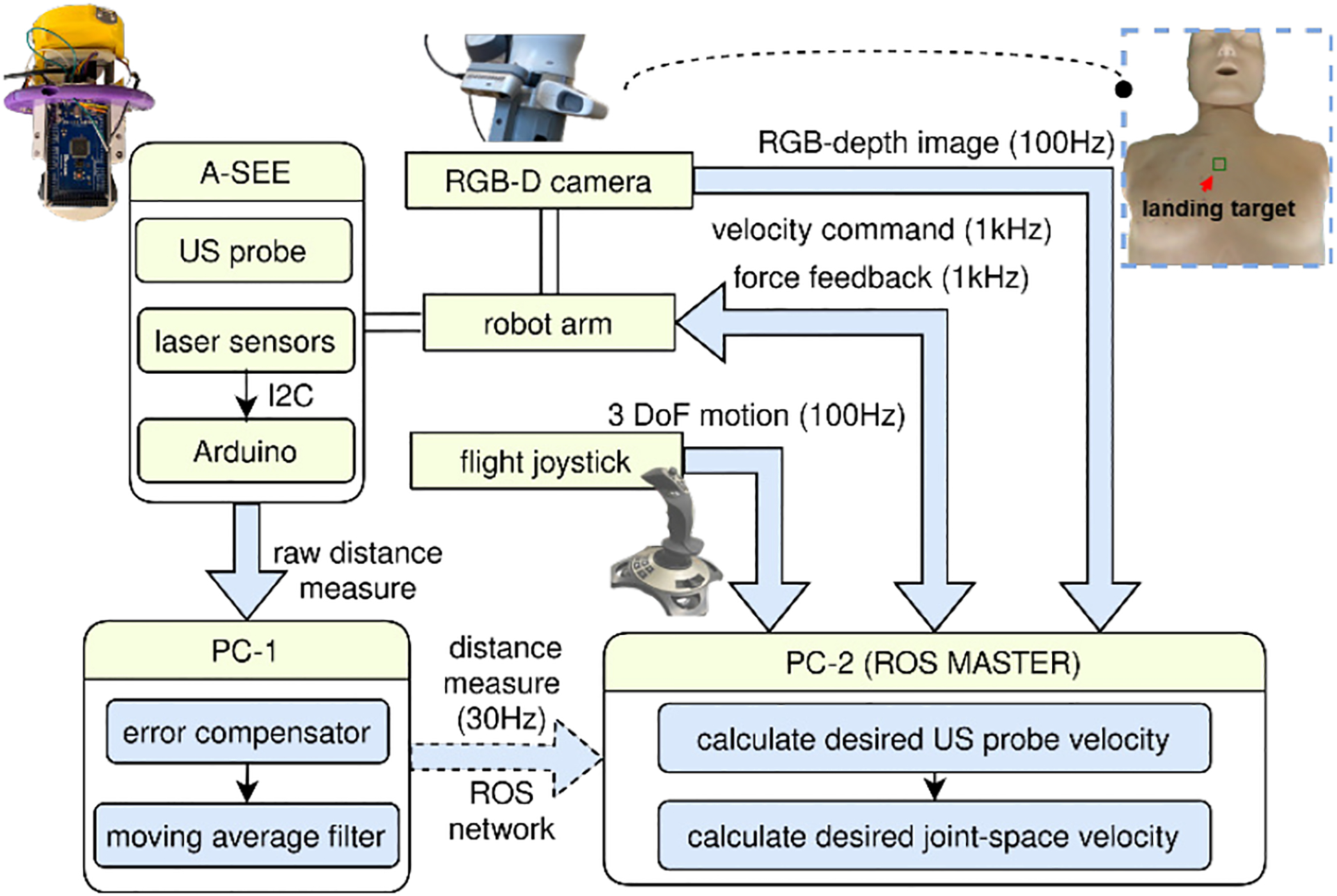

For Task 1), A-SEE consists of four time-of-flight (ToF) laser range finders (VL53L0X, STMicroelectronics, Switzerland) mounted on a sensor ring (see Fig. 2a). The ring encircles a wireless curvilinear US probe (C3HD, Clarius, Canada). We chose laser distance sensors because they offer higher accuracy and angular resolution than other distance-measuring devices such as ultrasonic sensors [29]. The sensors are interfaced with a microcontroller (MEGA2560, Arduino, Italy), which sends the measured distances to a PC (PC-1) through USB serial communication. A clamping mechanism connects A-SEE, together with an RGB-D camera (RealSense D435i, Intel, USA), to a 7-DoF robotic manipulator (Panda, Franka Emika, Germany), forming the A-SEE-RUSS hardware. When the robot is at its home configuration, the camera captures the subject lying on the bed. The camera and the robot are connected to another PC (PC-2), which receives the sensor distance measurements from PC-1 in real time.

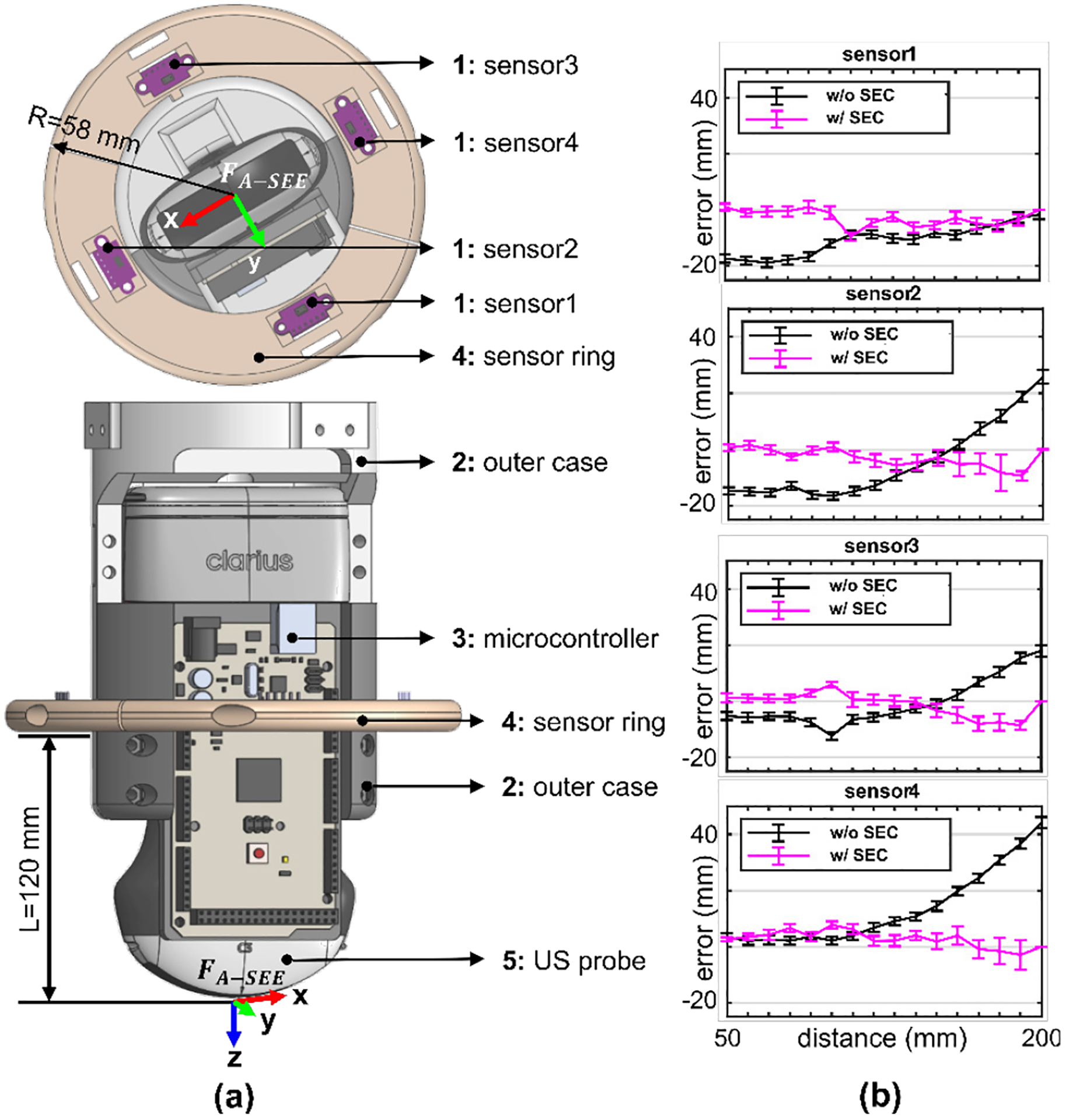

Fig. 2.

A-SEE design and sensor calibration. a) A-SEE CAD model and coordinate frame definition. The sensors are spaced 90 degrees apart. R is the radius of the sensor ring; L is the distance from the bottom of the sensor ring to the tip of the probe. b) Sensor distance measurement error before and after adding the sensor error compensator (SEC).

The US imaging procedure using A-SEE-RUSS is organized as follows. The operator first specifies a landing position on the patient’s body in the camera view. The 3D pose of the landing position relative to the robot’s coordinate frame is approximated using the camera’s depth information and the robot pose measurement. The robot then moves the US probe above the landing pose. A force control strategy is activated to gradually land the probe on the patient’s body and to maintain a constant contact force during imaging (Task 3). Once the probe has landed, A-SEE-RUSS keeps it orthogonal to the surface by concurrently adjusting the in-plane and out-of-plane rotations based on the four distance readings (Task 2). Meanwhile, the operator can use a 3-DoF flight joystick (PXN-2113-SE, PXN, Japan) to freely change the probe’s position and rotate the probe about its axis while collecting US images (Task 4).

B. Sensor Calibration

Since the probe orientation is adjusted based on the sensor readings, the normal positioning performance depends largely on the distance sensing accuracy of the sensors. The purpose of sensor calibration is to model and compensate for the distance sensing error so that the accuracy can be enhanced. First, we tested the accuracy of each sensor by placing a planar object at different distances, from 50 mm to 200 mm in 10 mm intervals, measured with a ruler. The sensing errors were calculated by subtracting the sensor readings from the actual distances. The 50 to 200 mm calibration range was determined experimentally. Within this range, A-SEE can be tilted arbitrarily by 0 to 60 degrees on a flat surface without any sensor reading falling outside the range. Readings outside this range are rejected. The results of the sensor accuracy test are shown in Fig. 2b. The black curves show that the sensing error varies with the sensing distance and that each sensor has a distinct distance-to-error mapping. A sensor error compensator (SEC) is therefore designed as a look-up table that stores the sensing error versus the sensed distance. Given an arbitrary sensor distance input, SEC linearly interpolates the sensing error. Reading the look-up table is described by $\mathbf{e} = \mathrm{SEC}(\mathbf{d}_{raw})$. Here, $\mathbf{d}_{raw} = [d_{raw,1}, \dots, d_{raw,4}]^T$ stores the raw sensor readings, and $\mathbf{e} = [e_1, \dots, e_4]^T$ stores the sensing errors to be compensated. The sensor reading with SEC applied is given by:

$$d_i = \begin{cases} d_{raw,i} + e_i, & d_{min} \le d_{raw,i} \le d_{max} \\ \text{rejected}, & \text{otherwise} \end{cases}, \qquad i = 1, \dots, 4 \tag{1}$$

where $d_{min}$ is 50 mm and $d_{max}$ is 200 mm. With SEC applied, we repeated the same experiment; the magenta curves in Fig. 2b show the resulting sensing accuracy. The mean sensing error was 11.03 ± 1.61 mm before adding SEC and 3.19 ± 1.97 mm after adding SEC. We performed a two-tailed t-test (95% confidence level) with the null hypothesis of no difference in sensing accuracy with and without SEC. The p-value of 9.72 × 10⁻⁸ rejects the null hypothesis, indicating that SEC significantly improves the sensing accuracy. Later in this paper, the effectiveness of SEC is further demonstrated when testing the probe normal positioning accuracy with the robot arm.
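A per-sensor SEC of this kind takes only a few lines of code. The Python sketch below rests on several assumptions. Each look-up table is stored as two ascending lists: the raw readings recorded during calibration and the corresponding errors (actual distance minus reading). The sketch interpolates linearly between entries, clamps to the end entries, and returns `None` for rejected readings. The function names and the example table values are hypothetical; they are not calibration data from this work.

```python
from bisect import bisect_right
from typing import Optional, Sequence

D_MIN_MM, D_MAX_MM = 50.0, 200.0  # accepted raw-reading range from the text


def lut_error(d_raw: float, lut_d: Sequence[float], lut_e: Sequence[float]) -> float:
    """Linearly interpolate the sensing error at raw reading d_raw.

    lut_d: raw readings recorded during calibration, in ascending order.
    lut_e: error (actual distance - reading) at each entry of lut_d.
    Inputs beyond the table ends are clamped to the first/last error.
    """
    if d_raw <= lut_d[0]:
        return lut_e[0]
    if d_raw >= lut_d[-1]:
        return lut_e[-1]
    j = bisect_right(lut_d, d_raw)  # lut_d[j - 1] <= d_raw < lut_d[j]
    d0, d1 = lut_d[j - 1], lut_d[j]
    e0, e1 = lut_e[j - 1], lut_e[j]
    return e0 + (e1 - e0) * (d_raw - d0) / (d1 - d0)


def compensate(d_raw: float, lut_d: Sequence[float],
               lut_e: Sequence[float]) -> Optional[float]:
    """Apply SEC to one reading, or return None if it is out of range."""
    if not D_MIN_MM <= d_raw <= D_MAX_MM:
        return None  # rejected reading
    return d_raw + lut_error(d_raw, lut_d, lut_e)


# Usage with a made-up (hypothetical) three-entry table for a single sensor.
lut_d, lut_e = [40.0, 100.0, 190.0], [10.0, 11.0, 12.0]
print(compensate(145.0, lut_d, lut_e))  # 156.5
print(compensate(230.0, lut_d, lut_e))  # None
```

Each of the four sensors would carry its own table, because Fig. 2b shows a distinct distance-to-error mapping per sensor.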

C. US Probe Self-Normal-Positioning

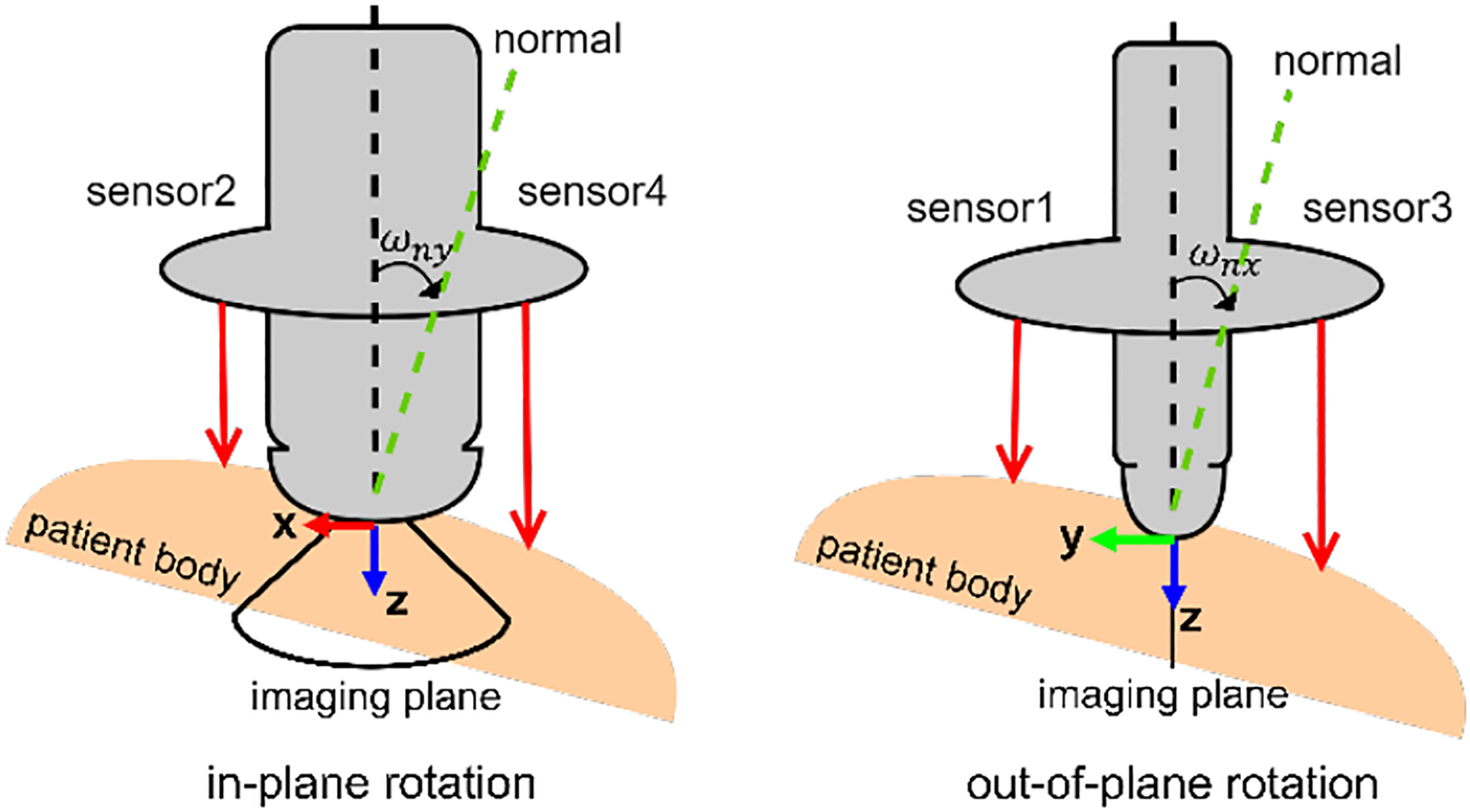

With accurate real-time distance readings from the sensors, A-SEE can be integrated with the robot to enable “spontaneous” motion that tilts the US probe towards the normal direction of the skin surface. A moving average filter is applied to the distance readings to ensure smooth motion. As depicted in Fig. 3, the probe is normal to the surface when two differences are minimized: the difference between the distances measured by sensors 1 and 3, and the difference between the distances measured by sensors 2 and 4. We therefore address Task 2) by simultaneously applying two rotations. The in-plane rotation generates an angular velocity about the y-axis of FA–SEE (ωny), and the out-of-plane rotation generates an angular velocity about the x-axis of FA–SEE (ωnx). The angular velocities about the two axes at timestamp t are given by a PD control law:

$$\omega_{nx}(t) = K_p\, d_{13}(t) + K_d \frac{\Delta d_{13}}{\Delta t}, \qquad \omega_{ny}(t) = K_p\, d_{24}(t) + K_d \frac{\Delta d_{24}}{\Delta t} \tag{2}$$

where Kp and Kd are empirically tuned control gains; d13(t) = d3(t) − d1(t) and d24(t) = d4(t) − d2(t); Δd13 = d13(t) − d13(t − 1) and Δd24 = d24(t) − d24(t − 1); d1 to d4 are the filtered distances from sensors 1 to 4, respectively; and Δt is the control interval. ωnx and ωny are saturated at ±0.1 rad/s, and the angular velocity commands are updated at up to 30 Hz.
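The filtering and the PD law in (2) can be sketched as follows. This minimal Python illustration relies on stated assumptions. The moving-average window length, the gains, and the sign convention are hypothetical; in practice the sign convention depends on how the sensors and FA–SEE axes are laid out in Fig. 2a. Only the following details come from the text: sensors 1 and 3 drive ωnx, sensors 2 and 4 drive ωny, and both commands are limited to ±0.1 rad/s.

```python
from collections import deque

OMEGA_MAX = 0.1  # rad/s, angular-velocity limit stated in the text


class MovingAverage:
    """Fixed-window moving average for one sensor channel."""

    def __init__(self, window: int):
        self.buf = deque(maxlen=window)

    def update(self, x: float) -> float:
        self.buf.append(x)
        return sum(self.buf) / len(self.buf)


def saturate(x: float, limit: float = OMEGA_MAX) -> float:
    """Clip x to [-limit, limit]."""
    return max(-limit, min(limit, x))


class NormalPositioningPD:
    """PD law of (2): sensors 1/3 drive omega_nx, sensors 2/4 drive omega_ny.

    Window length and sign convention are assumptions for illustration.
    """

    def __init__(self, kp: float, kd: float, dt: float, window: int = 5):
        self.kp, self.kd, self.dt = kp, kd, dt
        self.filters = [MovingAverage(window) for _ in range(4)]
        self.prev = None  # (d13, d24) from the previous control step

    def step(self, readings):
        """readings: SEC-compensated (d1, d2, d3, d4) in mm -> (omega_nx, omega_ny)."""
        d1, d2, d3, d4 = (f.update(x) for f, x in zip(self.filters, readings))
        d13, d24 = d3 - d1, d4 - d2
        # Finite differences between control steps; taken as zero on the first step.
        if self.prev is None:
            dd13, dd24 = 0.0, 0.0
        else:
            dd13, dd24 = d13 - self.prev[0], d24 - self.prev[1]
        self.prev = (d13, d24)
        omega_nx = saturate(self.kp * d13 + self.kd * dd13 / self.dt)
        omega_ny = saturate(self.kp * d24 + self.kd * dd24 / self.dt)
        return omega_nx, omega_ny


# Usage with hypothetical gains at a 30 Hz control rate.
ctrl = NormalPositioningPD(kp=0.001, kd=0.0002, dt=1 / 30)
print(ctrl.step((110.0, 120.0, 130.0, 120.0)))
```

Saturating after the PD sum keeps the robot's rotation speed bounded even when the two sensors of a pair disagree strongly, for example at the edge of the body.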

Fig. 3.

Schematic diagram of sensor-based probe normal positioning. Sensors 2 and 4 are mounted in the US imaging plane; sensors 1 and 3 are mounted in a common plane orthogonal to the US imaging plane.

D. US Probe Contact Force Control

Loose contact between the probe and the skin may cause acoustic shadows in the image. To prevent it, a force control strategy is needed to keep the pressing force at an adequate level throughout the imaging process. This strategy is also responsible for landing the probe gently on the body for the patient’s safety. We formulate a force control strategy that adapts the linear velocity along the z-axis of FA–SEE (vnz). The velocity adaptation is a two-stage process that handles the landing and scanning motions separately. During landing, the probe velocity decreases asymptotically as the probe approaches the body surface. During scanning, the probe velocity is adjusted based on the deviation of the measured force from the desired value. The velocity at timestamp t is therefore calculated as:

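$$v_{nz}(t) = w\, v(t) + (1 - w)\, v_{nz}(t - 1) \tag{3}$$

where w is a constant between 0 and 1 that maintains the smoothness of the velocity profile, and v is computed by:

$$v(t) = \begin{cases} K_{p1}\left(\bar{d}(t) - d_{th}\right), & \bar{d}(t) > d_{th} \\ K_{p2}\left(f_d - f_z(t)\right), & \bar{d}(t) \le d_{th} \end{cases}, \qquad \bar{d}(t) = \frac{1}{4}\sum_{i=1}^{4} d_i(t) \tag{4}$$

where $\mathbf{d} = [d_1, \dots, d_4]^T$ is the vector of the four sensor readings after error compensation and filtering, and $\bar{d}$ is their mean. $f_z$ is the force along the z-axis of FA–SEE measured by the robot; it is internally estimated from joint torque readings and then processed with a moving average filter. $f_d$ is the desired contact force, and Kp1 and Kp2 are empirically chosen gains. $d_{th}$ is the single threshold that separates the landing stage from the scanning stage. It is set to the distance from the bottom of the sensor ring to the tip of the probe (120 mm).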
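The two-stage z-velocity law in (3) and (4) can be sketched as below. The sketch makes several assumptions. The stage is selected from the mean of the four compensated readings. Positive velocity points along +z of FA–SEE toward the body, which matches the convention that a positive force error means too little contact force. Distances are in mm and forces in N. The gains and the weight `w` in the usage line are illustrative, not the tuned values used in this work.

```python
D_TH_MM = 120.0  # sensor-ring bottom to probe tip; landing/scanning threshold


def stage_velocity(d, f_z, f_d, kp1, kp2):
    """Raw velocity v(t) along +z of FA-SEE (toward the body, by assumption)."""
    d_mean = sum(d) / len(d)  # assumption: stage chosen from the mean reading
    if d_mean > D_TH_MM:
        # Landing: speed shrinks as the probe approaches the surface.
        return kp1 * (d_mean - D_TH_MM)
    # Scanning: press harder when the measured force is below the setpoint.
    return kp2 * (f_d - f_z)


class ZVelocityController:
    """Blends the raw velocity with the previous command (weight w in [0, 1])."""

    def __init__(self, kp1, kp2, f_d, w):
        self.kp1, self.kp2, self.f_d, self.w = kp1, kp2, f_d, w
        self.v_prev = 0.0

    def step(self, d, f_z):
        v = stage_velocity(d, f_z, self.f_d, self.kp1, self.kp2)
        self.v_prev = self.w * v + (1.0 - self.w) * self.v_prev
        return self.v_prev


# Usage with illustrative gains: the probe is still 50 mm above contact height.
ctrl = ZVelocityController(kp1=0.01, kp2=0.005, f_d=3.5, w=0.2)
print(ctrl.step([170.0, 170.0, 170.0, 170.0], f_z=0.0))
```

Blending with the previous command acts as a first-order low-pass filter. It smooths the switch between the landing and scanning branches at the threshold.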

E. Manual Probe Adjustments via Teleoperation

Combining self-normal-positioning with contact force control of the probe yields an autonomous pipeline that controls 3-DoF probe motion. A shared control scheme gives the operator manual control of the remaining three DoFs, concurrently with the three automated ones: the translations along the x- and y-axes and the rotation about the z-axis. A 3-DoF joystick is used as the input source. Its movements along the three axes are mapped to the probe’s linear velocities along the x- and y-axes (vtx, vty) and its angular velocity about the z-axis (ωtz), all expressed in FA–SEE.

F. The RUSS Imaging Workflow

An A-SEE-RUSS providing 6-DoF control of the US probe is built by incorporating self-normal-positioning, contact force control, and teleoperation of the probe. A block diagram of the integrated system is shown in Fig. 4. To demonstrate the proposed A-SEE-RUSS, we define a RUSS imaging workflow containing preoperative and intraoperative steps. In the preoperative step, the patient lies on the bed next to the robot. The robot is at its home configuration so that the RGB-D camera can capture the patient’s body. The operator selects a region of interest in the camera view (top right corner of Fig. 4) as the initial probe landing position. Using the camera’s depth information, the landing position in 2D image space is converted into a 3D landing pose above the patient’s body relative to Fcam, following the same approach as in [28]. The landing pose relative to Fbase is then obtained by:

$${}^{base}\mathbf{T}_{land} = {}^{base}\mathbf{T}_{flange}\;{}^{flange}\mathbf{T}_{A\text{-}SEE}\;{}^{A\text{-}SEE}\mathbf{T}_{cam}\;{}^{cam}\mathbf{T}_{land} \tag{5}$$

where ${}^{flange}\mathbf{T}_{A\text{-}SEE}$ and ${}^{A\text{-}SEE}\mathbf{T}_{cam}$ are calibrated from the CAD model of the A-SEE-RUSS, and ${}^{base}\mathbf{T}_{flange}$ is obtained from the robot pose measurement. The robot then moves the probe to the landing pose using a velocity-based PD controller [28]. In the intraoperative step, the landing-stage force control strategy gradually brings the probe into contact with the skin. Once the probe is in contact with the body, the operator can slide the probe on the body and rotate it about its long axis via the joystick. Meanwhile, the robot joint velocities are commanded to generate probe velocities in FA–SEE, so that the probe is dynamically held in the normal direction and pressed with a constant force. The desired probe velocities are formed as:

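$${}^{A\text{-}SEE}\mathcal{V} = \begin{bmatrix} v_{tx} & v_{ty} & v_{nz} & \omega_{nx} & \omega_{ny} & \omega_{tz} \end{bmatrix}^T \tag{6}$$

Transforming them to velocities expressed in Fbase yields:

$${}^{base}\mathcal{V} = \begin{bmatrix} \mathbf{R} & [\mathbf{p}]\,\mathbf{R} \\ \mathbf{0} & \mathbf{R} \end{bmatrix} {}^{A\text{-}SEE}\mathcal{V} \tag{7}$$

where $\mathbf{R}$ is the rotational component of ${}^{base}\mathbf{T}_{A\text{-}SEE} = {}^{base}\mathbf{T}_{flange}\,{}^{flange}\mathbf{T}_{A\text{-}SEE}$, and $[\mathbf{p}]$ is the skew-symmetric matrix of its translational component $\mathbf{p}$.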
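Equations (5) and (7) are standard rigid-body operations. The NumPy sketch below composes the frame chain of (5) for a landing point and applies the 6×6 adjoint of (7) to a twist ordered as [v; ω]. The function names are hypothetical. Using a point rather than a full pose for the landing target is a simplification for illustration.

```python
import numpy as np


def skew(p: np.ndarray) -> np.ndarray:
    """Skew-symmetric matrix [p] such that [p] @ x == np.cross(p, x)."""
    x, y, z = p
    return np.array([[0.0, -z, y],
                     [z, 0.0, -x],
                     [-y, x, 0.0]])


def landing_point_in_base(T_base_flange, T_flange_asee, T_asee_cam, p_cam):
    """Chain 4x4 homogeneous transforms to express a camera-frame point in F_base."""
    p_h = np.append(np.asarray(p_cam, dtype=float), 1.0)
    return (T_base_flange @ T_flange_asee @ T_asee_cam @ p_h)[:3]


def twist_to_base(T_base_asee: np.ndarray, twist_asee: np.ndarray) -> np.ndarray:
    """Map a twist [v; w] expressed in F_A-SEE to F_base via [[R, [p]R], [0, R]]."""
    R, p = T_base_asee[:3, :3], T_base_asee[:3, 3]
    adj = np.zeros((6, 6))
    adj[:3, :3] = R
    adj[:3, 3:] = skew(p) @ R
    adj[3:, 3:] = R
    return adj @ np.asarray(twist_asee, dtype=float)


# Usage: probe frame 1 m above the base origin, rotating about its own x-axis.
T = np.eye(4)
T[:3, 3] = [0.0, 0.0, 1.0]
print(twist_to_base(T, [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]))
```

With this adjoint, the linear part of the result is the velocity of the point instantaneously at the base origin, not of the probe tip. The Jacobian used in the next step must therefore follow the same twist convention.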

Fig. 4.

Block diagram of A-SEE-RUSS. Blue arrows represent data transmission pipelines that are built under Robot Operating System (ROS) framework. White tubes represent rigid mechanical connections.

Lastly, the joint-space velocity command that will be sent to the robot for execution is obtained by:

$$\dot{\mathbf{q}} = \mathbf{J}^{+}\;{}^{base}\mathcal{V} \tag{8}$$

where $\mathbf{J}^{+}$ is the Moore-Penrose pseudo-inverse of the robot Jacobian matrix $\mathbf{J}$, ${}^{base}\mathcal{V}$ is the probe velocity expressed in Fbase, and $\dot{\mathbf{q}}$ is the vector of joint velocities. During scanning, the US images are streamed and displayed to the operator, who decides when to terminate the procedure. The robot moves back to its home configuration after the scan is completed. Readers may refer to the video in the supplementary material, which demonstrates the complete imaging procedure.
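Equation (8) maps the base-frame probe velocity to joint velocities. A minimal NumPy sketch is below. The optional uniform scaling against a per-joint limit is our own hypothetical addition and is not described in the text; it preserves the commanded direction. In practice, `J` would come from the robot's kinematic model rather than the random stand-in used here.

```python
import numpy as np


def joint_velocity_command(J, twist_base, qdot_max=None):
    """Solve qdot = pinv(J) @ twist for a (6, n) Jacobian.

    qdot_max (hypothetical addition): per-joint speed limits; if any joint
    would exceed its limit, all joints are scaled down by the same factor.
    """
    qdot = np.linalg.pinv(J) @ np.asarray(twist_base, dtype=float)
    if qdot_max is not None:
        ratio = np.max(np.abs(qdot) / np.asarray(qdot_max, dtype=float))
        if ratio > 1.0:
            qdot = qdot / ratio
    return qdot


# Usage with a random 6x7 Jacobian standing in for a 7-DoF arm.
rng = np.random.default_rng(0)
J = rng.standard_normal((6, 7))
twist = np.array([0.01, 0.0, 0.0, 0.0, 0.05, 0.0])
qdot = joint_velocity_command(J, twist)
print(np.allclose(J @ qdot, twist))  # True when J has full row rank
```

For a redundant 7-DoF arm, the pseudo-inverse picks the minimum-norm joint velocity that realizes the requested twist.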

III. Experiment Setup

We designed experiments to validate the following aspects of the A-SEE-RUSS: i) the accuracy of self-normal-positioning on flat and uneven surfaces; ii) the quality of the US images collected using A-SEE-RUSS; iii) the contact force control performance during imaging.

A. Validate Self-Normal-Positioning

Two experiments were designed to evaluate the self-normal-positioning performance. The robot tracked the normal direction of a flat surface (expt. A1) and of an uneven surface (expt. A2). Expt. A1 evaluates the effectiveness of SEC and the accuracy of self-normal-positioning under ideal conditions, whereas expt. A2 assesses the feasibility of tracking the normal direction of a human body. Two vectors were recorded during the experiments: the approach vector (z-axis) of FA–SEE, $\mathbf{z}_p$, and the ground-truth normal of the scanned surface, $\mathbf{n}_s$. The normal positioning error, defined as the angle between $\mathbf{z}_p$ and $\mathbf{n}_s$, was used as the evaluation metric. To estimate $\mathbf{z}_p$, four reflective optical tracking markers were attached to the end-effector at the same distance from the probe tip. These markers defined a rigid body frame (RBF1 in Fig. 5a) whose pose was tracked in real time using a motion capture system (Vantage, Vicon Motion Systems Ltd, UK). The z-axis of RBF1 was aligned with $\mathbf{z}_p$. The method used to estimate $\mathbf{n}_s$ differed between the experiments and is explained separately for each. In both experiments, only rotations about the x- and y-axes of FA–SEE were enabled.
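The normal positioning error defined above is the angle between two 3D vectors. A small Python sketch follows. It assumes both vectors are expressed in the same motion-capture frame. The optional `undirected` flag is our own addition. It treats the vectors as lines, which is useful if a marker frame's z-axis happens to point out of the surface while the probe approach vector points into it.

```python
import math
from typing import Sequence


def normal_positioning_error_deg(z_probe: Sequence[float], n_surface: Sequence[float],
                                 undirected: bool = False) -> float:
    """Angle in degrees between the probe approach vector and the surface normal."""
    dot = sum(a * b for a, b in zip(z_probe, n_surface))
    cos_angle = dot / (math.hypot(*z_probe) * math.hypot(*n_surface))
    if undirected:
        cos_angle = abs(cos_angle)  # assumption: ignore which way each vector points
    # Clamp to [-1, 1] so rounding noise cannot push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


# Usage: a probe tilted 45 degrees away from a surface normal along +z.
print(normal_positioning_error_deg((0.0, 0.0, 1.0), (0.0, 1.0, 1.0)))
```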

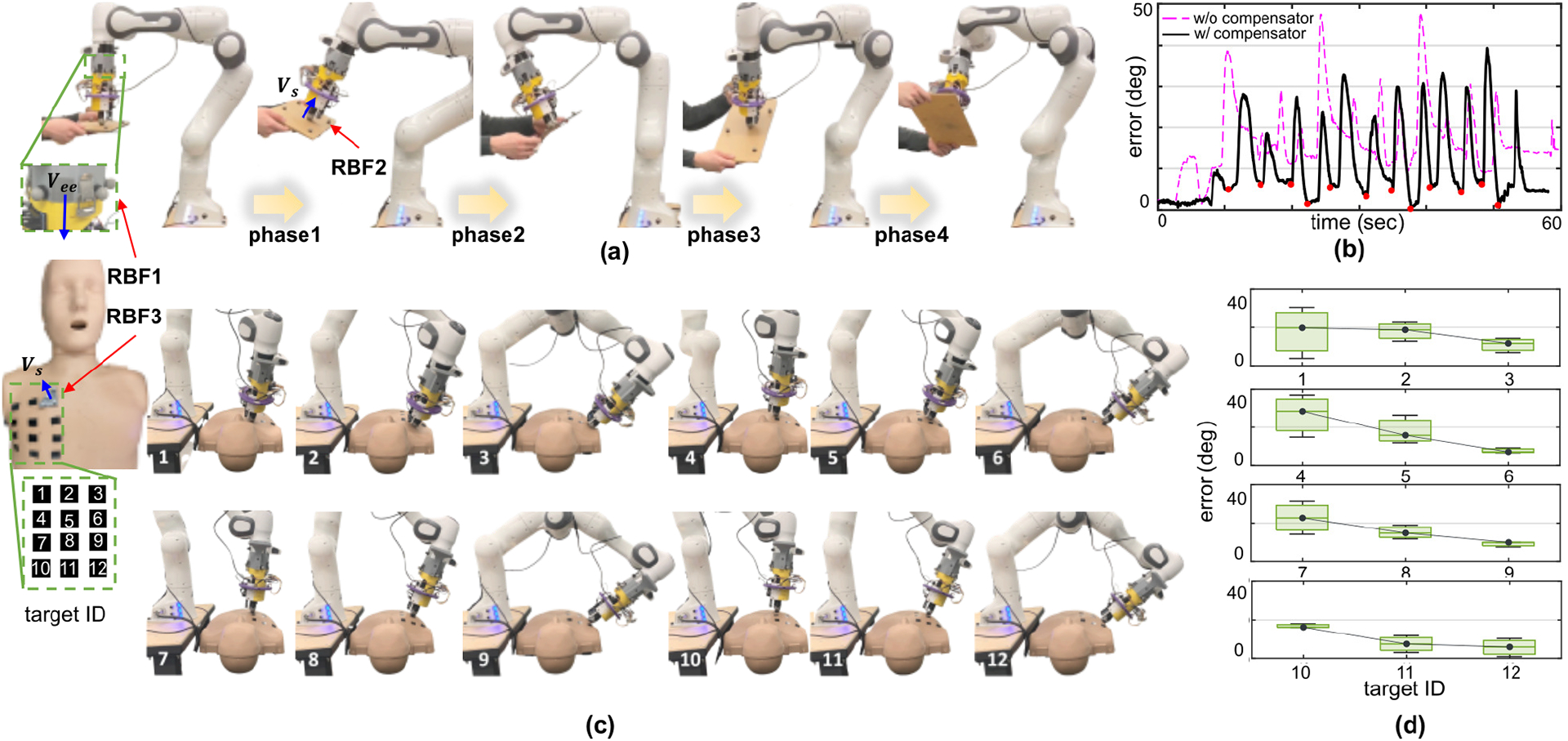

Fig. 5.

Self-normal-positioning experiment. a) Testing self-normal-positioning on a flat surface. Readers may refer to the downloadable supplementary material for the complete experiment video. b) The recorded normal positioning error on the flat surface over time. c) Testing self-normal-positioning on a mannequin surface. d) The normal positioning error recorded at 12 targets on the mannequin.

In expt. A1, four optical markers on the flat surface formed a rigid body frame (RBF2 in Fig. 5a) whose z-axis was perpendicular to the surface, representing the ground-truth surface normal $\mathbf{n}_s$. An operator held the plane against the US probe and changed its orientation in four phases. In each phase, the operator altered $\mathbf{n}_s$ by tilting the plane to a certain angle, and the robot then tracked the new $\mathbf{n}_s$ using the self-normal-positioning feature. Once the robot finished adjusting the angle, the operator moved on to the next phase. The four phases were cycled three times, during which the probe approach vector $\mathbf{z}_p$ and $\mathbf{n}_s$ were recorded. The procedure was performed twice, once with SEC enabled and once with SEC disabled, and the normal positioning errors were calculated for both cases. In the remaining experiments, SEC was always enabled.

In expt. A2, we used the LUS examination to test self-normal-positioning on a surface resembling the human body. According to one of the recent clinical protocols [30], the LUS procedure requires scanning both the anterior and lateral chest. Covering the anterior to the lateral chest requires a large range of angle adjustment. LUS is therefore a more challenging case than other examination types such as abdominal, cardiac, and spinal US, which makes it suitable for verifying the self-normal-positioning performance. An upper torso mannequin (Prestan CPR-AED Training Manikin, MCR Medical, USA) was used as the experiment subject. Following [30], 12 targets were defined on the mannequin, covering the left anterior to the left lateral chest. As shown in Fig. 5c, targets 1, 4, 7, and 10 were in the anterior region; targets 3, 6, 9, and 12 were in the lateral region; and targets 2, 5, 8, and 11 fell in between. For each target, the ground-truth surface normal $\mathbf{n}_s$ was estimated by attaching a small pad with four optical trackers to the mannequin. The z-axis of the rigid body frame defined by these trackers (RBF3 in Fig. 5c) was then tracked. We manually configured the robot to land the US probe on each target. With self-normal-positioning enabled, the robot automatically oriented the probe towards $\mathbf{n}_s$. The probe approach vector $\mathbf{z}_p$ was recorded once the robot finished the adjustment. This process was repeated three times at each target, and the normal positioning errors were calculated accordingly.

B. Validate US Image Quality

A user study was conducted to examine the ability of A-SEE-RUSS to acquire good-quality images. The study was approved by the institutional research ethics committee at the Worcester Polytechnic Institute (No. IRB-21–0613). Written informed consent was obtained from the volunteers prior to all test sessions. We recruited three volunteers with different levels of US imaging experience. A LUS phantom capable of mimicking patient body texture and respiratory motion (COVID-19 Live Lung Ultrasound Simulator, CAE Healthcare™, USA) was used as the experiment subject. Realistic US images can be acquired from the phantom, and these images were used for the US image quality assessment. Prior to the study, eight imaging targets were labeled on the phantom surface (see Fig. 6b). The phantom’s respiratory motion was enabled throughout image acquisition. At the beginning of the study, the US probe was automatically landed near target 1. The volunteers then teleoperated the probe to the center of the target while A-SEE dynamically optimized the probe orientation. Ten images were sampled after the volunteer finished adjusting the probe position. The probe was then teleoperated to the next target, and this continued until all targets were covered. For the control group, each volunteer then manually placed the probe on the targets and tilted it to subjectively find the orientation giving the best image contrast. Ten images were collected from each target at the optimal viewing angle determined by the volunteer.

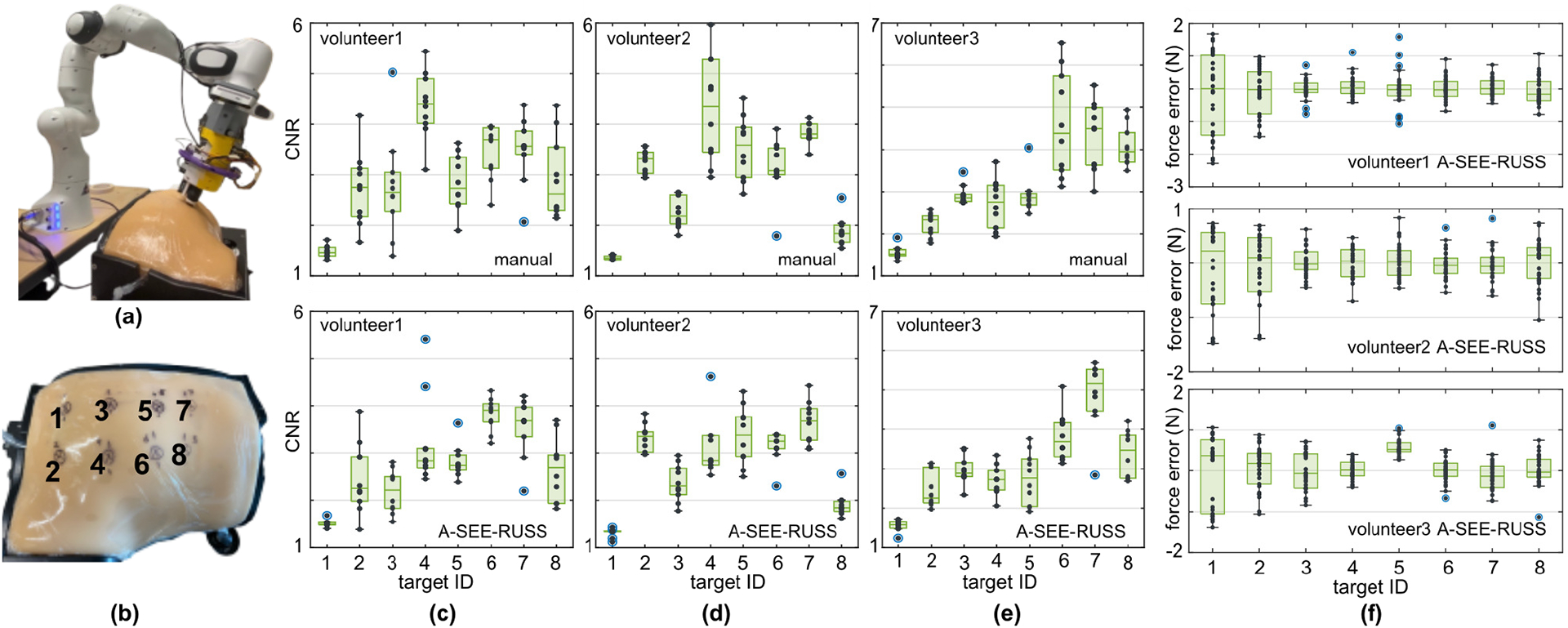

Fig. 6.

US image quality and contact force control user study. a) Experiment setup where the robot scans the LUS phantom. b) The eight pre-identified imaging targets on the lung phantom, labeled by black crosses for the study volunteers’ reference. c–e) The image quality (CNR) of the US images collected from the eight targets (ten images per target) using freehand scanning (top) and A-SEE-RUSS (bottom) by volunteer 1 (professional sonographer), volunteer 2 (inexperienced operator), and volunteer 3 (inexperienced operator), respectively. f) The recorded force control error when scanning each target under the phantom’s respiratory motion for the three volunteers.

Since the lung pleural line is often inspected during LUS [30], we used the visibility of this landmark, quantified by the contrast-to-noise ratio (CNR), as the image quality measure. Given a rectangular region of interest (ROI) on the landmark, the CNR is defined as

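$$\mathrm{CNR} = \frac{\left|\mu_{roi} - \mu_{bg}\right|}{\sqrt{\sigma_{roi}^{2} + \sigma_{bg}^{2}}} \tag{9}$$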

where μroi and σroi are the mean and the standard deviation of the pixel intensities in the ROI, μbg and σbg are the mean and the standard deviation of the pixel intensities in the image background. The CNR of the pleural line was calculated for the two sets of images collected using freehand scanning and using A-SEE-RUSS, respectively. The ROI area was fixed to ten-by-ten pixels when computing the CNR.
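The CNR in (9) reduces to a few statistics over two pixel sets. The sketch below assumes grayscale images stored as nested lists of intensities, a 10 × 10 ROI given by its top-left corner, and population standard deviations. The text does not specify how the background region is chosen, so the `crop` helper is hypothetical and may be applied to any background patch.

```python
import math
from statistics import mean, pstdev


def crop(img, top, left, h=10, w=10):
    """Flatten an h-by-w patch of a row-major grayscale image (hypothetical helper)."""
    return [px for row in img[top:top + h] for px in row[left:left + w]]


def cnr(roi, background):
    """Contrast-to-noise ratio between ROI and background pixel intensities."""
    mu_r, mu_b = mean(roi), mean(background)
    s_r, s_b = pstdev(roi), pstdev(background)  # assumption: population std
    return abs(mu_r - mu_b) / math.sqrt(s_r ** 2 + s_b ** 2)


# Usage on a synthetic image: a bright band (pleural-line stand-in) on a dark background.
img = [[200 + (c % 3) if 20 <= r < 30 else 40 + (c % 5) for c in range(64)]
       for r in range(64)]
print(cnr(crop(img, 20, 10), crop(img, 45, 10)))
```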

C. Validate Contact Force Control

In the above user study, we further verified the effectiveness of the force control strategy. The force at the probe tip measured by the robot was recorded at 30 Hz throughout the study. The desired contact force was empirically set to a constant 3.5 N. We used the force control error as the quantification metric, calculated by subtracting the measured force from the desired force.

IV. Results

A. Evaluation of Self-Normal-Positioning

The normal positioning error versus time from expt. A1 is shown in Fig. 5b. The error first peaked when the user rotated the plane and then decreased rapidly as the robot aligned the probe with the normal direction. The valley after each peak in the error curve is the minimum normal positioning error achieved: 4.17 ± 2.24 degrees with SEC and 12.51 ± 2.69 degrees without SEC. A two-tailed t-test (95% confidence level) was performed with the null hypothesis of no difference in the errors with and without SEC. The t-test returned a p-value of 6.33 × 10⁻⁸. The null hypothesis is therefore rejected, indicating that SEC significantly improves the normal positioning accuracy. This accuracy is comparable to that of the similar-purpose work in [20]. The time to travel from one peak to the next valley in the curve can be interpreted as the response time of the normal positioning. It was 3.67 ± 0.84 seconds with SEC and 3.71 ± 1.50 seconds without SEC. Another t-test (95% confidence level) on the response time with and without SEC returned a p-value of 0.67; therefore, SEC has no significant effect on the response time of self-normal-positioning. Overall, the response speed was sufficient to maintain the normal direction while the operator teleoperated the probe during the experiments in III.B and III.C. Assigning more aggressive control gains in (2) could shorten the orientation adjustment time and help cope with unexpected patient movements in actual clinical situations.

Fig. 5d shows the normal positioning errors when scanning the mannequin in expt. A2. The errors were 28.76 ± 8.84 degrees for the anterior targets (1, 4, 7, 10), 17.80 ± 8.46 degrees for the anterior-lateral targets (2, 5, 8, 11), and 8.52 ± 3.85 degrees for the lateral targets (3, 6, 9, 12). The accuracy in the lateral region was noticeably higher than in the anterior region. However, no drastic change in the errors was observed as the robot moved from the neck region (targets 1, 2, 3) towards the abdominal region (targets 10, 11, 12). The mean error over all 12 targets was 14.67 ± 8.46 degrees, showing generally acceptable self-normal-positioning performance for the anterior targets and the lateral targets.

B. Evaluation of US Image Quality

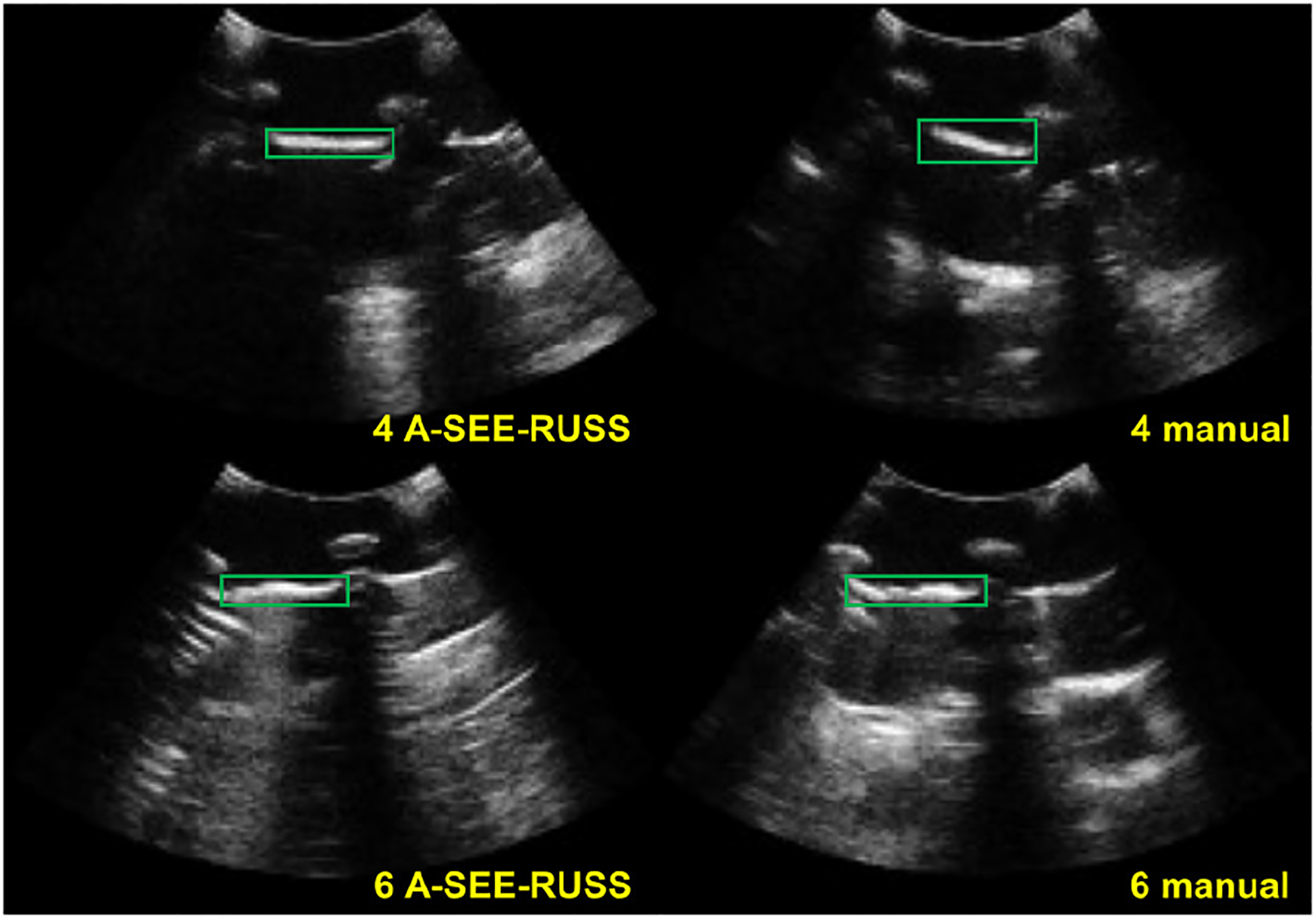

Fig. 6c–e show the CNR of all images acquired by the volunteers using the two methods. The mean CNR of the manually obtained images was 3.01 ± 1.01, 3.00 ± 1.31, and 3.17 ± 1.17 for volunteers 1, 2, and 3, respectively. The mean CNR of the robotically collected images was 2.78 ± 0.90, 2.81 ± 0.97, and 3.06 ± 1.07, respectively. The lower standard deviation of the CNR indicates that image quality was more consistent with A-SEE-RUSS. The images collected using A-SEE-RUSS exhibit a lower mean CNR. However, t-tests (95% confidence level) yielded p-values of 0.21, 0.28, and 0.53, indicating no statistically significant difference in CNR. Therefore, A-SEE-RUSS and manual scanning achieve comparable image quality. Representative images obtained by volunteer 1 from targets 4 and 6 are shown in Fig. 7. The lung pleural lines are clearly visible in both the robotically and manually scanned images.

Fig. 7.

Representative US images from imaging targets 4 and 6 acquired by volunteer 1 (professional sonographer) using freehand scanning and A-SEE-RUSS. At target 4, the CNR of the pleural line (bounded by green rectangles) is higher in the manually collected image than in the one collected by A-SEE-RUSS; at target 6, the opposite holds.

C. Evaluation of Contact Force Control

Like the US image quality evaluation (IV.B), the contact force control evaluation was based on the LUS phantom, which reproduces patient respiratory motion. Fig. 6f shows the force control errors while each volunteer was imaging the targets. Positive errors indicate less contact force than the desired value, and negative errors indicate the opposite. The force errors mostly remained within ±1.0 N, with a mean force error of 0.07 ± 0.49 N. The relatively large standard deviation is caused by the simulated breathing. Nevertheless, the contact force controller stabilized the force around the desired setpoint in the presence of respiratory motion.

V. Discussion and Conclusions

We present a novel end-effector, A-SEE, that gives a RUSS real-time probe self-normal-positioning capability. Normal positioning requires neither a preoperative scan of the patient's body [18] nor extra probe motion to search for the normal direction [20]. This feature is valuable for advancing existing RUSS implementations toward fully autonomous ones. The A-SEE hardware costs under $100 to build, excluding the US probe (as of 2022). Although we only demonstrated normal positioning, tilting the probe in arbitrary directions based on distance sensing is also viable if the sonographer intends to view through different acoustic windows when imaging the liver or other organs. The normal positioning accuracy was 4.17 ± 2.24 degrees in the ideal case (flat surface) and 14.67 ± 8.46 degrees in a more realistic scenario (mannequin). While the result in the ideal case is promising, performance on a more complex surface is degraded, because A-SEE cannot sense the surface within the radius of the probe's outer case (35 mm). Uneven topographical landmarks, such as the shoulder blades and pectoral muscles, are located in the anterior region near the neck of the mannequin, which explains the lower normal positioning accuracy in these regions (e.g., targets 1, 2, 4, and 5). In contrast, the lateral surface of the mannequin is smoother, bringing the normal positioning errors closer to those of the flat surface case. Optimizing the sensor mounting angle and reducing the outer case dimensions would allow the lasers to be emitted closer to the probe tip, which may mitigate this issue. Nevertheless, the proof-of-concept A-SEE design demonstrates the orientation adjustment. Experiments on the contact force during scanning yielded control errors mostly within ±1.0 N, showing the effectiveness of the force control strategy under challenging conditions where respiratory motion and dynamic orientation adjustments coexist. Furthermore, the image quality analysis indicates that A-SEE-RUSS acquires images of quality comparable to freehand US. Overall, the experimental results indicate the potential of the proposed system.
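The discussion attributes the accuracy gap to where the lasers sample the surface. The Python sketch below makes that geometry concrete under simplifying assumptions that are ours, not A-SEE's: four beams parallel to the probe axis, placed in a cross at a hypothetical radius `r` in a hypothetical sensor order, with a plane fitted through the four hit points. The actual A-SEE sensors are mounted at an angle, and its rotation estimation is described in the paper's methods rather than reproduced here. `angle_deg` also shows one way to express a normal positioning error, assuming it is measured as the angle between the probe axis and the ground-truth surface normal.

```python
import math


def estimate_surface_normal(d1, d2, d3, d4, r):
    """Fit the plane z = a*x + b*y + c to four distance readings.

    Hypothetical layout: sensors 1..4 at (r, 0), (0, r), (-r, 0), (0, -r)
    in the probe frame, beams along +z (toward the skin). Returns the unit
    outward normal, which points back toward the probe (negative z).
    """
    a = (d1 - d3) / (2.0 * r)  # slope dz/dx from the x-axis sensor pair
    b = (d2 - d4) / (2.0 * r)  # slope dz/dy from the y-axis sensor pair
    norm = math.sqrt(a * a + b * b + 1.0)
    return (a / norm, b / norm, -1.0 / norm)


def angle_deg(u, v):
    """Angle in degrees between two 3-D vectors."""
    dot = sum(ui * vi for ui, vi in zip(u, v))
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    c = max(-1.0, min(1.0, dot / (nu * nv)))  # clamp against rounding error
    return math.degrees(math.acos(c))


def alignment_rotation(normal):
    """Axis-angle (unit axis, radians) rotating the probe axis +z onto -normal."""
    m = tuple(-c for c in normal)     # desired probe direction: into the skin
    axis = (-m[1], m[0], 0.0)         # cross product z x m
    s = math.hypot(axis[0], axis[1])  # |z x m| = sin(theta)
    if s < 1e-12:
        return (1.0, 0.0, 0.0), 0.0   # already aligned; any axis works
    return (axis[0] / s, axis[1] / s, 0.0), math.atan2(s, m[2])


if __name__ == "__main__":
    # Hypothetical flat surface tilted so that z = 100 + 0.1 * x (mm), r = 35 mm.
    n = estimate_surface_normal(103.5, 100.0, 96.5, 100.0, 35.0)
    tilt = angle_deg((0.0, 0.0, 1.0), tuple(-c for c in n))
    axis, angle = alignment_rotation(n)
    print(f"tilt = {tilt:.2f} deg, axis = {axis}, angle = {math.degrees(angle):.2f} deg")
```

Because the fitted plane passes through points at radius `r` from the probe axis, it recovers the normal exactly on a flat tilted surface but only averages the slope of a curved surface over that radius, which is consistent with the larger errors reported near uneven landmarks.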

One limitation of this work is the lack of evaluation on human patients in real clinical environments. While we demonstrated self-normal-positioning on various surfaces in a lab environment, laser sensor performance has been reported to be vulnerable to ambient light [29]. In vivo studies are needed to test the usability of our system in hospitals. The presented experiments simulated upper-body imaging use cases; however, with the current sensor layout, sensors 2 and 4 may fail to detect the skin when imaging the limbs. In future work, we will miniaturize the A-SEE design to extend its applicability beyond upper-body imaging. Leveraging US image information to fine-tune the probe orientation [32] can also be beneficial when body surface information is less useful (e.g., when the surface is highly curved). Experiments on human subjects will be conducted to elucidate the clinical value of our system.

Supplementary Material

Acknowledgments

The authors would like to thank Prof. Jeffrey C. Hill for assisting with the user study.

This work is supported by the National Institutes of Health (DP5 OD028162).

References

- [1] Van Metter RL, Beutel J, and Kundel HL, Handbook of Medical Imaging, vol. 1. Bellingham, WA, USA: SPIE Press, 2000.

- [2] Afshar M et al., “Autonomous Ultrasound Scanning to Localize Needle Tip in Breast Brachytherapy,” 2020 Int. Symp. on Medical Robotics (ISMR), Nov. 2020.

- [3] Sen HT et al., “System Integration and In Vivo Testing of a Robot for Ultrasound Guidance and Monitoring During Radiotherapy,” IEEE Trans. on Biomed. Eng., vol. 64, no. 7.

- [4] Hoskins PR, Martin K, and Thrush A, Diagnostic Ultrasound: Physics and Equipment. CRC Press, 2019.

- [5] von Haxthausen F, Bottger S, Wulff D, Hagenah J, García-Vázquez V, and Ipsen S, “Medical robotics for ultrasound imaging: current systems and future trends,” Current Robotics Reports, vol. 2, Feb. 2021.

- [6] Scorza A, Conforto S, D’Anna C, and Sciuto SA, “A comparative study on the influence of probe placement on quality assurance measurements in B-mode ultrasound by means of ultrasound phantoms,” Open Biomed. Eng. J., vol. 9, Jul. 2015.

- [7] Shah S, Bellows BA, Adedipe AA, Totten JE, Backlund BH, and Sajed D, “Perceived barriers in the use of ultrasound in developing countries,” Crit. Ultrasound J., vol. 7.

- [8] Abramowicz JS and Basseal JM, “World federation for ultrasound in medicine and biology position statement: how to perform a safe ultrasound examination and clean equipment in the context of COVID-19,” Ultrasound in Medicine & Biology, vol. 46, no. 7, pp. 1821–1826, Jul. 2020.

- [9] Priester AM, Natarajan S, and Culjat MO, “Robotic ultrasound systems in medicine,” IEEE Trans. Ultrasonics, Ferroelect., Freq. Control, vol. 60, Mar. 2013.

- [10] Salcudean SE, Moradi H, Black DG, and Navab N, “Robot-assisted medical imaging: a review,” Proceedings of the IEEE, doi: 10.1109/JPROC.2022.3162840.

- [11] Li K, Xu Y, and Meng MQ-H, “An overview of systems and techniques for autonomous robotic ultrasound acquisitions,” IEEE Trans. on Medical Robotics and Bionics, vol. 3, May 2021.

- [12] Virga S et al., “Automatic force-compliant robotic ultrasound screening of abdominal aortic aneurysms,” IEEE/RSJ Int. Conf. Intelligent Robots and Systems, pp. 508–513, 2016.

- [13] Ma G, Oca SR, Zhu Y, Codd PJ, and Buckland DM, “A Novel Robotic System for Ultrasound-guided Peripheral Vascular Localization,” IEEE Int. Conf. on Robotics and Automation (ICRA), May 2021.

- [14] Hennersperger C et al., “Towards MRI-Based Autonomous Robotic US Acquisitions: A First Feasibility Study,” IEEE Trans. on Med. Imaging, vol. 36, 2017.

- [15] Kaminski JT, Rafatzand K, and Zhang HK, “Feasibility of robot-assisted ultrasound imaging with force feedback for assessment of thyroid diseases,” Medical Imaging 2020: Image-Guided Procedures, Robotic Interventions, and Modeling, vol. 11315, 2020.

- [16] Boezaart A and Ihnatsenka B, “Ultrasound: basic understanding and learning the language,” Int. J. of Shoulder Surgery, vol. 4.

- [17] Kojcev R et al., “On the reproducibility of expert-operated and robotic ultrasound acquisitions,” Int. J. of Computer Assisted Radiology and Surgery, vol. 12, Mar. 2017.

- [18] Huang Q, Lan J, and Li X, “Robotic arm based automatic ultrasound scanning for three-dimensional imaging,” IEEE Trans. on Ind. Inform., vol. 15.

- [19] Yang C, Jiang M, Chen M, Fu M, Li J, and Huang Q, “Automatic 3-D imaging and measurement of human spines with a robotic ultrasound system,” IEEE Trans. on Instrumentation and Measurement, vol. 70.

- [20] Jiang Z et al., “Automatic normal positioning of robotic ultrasound probe based only on confidence map optimization and force measurement,” IEEE Robotics and Automation Letters, vol. 5, no. 2, pp. 1342–1349, Apr. 2020.

- [21] Al-Zogbi L et al., “Autonomous robotic point-of-care ultrasound imaging for monitoring of COVID-19–induced pulmonary diseases,” Frontiers in Robotics and AI, vol. 8, May 2021.

- [22] Suligoj F, Heunis CM, Sikorski J, and Misra S, “RobUSt–an autonomous robotic ultrasound system for medical imaging,” IEEE Access, vol. 9.

- [23] Gao S, Ma Z, Tsumura R, Kaminski JT, Fichera L, and Zhang HK, “Augmented immersive telemedicine through camera view manipulation controlled by head motions,” Medical Imaging 2021: Image-Guided Procedures, Robotic Interventions, and Modeling, Feb. 2021.

- [24] Tsumura R et al., “Tele-operative low-cost robotic lung ultrasound scanning platform for triage of COVID-19 patients,” IEEE Robotics and Automation Letters, vol. 6, 2021.

- [25] Tsumura R and Iwata H, “Robotic fetal ultrasonography platform with a passive scan mechanism,” Int. J. of Computer Assisted Radiology and Surgery, vol. 15, no. 8, pp. 1323–1333, Feb. 2020.

- [26] Ipsen S, Wulff D, Kuhlemann I, Schweikard A, and Ernst F, “Towards automated ultrasound imaging—robotic image acquisition in liver and prostate for long-term motion monitoring,” Physics in Medicine & Biology, vol. 66, Apr. 2021.

- [27] Yorozu Y, Chatelain P, Krupa A, and Navab N, “Confidence-driven control of an ultrasound probe,” IEEE Trans. on Robotics, vol. 33.

- [28] Ma X, Zhang Z, and Zhang HK, “Autonomous scanning target localization for robotic lung ultrasound imaging,” IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), 2021.

- [29] Konolige K, Augenbraun J, Donaldson N, Fiebig C, and Shah P, “A low-cost laser distance sensor,” IEEE Int. Conf. on Robotics and Automation, May 2008.

- [30] Lichtenstein DA, “BLUE-Protocol and FALLS-Protocol: Two applications of lung ultrasound in the critically ill,” Chest, vol. 147, 2015.

- [31] Huang Q et al., “Anatomical prior based vertebra modelling for reappearance of human spines,” Neurocomputing, vol. 500, 2022.

- [32] Jiang Z et al., “Autonomous Robotic Screening of Tubular Structures Based Only on Real-Time Ultrasound Imaging Feedback,” IEEE Trans. on Ind. Electronics, vol. 69, no. 7, pp. 7064–7075.
