Abstract

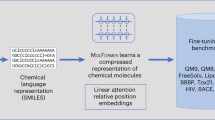

There is increasing adoption of artificial intelligence in drug discovery. However, existing studies mainly use machine learning to exploit the chemical structures of molecules and ignore the vast textual knowledge available in chemistry. Incorporating textual knowledge enables us to realize new drug design objectives, adapt to text-based instructions and predict complex biological activities. Here we present a multi-modal molecule structure–text model, MoleculeSTM, that jointly learns molecules’ chemical structures and textual descriptions via a contrastive learning strategy. To train MoleculeSTM, we construct a large multi-modal dataset, PubChemSTM, with over 280,000 chemical structure–text pairs. To demonstrate the effectiveness and utility of MoleculeSTM, we design two challenging zero-shot tasks based on text instructions: structure–text retrieval and molecule editing. MoleculeSTM has two main properties: open vocabulary and compositionality via natural language. In experiments, MoleculeSTM achieves state-of-the-art generalization to novel biochemical concepts across various benchmarks.
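The abstract names contrastive learning as the mechanism that aligns chemical structures with text. As a minimal sketch, assuming each molecule and each description has already been encoded into a vector of the same dimension (the structure and text encoders are out of scope here), the CLIP-style symmetric InfoNCE objective (see the Radford et al. and Oord et al. entries in the reference list) treats the i-th molecule and the i-th description in a batch as a positive pair and every other pairing as a negative. This is one standard contrastive objective, not necessarily the exact loss used to train MoleculeSTM; the temperature of 0.1 and all function names are illustrative assumptions. The same cosine similarities also support zero-shot structure–text retrieval, by ranking candidate descriptions for a query molecule.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def cosine_similarity_matrix(mol_emb: Sequence[Vector], txt_emb: Sequence[Vector]) -> List[List[float]]:
    """Return sim[i][j] = cos(molecule i, text j)."""
    mols = [l2_normalize(m) for m in mol_emb]
    txts = [l2_normalize(t) for t in txt_emb]
    return [[sum(a * b for a, b in zip(m, t)) for t in txts] for m in mols]


def _cross_entropy_on_diagonal(logits: List[List[float]]) -> float:
    """Mean over rows of -log softmax(row)[i]; the positive for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        peak = max(row)  # subtract the max before exp() for numerical stability
        log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_infonce(mol_emb: Sequence[Vector], txt_emb: Sequence[Vector],
                      temperature: float = 0.1) -> float:
    """CLIP-style loss: average of molecule->text and text->molecule cross-entropy.

    ASSUMPTION: temperature=0.1 is an illustrative value, not a MoleculeSTM setting.
    """
    sim = cosine_similarity_matrix(mol_emb, txt_emb)
    logits = [[s / temperature for s in row] for row in sim]
    logits_t = [list(col) for col in zip(*logits)]  # transpose: text-to-molecule view
    return 0.5 * (_cross_entropy_on_diagonal(logits) + _cross_entropy_on_diagonal(logits_t))


def retrieve_text(query_mol: Vector, candidate_texts: Sequence[Vector]) -> int:
    """Zero-shot retrieval: index of the candidate text most similar to the molecule."""
    sims = cosine_similarity_matrix([query_mol], candidate_texts)[0]
    return max(range(len(sims)), key=sims.__getitem__)


if __name__ == "__main__":
    mols = [[1.0, 0.0], [0.0, 1.0]]
    aligned = [[0.9, 0.1], [0.1, 0.9]]  # description i matches molecule i
    swapped = [[0.1, 0.9], [0.9, 0.1]]  # descriptions in the wrong order
    print(symmetric_infonce(mols, aligned) < symmetric_infonce(mols, swapped))  # True
    print(retrieve_text([1.0, 0.0], aligned))  # 0
```

Minimizing this loss pulls matched structure and text embeddings together and pushes mismatched ones apart, which is why a similarity ranking can then be used for retrieval without task-specific training.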

Data availability

All the datasets are provided on Hugging Face at https://huggingface.co/datasets/chao1224/MoleculeSTM/tree/main. Releasing PubChemSTM specifically posed a major challenge regarding the license of the textual data. As confirmed with the PubChem group, performing research on these data does not violate their license; however, PubChem does not hold the license for the textual data, so a release would require an extensive evaluation of the license for each of the over 280,000 structure–text pairs in PubChemSTM. This has hindered the release of PubChemSTM. Nevertheless, we have (1) described the detailed preprocessing steps in Supplementary Section A.1, (2) provided the molecules in PubChemSTM with their CIDs (https://huggingface.co/datasets/chao1224/MoleculeSTM/blob/main/PubChemSTM_data/raw/CID2SMILES.csv) and (3) provided the detailed preprocessing scripts (https://github.com/chao1224/MoleculeSTM/tree/main/preprocessing/PubChemSTM). With these scripts, users can reconstruct the PubChemSTM dataset.
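To illustrate what reconstruction amounts to, the sketch below pairs each molecule's SMILES with its textual descriptions by joining on PubChem CID. It is a minimal, hypothetical illustration, not the authors' preprocessing scripts: it assumes the CID file has a header row with columns named `CID` and `SMILES` (check the actual file before relying on this), and it assumes the descriptions have already been collected into a mapping from CID to a list of strings, which is the part the preprocessing scripts handle. The names `load_cid_to_smiles` and `build_pairs` are hypothetical.

```python
import csv
import io
from typing import Dict, List, Tuple


def load_cid_to_smiles(csv_text: str) -> Dict[int, str]:
    """Map PubChem CID -> SMILES.

    ASSUMPTION (hypothetical): the CSV header names the columns 'CID' and 'SMILES'.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return {int(row["CID"]): row["SMILES"] for row in reader}


def build_pairs(
    cid_to_smiles: Dict[int, str],
    cid_to_texts: Dict[int, List[str]],
) -> List[Tuple[int, str, str]]:
    """Join structures and descriptions on CID into (CID, SMILES, text) triples.

    A molecule with several descriptions yields several pairs; descriptions
    whose CID has no known structure are skipped.
    """
    pairs: List[Tuple[int, str, str]] = []
    for cid in sorted(cid_to_texts):
        smiles = cid_to_smiles.get(cid)
        if smiles is None:
            continue  # no structure for this CID, so no structure-text pair
        for text in cid_to_texts[cid]:
            pairs.append((cid, smiles, text))
    return pairs


if __name__ == "__main__":
    # Toy input using real PubChem CIDs: 702 = ethanol, 887 = methanol.
    toy_csv = "CID,SMILES\n702,CCO\n887,CO\n"
    texts = {702: ["Ethanol is a primary alcohol."], 999: ["A description with no structure."]}
    print(build_pairs(load_cid_to_smiles(toy_csv), texts))
    # [(702, 'CCO', 'Ethanol is a primary alcohol.')]
```

For the real file, the CSV text would be read from the downloaded `CID2SMILES.csv`; the actual pipeline, including how descriptions are gathered and filtered, is defined by the released preprocessing scripts.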

Code availability

The source code is available on GitHub (https://github.com/chao1224/MoleculeSTM/tree/main) and Zenodo (ref. 62). The scripts for pretraining and the three downstream tasks are provided at https://github.com/chao1224/MoleculeSTM/tree/main/scripts. The checkpoints of the pretrained models are provided on Hugging Face at https://huggingface.co/chao1224/MoleculeSTM/tree/main. To help users try MoleculeSTM, the release also includes demo notebooks (https://github.com/chao1224/MoleculeSTM). Furthermore, users can customize their own datasets by consulting the datasets folder (https://github.com/chao1224/MoleculeSTM/tree/main/MoleculeSTM/datasets).

References

Sullivan, T. A tough road: cost to develop one new drug is $2.6 billion; approval rate for drugs entering clinical development is less than 12%. Policy Medicine https://www.policymed.com/2014/12/a-tough-road-cost-to-develop-one-new-drug-is-26-billion-approval-rate-for-drugs-entering-clinical-de.html (2019).

Patronov, A., Papadopoulos, K. & Engkvist, O. in Artificial Intelligence in Drug Design (ed. Heifetz, A.) 153–176 (Springer, 2022).

Jayatunga, M. K., Xie, W., Ruder, L., Schulze, U. & Meier, C. AI in small-molecule drug discovery: a coming wave. Nat. Rev. Drug Discov. 21, 175–176 (2022).

Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 596, 583–589 (2021).

Rohrer, S. G. & Baumann, K. Maximum unbiased validation (MUV) data sets for virtual screening based on PubChem bioactivity data. J. Chem. Inf. Model. 49, 169–184 (2009).

Liu, S. et al. Practical model selection for prospective virtual screening. J. Chem. Inf. Model. 59, 282–293 (2018).

Duvenaud, D. K. et al. Convolutional networks on graphs for learning molecular fingerprints. In Advances in Neural Information Processing Systems Vol. 2 (eds Cortes, C. et al.) 2224–2232 (Curran Associates, 2015).

Liu, S., Demirel, M. F. & Liang, Y. N-gram graph: simple unsupervised representation for graphs, with applications to molecules. In Advances in Neural Information Processing Systems Vol. 32 (eds Wallach, H. et al.) 8464–8476 (Curran Associates, 2019).

Wu, Z. et al. MoleculeNet: a benchmark for molecular machine learning. Chem. Sci. 9, 513–530 (2018).

Jin, W., Barzilay, R. & Jaakkola, T. Hierarchical generation of molecular graphs using structural motifs. In International Conference on Machine Learning Vol. 119, 4839–4848 (PMLR, 2020).

Irwin, R., Dimitriadis, S., He, J. & Bjerrum, E. J. Chemformer: a pre-trained transformer for computational chemistry. Mach. Learn. Sci. Technol. 3, 015022 (2022).

Wang, Z. et al. Retrieval-based controllable molecule generation. In International Conference on Learning Representations (PMLR, 2023).

Liu, S. et al. GraphCG: unsupervised discovery of steerable factors in graphs. In NeurIPS 2022 Workshop: New Frontiers in Graph Learning (NeurIPS, 2022).

Krenn, M., Häse, F., Nigam, A., Friederich, P. & Aspuru-Guzik, A. Self-referencing embedded strings (SELFIES): a 100% robust molecular string representation. Mach. Learn. Sci. Technol. 1, 045024 (2020).

Xu, K., Hu, W., Leskovec, J. & Jegelka, S. How powerful are graph neural networks? In International Conference on Learning Representations (PMLR, 2019).

Schütt, K. T., Sauceda, H. E., Kindermans, P.-J., Tkatchenko, A. & Müller, K.-R. SchNet—a deep learning architecture for molecules and materials. J. Chem. Phys. 148, 241722 (2018).

Satorras, V. G., Hoogeboom, E. & Welling, M. E(n) equivariant graph neural networks. In International Conference on Machine Learning Vol. 139, 9323–9332 (PMLR, 2021).

Atz, K., Grisoni, F. & Schneider, G. Geometric deep learning on molecular representations. Nat. Mach. Intell. 3, 1023–1032 (2021).

Ji, Y. et al. DrugOOD: out-of-distribution dataset curator and benchmark for AI-aided drug discovery—a focus on affinity prediction problems with noise annotations. In Proc. AAAI Conference on Artificial Intelligence Vol. 37, 8023–8031 (2023).

Irwin, J. J., Sterling, T., Mysinger, M. M., Bolstad, E. S. & Coleman, R. G. ZINC: a free tool to discover chemistry for biology. J. Chem. Inf. Model. 52, 1757–1768 (2012).

Hu, W. et al. Strategies for pre-training graph neural networks. In International Conference on Learning Representations (PMLR, 2020).

Liu, S., Guo, H. & Tang, J. Molecular geometry pretraining with SE(3)-invariant denoising distance matching. In International Conference on Learning Representations (PMLR, 2022).

Larochelle, H., Erhan, D. & Bengio, Y. Zero-data learning of new tasks. In Proc. AAAI Conference on Artificial Intelligence Vol. 2, 646–651 (AAAI, 2008).

Radford, A. et al. Learning transferable visual models from natural language supervision. In International Conference on Machine Learning Vol. 139, 8748–8763 (PMLR, 2021).

Nichol, A. et al. GLIDE: towards photorealistic image generation and editing with text-guided diffusion models. In International Conference on Machine Learning Vol. 162, 16784–16804 (PMLR, 2022).

Ramesh, A., Dhariwal, P., Nichol, A., Chu, C. & Chen, M. Hierarchical text-conditional image generation with CLIP latents. Preprint at https://arxiv.org/abs/2204.06125 (2022).

Patashnik, O., Wu, Z., Shechtman, E., Cohen-Or, D. & Lischinski, D. StyleCLIP: text-driven manipulation of StyleGAN imagery. In Proc. IEEE/CVF International Conference on Computer Vision 2085–2094 (IEEE, 2021).

Li, S. et al. Pre-trained language models for interactive decision-making. In Advances in Neural Information Processing Systems Vol. 35 (eds Koyejo, S. et al.) 31199–31212 (Curran Associates, 2022).

Fan, L. et al. MineDojo: building open-ended embodied agents with internet-scale knowledge. In Advances in Neural Information Processing Systems Vol. 35 (eds Koyejo, S. et al.) 18343–18362 (Curran Associates, 2022).

Zeng, Z., Yao, Y., Liu, Z. & Sun, M. A deep-learning system bridging molecule structure and biomedical text with comprehension comparable to human professionals. Nat. Commun. 13, 862 (2022).

Liu, S. et al. Pre-training molecular graph representation with 3D geometry. In International Conference on Learning Representations (PMLR, 2022).

Beltagy, I., Lo, K. & Cohan, A. SciBERT: pretrained language model for scientific text. In Proc. 2019 Conference on Empirical Methods in Natural Language Processing (eds Inui, K. et al.) 3615–3620 (ACL, 2019).

van den Oord, A., Li, Y. & Vinyals, O. Representation learning with contrastive predictive coding. Preprint at https://arxiv.org/abs/1807.03748 (2018).

Kim, S. et al. PubChem in 2021: new data content and improved web interfaces. Nucleic Acids Res. 49, D1388–D1395 (2021).

Hughes, J. P., Rees, S., Kalindjian, S. B. & Philpott, K. L. Principles of early drug discovery. Br. J. Pharmacol. 162, 1239–1249 (2011).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems Vol. 30 (eds von Luxburg, U. et al.) 6000–6010 (Curran Associates, 2017).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Proc. 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (eds Burstein, J. et al.) 4171–4186 (ACL, 2019).

Gu, X., Lin, T.-Y., Kuo, W. & Cui, Y. Open-vocabulary object detection via vision and language knowledge distillation. In International Conference on Learning Representations (PMLR, 2022).

Wishart, D. S. et al. DrugBank 5.0: a major update to the DrugBank database for 2018. Nucleic Acids Res. 46, D1074–D1082 (2018).

Mendez, D. et al. ChEMBL: towards direct deposition of bioassay data. Nucleic Acids Res. 47, D930–D940 (2018).

Jensen, J. H. A graph-based genetic algorithm and generative model/Monte Carlo tree search for the exploration of chemical space. Chem. Sci. 10, 3567–3572 (2019).

Talley, J. J. et al. Substituted pyrazolyl benzenesulfonamides for the treatment of inflammation. US patent 5,760,068 (1998).

Dahlgren, D. & Lennernäs, H. Intestinal permeability and drug absorption: predictive experimental, computational and in vivo approaches. Pharmaceutics 11, 411 (2019).

Guroff, G. et al. Hydroxylation-induced migration: the NIH shift. Recent experiments reveal an unexpected and general result of enzymatic hydroxylation of aromatic compounds. Science 157, 1524–1530 (1967).

Bradley, A. P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 30, 1145–1159 (1997).

Sun, F.-Y., Hoffmann, J., Verma, V. & Tang, J. InfoGraph: unsupervised and semi-supervised graph-level representation learning via mutual information maximization. In International Conference on Learning Representations (PMLR, 2020).

Wang, Y., Wang, J., Cao, Z. & Farimani, A. B. Molecular contrastive learning of representations via graph neural networks. Nat. Mach. Intell. 4, 279–287 (2022).

Lo, K., Wang, L. L., Neumann, M., Kinney, R. & Weld, D. S. S2ORC: the semantic scholar open research corpus. In Proc. Association for Computational Linguistics (eds Jurafsky, D. et al.) 4969–4983 (ACL, 2020).

Sterling, T. & Irwin, J. J. ZINC 15—ligand discovery for everyone. J. Chem. Inf. Model. 55, 2324–2337 (2015).

Axelrod, S. & Gómez-Bombarelli, R. GEOM, energy-annotated molecular conformations for property prediction and molecular generation. Sci. Data 9, 185 (2022).

Aggarwal, S. Targeted cancer therapies. Nat. Rev. Drug Discov. 9, 427–428 (2010).

Guney, E. Reproducible drug repurposing: when similarity does not suffice. In Pacific Symposium on Biocomputing (eds Altman, R. B. et al.) 132–143 (World Scientific, 2017).

Ertl, P., Altmann, E. & McKenna, J. M. The most common functional groups in bioactive molecules and how their popularity has evolved over time. J. Med. Chem. 63, 8408–8418 (2020).

Böhm, H.-J., Flohr, A. & Stahl, M. Scaffold hopping. Drug Discov. Today Technol. 1, 217–224 (2004).

Hu, Y., Stumpfe, D. & Bajorath, J. Recent advances in scaffold hopping: miniperspective. J. Med. Chem. 60, 1238–1246 (2017).

Drews, J. Drug discovery: a historical perspective. Science 287, 1960–1964 (2000).

Gomez, L. Decision making in medicinal chemistry: the power of our intuition. ACS Med. Chem. Lett. 9, 956–958 (2018).

Leo, A., Hansch, C. & Elkins, D. Partition coefficients and their uses. Chem. Rev. 71, 525–616 (1971).

Bickerton, G. R., Paolini, G. V., Besnard, J., Muresan, S. & Hopkins, A. L. Quantifying the chemical beauty of drugs. Nat. Chem. 4, 90–98 (2012).

Ertl, P., Rohde, B. & Selzer, P. Fast calculation of molecular polar surface area as a sum of fragment-based contributions and its application to the prediction of drug transport properties. J. Med. Chem. 43, 3714–3717 (2000).

Butina, D. Unsupervised data base clustering based on Daylight’s fingerprint and Tanimoto similarity: a fast and automated way to cluster small and large data sets. J. Chem. Inf. Comput. Sci. 39, 747–750 (1999).

Liu, S. et al. Multi-modal molecule structure-text model for text-based editing and retrieval. Zenodo https://doi.org/10.5281/zenodo.8303265 (2023).

Acknowledgements

This work was done during S.L.’s internship at NVIDIA Research. We thank M. L. Gill, A. Stern and other members of the AIAlgo and Clara teams at NVIDIA for their insightful comments. We also thank T. Dierks, E. Bolton, P. Thiessen and others from PubChem for their kind help in confirming the PubChem license.

Author information

Contributions

S.L., W.N., C.W., Z.Q., C.X. and A.A. conceived and designed the experiments. S.L. performed the experiments. S.L. and C.W. analysed the data. S.L., C.W. and J.L. contributed analysis tools. S.L., W.N., C.W., J.L., Z.Q., L.L., J.T., C.X. and A.A. wrote the paper. J.T., C.X. and A.A. contributed equally to advising this project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Rocío Mercado and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Jacob Huth, in collaboration with the Nature Machine Intelligence team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Sections A–E, Figs. 1–4 and Tables 1–25.

Source Data Fig. 2

Source data for Fig. 2.

Source Data Fig. 4

Source data for Fig. 4.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, S., Nie, W., Wang, C. et al. Multi-modal molecule structure–text model for text-based retrieval and editing. Nat Mach Intell 5, 1447–1457 (2023). https://doi.org/10.1038/s42256-023-00759-6

DOI: https://doi.org/10.1038/s42256-023-00759-6