Abstract

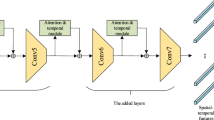

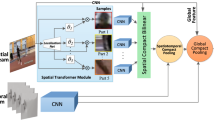

Two-stream convolutional networks have shown strong performance in action recognition. However, both spatial and temporal features in two-stream are learned separately. There has been almost no consideration for the different characteristics of the spatial and temporal streams, which are performed on the same operations. In this paper, we build upon two-stream convolutional networks and propose a novel spatial–temporal injection network (STIN) with two different auxiliary losses. To build spatial–temporal features as the video representation, the apparent difference module is designed to model the auxiliary temporal constraints on spatial features in spatial injection network. The self-attention mechanism is used to attend to the interested areas in the temporal injection stream, which reduces the optical flow noise influence of uninterested region. Then, these auxiliary losses enable efficient training of two complementary streams which can capture interactions between the spatial and temporal information from different perspectives. Experiments conducted on the two well-known datasets—UCF101 and HMDB51—demonstrate the effectiveness of the proposed STIN.

Similar content being viewed by others

References

He, K., et al.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016)

Carreira, J., Zisserman, A.: Quo vadis, action recognition? A new model and the kinetics dataset. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2017)

Simonyan, K., Zisserman, A.: Two-stream convolutional networks for action recognition in videos. Adv Neural Inf. Process. Syst. (2014)

Tran, D., et al.: Learning spatiotemporal features with 3D convolutional networks. In: Proceedings of the IEEE International Conference on Computer Vision (2015)

Wang, L., et al.: Temporal segment networks: towards good practices for deep action recognition. In: European Conference on Computer Vision (2016)

Wang, H., et al.: Action recognition by dense trajectories (2011)

Wang, H., Schmid, C.: Action recognition with improved trajectories. In: Proceedings of the IEEE International Conference on Computer Vision (2013)

Lan, Z., et al.: Beyond Gaussian pyramid: multi-skip feature stacking for action recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2015)

Wang, Y., et al.: Spatiotemporal pyramid network for video action recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2017)

Yang, K., et al.: IF-TTN: information fused temporal transformation network for video action recognition (2019)

Wang, Y., et al.: Reversing two-stream networks with decoding discrepancy penalty for robust action recognition (2018)

Zhao, Y., Xiong, Y., Lin, D.: Recognize actions by disentangling components of dynamics. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2018)

Wang, X., et al.: Non-local neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2018)

Shou, Z., et al.: Dmc-net: generating discriminative motion cues for fast compressed video action recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2019)

Ioffe, S., Szegedy, C. Batch normalization: accelerating deep network training by reducing internal covariate shift (2015)

Girdhar, R., Ramanan, D. Attentional pooling for action recognition. Adv. Neural Inf. Process. Syst. (2017)

Tran, A., Cheong, L.-F.: Two-stream flow-guided convolutional attention networks for action recognition. In: Proceedings of the IEEE International Conference on Computer Vision (2017)

Soomro, K., Zamir, A.R., Shah, M.: UCF101: a dataset of 101 human actions classes from videos in the wild (2012)

Kuehne, H., et al.: HMDB: a large video database for human motion recognition. In: 2011 International Conference on Computer Vision (2011)

Zach, C., Pock, T., Bischof, H.: A duality based approach for realtime TV-L 1 optical flow. In: Joint Pattern Recognition Symposium (2007)

Wang, L., Qiao, Y., Tang, X.: MoFAP: a multi-level representation for action recognition. Int. J. Comput. Vis. 119(3), 254–271 (2016)

Wang, L., Qiao, Y., Tang, X.: Action recognition with trajectory-pooled deep-convolutional descriptors. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2015)

Sharma, S., Kiros, R., Salakhutdinov, R. Action recognition using visual attention (2015)

Russakovsky, O., et al.: Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 115(3), 211–252 (2015)

Peng, X., et al.: Bag of visual words and fusion methods for action recognition: comprehensive study and good practice. Comput. Vis. Image Underst. 150, 109–125 (2016)

Varol, G., Laptev, I., Schmid, C.: Long-term temporal convolutions for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 40(6), 1510–1517 (2017)

Huang, S., Lin, X., Karaman, S., et al.: Flow-distilled IP two-stream networks for compressed video action recognition. In: Computer Vision and Pattern Recognition (2019)

Cao, D., Xu, L., Chen, H., et al.: Action recognition in untrimmed videos with composite self-attention two-stream framework. In: Computer Vision and Pattern Recognition (2019)

Du, Y., Yuan, C., Li, B., et al.: Interaction-aware spatio-temporal pyramid attention networks for action classification. In: European Conference on Computer Vision, pp. 388–404 (2018)

Deep draw. https://github.com/auduno/deepdraw (2016)

Acknowledgements

This work is supported by the grants from the Natural Science Foundation of Shandong Province (No. ZR20-20MF136), Key Research and Development Plan of Shandong Province (No. 2019GGX101015), and the Fundamental Research Funds for the Central Universities (No. 20CX05018A).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cao, H., Wu, C., Lu, J. et al. Spatial–temporal injection network: exploiting auxiliary losses for action recognition with apparent difference and self-attention. SIViP 17, 1173–1180 (2023). https://doi.org/10.1007/s11760-022-02324-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-022-02324-x