Abstract

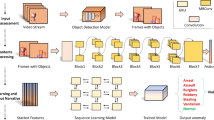

Intelligent video surveillance systems have recently been used for automatic monitoring of human interactions. Although they play a significant role in reducing security concerns, distinguishing normal from abnormal behavior remains challenging because of factors such as crowded environments and camera viewpoint. In this paper, we propose a novel deep violence detection framework based on specific features derived from handcrafted methods. These features relate to appearance, speed of movement, and a representative image, and are fed to a convolutional neural network (CNN) as spatial, temporal, and spatiotemporal streams. The spatial stream trains the network on each frame of the video to learn environment patterns. The temporal stream takes three consecutive frames and learns the motion patterns of violent behavior using a modified differential magnitude of optical flow. In the spatiotemporal stream, we introduce a discriminative feature, a novel differential motion energy image, that represents violent actions in a more interpretable way. Fusing the results of these streams lets the approach cover different aspects of violent behavior. The proposed CNN is trained on violence-labeled and normal-labeled frames from three datasets (Hockey, Movie, and ViF), which together comprise both crowded and uncrowded situations. Experimental results show that the proposed approach outperforms state-of-the-art methods in terms of accuracy and processing time.
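As a rough illustration of two ideas named in the abstract, the sketch below computes a motion energy image and fuses per-stream scores. The abstract does not give the formula for the differential motion energy image or the fusion rule, so this is only a stand-in: it uses the classic motion energy image from the temporal-template literature (thresholded frame differences accumulated over a window) and a weighted average of stream probabilities. The function names, the threshold value, and the equal default weights are all assumptions made for illustration, and plain Python lists are used only to keep the example dependency-free.

```python
from typing import List, Optional, Sequence

Frame = List[List[float]]  # grayscale image stored as rows of pixel intensities


def motion_energy_image(frames: Sequence[Frame], threshold: float = 10.0) -> List[List[int]]:
    """Classic motion energy image (not the paper's differential variant).

    A pixel is set to 1 if its intensity changed by more than `threshold`
    between any pair of consecutive frames in the window.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    height, width = len(frames[0]), len(frames[0][0])
    mei = [[0] * width for _ in range(height)]
    for prev, curr in zip(frames, frames[1:]):
        for y in range(height):
            for x in range(width):
                if abs(curr[y][x] - prev[y][x]) > threshold:
                    mei[y][x] = 1
    return mei


def fuse_stream_scores(scores: Sequence[float],
                       weights: Optional[Sequence[float]] = None) -> float:
    """Late fusion by weighted average (assumed rule, equal weights by default)."""
    if weights is None:
        weights = [1.0] * len(scores)
    if len(weights) != len(scores) or sum(weights) <= 0:
        raise ValueError("weights must match scores and have a positive sum")
    return sum(w * s for w, s in zip(weights, scores)) / sum(weights)


# Three tiny 2x2 frames: one pixel changes in each transition.
frames = [
    [[0, 0], [0, 0]],
    [[0, 50], [0, 0]],
    [[0, 50], [60, 0]],
]
mei = motion_energy_image(frames)

# Hypothetical violence probabilities from the spatial, temporal,
# and spatiotemporal streams for one clip.
fused = fuse_stream_scores([0.9, 0.6, 0.3])
```

In this toy run `mei` marks the two pixels that moved, and `fused` is the mean of the three stream scores. A learned fusion layer or different weights could replace the average without changing the rest of the sketch.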

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Cite this article

Mohtavipour, S.M., Saeidi, M. & Arabsorkhi, A. A multi-stream CNN for deep violence detection in video sequences using handcrafted features. Vis Comput 38, 2057–2072 (2022). https://doi.org/10.1007/s00371-021-02266-4

DOI: https://doi.org/10.1007/s00371-021-02266-4