Abstract

The growth rate of a population has been an important aspect in ecological and biologic applications. Since a non-linear stochastic behavior is linked to this type of systems, the inference of the model parameters is a challenging task. Approximate Bayesian Computation (ABC) can be used for leading the intractability of the likelihood function caused by the model characteristics. Recently, some methods based on Hilbert Space Embedding (HSE) have been proposed in the context of ABC; nevertheless, the relevance of the observations and simulations are not contemplated. Here, we develop a Sparse HSE-based distance, termed SHSED, to compare distributions associated with two random variables through sparse estimations of the densities in a Reproducing Kernel Hilbert Space (RKHS). Namely, SHSED highlights relevant information using a sparse weighted representation of data within an ABC-based inference. Our method improves the inference accuracy of a Ricker map-based population model in comparison with other state-of-the-art ABC-based approaches.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In population dynamics, the growth rate of a particular population is a widely known field of interest, e.g., ecology and biology applications. Several discrete-time methods have been proposed to model the population growth for single-species with different characteristics. However, the Ricker map is one of the most important ones, since it stands for a general approach to discretize almost any continuous model of population dynamics while taking into account the stochastic behavior proper to nature. Besides, it is capable not only of stability but also of limits cycles and chaos [12]. Given an observed data from a population, the goal is to find the parameters of the growth population model, i.e., Ricker model. Since this model is stochastic, traditional techniques, such as least squares, are not appropriate for leading the uncertainty, demanding for a Bayesian approach. It is well-known that in all Bayesian-based statistical inference tasks, the likelihood function performs an important role as long as it states the probability of the observed data under a particular given statistical model. Then, using the Bayes theorem, we can update a priori knowledge about the set of needed parameters into a posterior distribution. For straightforward models, it is possible to derive an analytic expression for the likelihood function, and the evidence can be computed with relative ease; nonetheless, for complex models, to find an exact formula for the likelihood function is often intractable [9].

To deal with this intractability, free-likelihood techniques like Approximate Bayesian Computation (ABC) have emerged. The idea behind ABC is to assess an auxiliary model with different parameter values drawn from a prior distribution to obtain simulations that are compared to the observed data [10]. The most basic ABC technique uses a rejection of samples using a comparison between summary statistics of the observed and simulated data. In particular, ABC methods based on the rejection of samples present a high dependence on the choice of sufficient summary statistics [7]. A poor selection of summary statistics can lead to an additional bias that can be difficult to quantify, thus, unless the selected summary statistics are sufficient, inference results in a partial posterior rather than the desired full one [5].

To avoid the selection of summary statistics, ABC methods based on kernel embeddings have been proposed, where probability density functions are set over the observed and simulated data to be compared in a RKHS. Authors in [5] proposed to use empirical approximations for the probability measures embedded in the RKHS, standing for a comparison based uniquely on the characteristic kernel, leading to an approach that considers one free parameter. On the other hand, authors in [13] proposed to use Parzen-window density estimation to approximate the probability measures, producing a robust estimator regarding the number of samples required in ABC. However, the relevance of the samples is not considered, which is crucial for highly dynamic models, i.e., the Ricker model.

In this work, we introduce a Sparse Hilbert Space Embedding-based distance, named SHSED, to compare distributions based on sparse estimations of the densities in RKHSs. In this sense, SHSED highlights relevant information employing a Sparse Kernel Density Estimation of probability measures associated with the observed and simulated data in the context of ABC. Obtained results show that our proposed technique improves the accuracy in terms of the relative error when inferring highly dynamic models as the Ricker map, in comparison to state-of-the-art ABC-based methods.

The remainder of this paper is organized as follows: Sect. 2 describes the mathematical background of SHSED. Section 3 describes the experimental set-up and the obtained result. Finally, the conclusions are outlined in Sect. 4.

2 Sparse Hilbert Space Embedding-Based Distance for Approximate Bayesian Computation

Let us consider a situation where a generative model can be simulated through a conditional probability sampling from \(p(x|\theta ),\) where \(x{{\mathrm{\,\in \,}}}\mathcal {X}\) is a random variable with domain \(\mathcal {X}\) and \(\theta {{\mathrm{\,\in \,}}}\Theta \) holds the parameters. Besides, the computation of the likelihood \(p(y|\theta )\) for the observed data \(y{{\mathrm{\,\in \,}}}\mathcal {X}\) is intractable. Since the posterior \(p(\theta |y)\propto p(y|\theta )\zeta (\theta )\) cannot be calculated, being \(\zeta (\theta )\) a prior over \(\theta ,\) neither exact nor sampled posterior are feasible. Therefore, Approximate Bayesian Computation (ABC)-based techniques aim to solve such an inference issue by computing the likelihood from simulation [5]. In particular, a straightforward ABC-based approach comprises the rejection of simulated data x according to the distance function \(\mathrm{{d}}_{\mathcal {X}}:\mathcal {X}\,\times \,\mathcal {X}\rightarrow \mathbb {R}^+.\) So, an approximate posterior can be estimated as follows: \(\hat{p}(\theta |y;\epsilon )\propto \hat{p}(y|\theta ;\epsilon )\zeta (\theta ),\) where \(\hat{p}(y|\theta ;\epsilon ){{\mathrm{\,=\,}}}\int _{\Omega \left( y;\epsilon \right) }{p(x|\theta )}dx,\) \(\Omega \left( y;\epsilon \right) {{\mathrm{\,=\,}}}\{x:\mathrm{{d}}_{\mathcal {X}}(x,y)<\epsilon \},\) and \(\epsilon {{\mathrm{\,\in \,}}}\mathbb {R}^+.\) However, applying a distance directly on \(\mathcal {X}\) is often difficult because of the large amount of features and/or observations. Moreover, fixing the \(\epsilon \) value is crucial to achieve an accurate ABC estimation. Alternative strategies includes a feature mapping \(s{{\mathrm{\,=\,}}}\vartheta (x)\) before calculating the distance, where \(s{{\mathrm{\,\in \,}}}\mathcal {S}\) is a summary statistics space and \(\vartheta :\mathcal {X}\rightarrow \mathcal {S}\) [4]; nonetheless, such summary statistics often leak information for complex models. Then, to code complex relations among samples, the approximate likelihood \(\hat{p}(y|\theta ;\epsilon )\) can be computed as the convolution of the true likelihood \(p(y|\theta )\) and the kernel function \(\kappa :\mathcal {X}\times \mathcal {X}\rightarrow \mathbb {R},\) which imposes a constraint to the rejection of samples as the inner product \(\kappa (x,y){{\mathrm{\,=\,}}}\,\langle \phi (x),\phi (y)\rangle _{\mathcal {H}}\) in the RKHS, \(\mathcal {H},\) where \(\phi :\mathcal {X}\rightarrow \mathcal {H}\). Although various kernel functions can be tested, the Gaussian function is preferred since it aims at finding \(\mathcal {H}\) with universal approximating ability, not to mention its mathematical tractability. Thus, the Gaussian kernel in the ABC context yields: \(\kappa _G\left( {\mathrm{{d}}_{\mathcal {X}}(x,y)};\sigma \right) {{\mathrm{\,=\,}}}\exp \left( -{\mathrm{{d}}^2_{\mathcal {X}}(x,y)}/{2\sigma ^2}\right) \), where \(\sigma {{\mathrm{\,\in \,}}}\mathbb {R}^+\) stands for the kernel bandwidth.

Here, we introduce a Hilbert Space Embedding-based distance (HSED) for enhancing the Gaussian-based similarity computation in nonlinear stochastic systems exhibiting dynamic observations. Thus, let us assume that both the observed and a simulated data can be modeled as a pair of random variables \(Y\backsim P_Y\) and \(X\backsim P_X,\) respectively, given the marginal probability distributions \(P_Y\) and \(P_X.\) Besides, let \(\{x^{(i)}\}^{Q_x}_{i{{\mathrm{\,=\,}}}1}\backsim P_X\) and \(\{y^{(i)}\}^{Q_y}_{i{{\mathrm{\,=\,}}}1}\backsim P_Y\) be a pair of independent and identically distributed sample sets of size \(Q_x\) and \(Q_y\). Our HSED is defined as follows [8]: \(d^2_{\mathcal {H}}\left( P_X,P_Y\right) {{\mathrm{\,=\,}}}\left\| \hat{\mu }[P_X]-\hat{\mu }[P_Y]\right\| ^2_{\mathcal {H}}\), where \(\hat{\mu }[P_X],\hat{\mu }[P_Y]{{\mathrm{\,\in \,}}}\mathcal {H}\) are the embedding approximations of \({\mu }[P_X]{{\mathrm{\,=\,}}}\pmb {E}_{X}\left\{ \phi (X)\right\} \) and \({\mu }[P_Y]{{\mathrm{\,=\,}}}\pmb {E}_{Y}\left\{ \phi (Y)\right\} \), through weighted averaging as: \(\hat{\mu }[P_X]{{\mathrm{\,=\,}}}\sum \nolimits ^{Q_x}_{i{{\mathrm{\,=\,}}}1}\hat{f}(x^{(i)})\kappa _G(\mathrm{{d}}_{e}(x^{(i)},\cdot );\sigma _K)\), with \(\hat{f}(x^{(i)})= {\varvec{\alpha }}^\top {\varvec{a}}_i^{(\sigma _X)}\) and \(\hat{\mu }[P_Y] {{\mathrm{\,=\,}}}\sum \nolimits ^{Q_y}_{i{{\mathrm{\,=\,}}}1}{\hat{g}(y^{(i)})\kappa _G({\mathrm{{d}}_{e}}(y^{(i)},\cdot );\sigma _K)}\), with \(\hat{g}(y^{(i)}){{\mathrm{\,=\,}}}{\varvec{\beta }}^\top {\varvec{b}}_i^{(\sigma _Y)}\); \(\mathrm{{d}_e}\) stands for the Euclidean distance, \({\varvec{\alpha }}{{\mathrm{\,\in \,}}}\,[0,1]^{Q_x},\) \({\varvec{\beta }}{{\mathrm{\,\in \,}}}\,[0,1]^{Q_y},\) \(||{\varvec{\alpha }}||_1\,{{\mathrm{\,=\,}}}1,\) \(||{\varvec{\beta }}||_1\,{{\mathrm{\,=\,}}}1\) and \(\hat{f}\) and \(\hat{g}\) are the approximated densities associated with \(P_X\) and \(P_Y\), respectively. Moreover, \({\varvec{a}}_i^{(\sigma _X)}\) and \({\varvec{b}}_i^{(\sigma _Y)}\) are the i-th column vectors of the matrices \({\varvec{A}}^{(\sigma _X)}\in \mathbb {R}^{Q_x\times Q_x},\) \({\varvec{B}}^{(\sigma _Y)}{{\mathrm{\,\in \,}}}\mathbb {R}^{Q_y\times Q_y}\) holding elements \(a^{\sigma _X}_{ij}= \kappa _G({\mathrm{{d}}_{e}}(x^{(i)},x^{(j)});\sigma _X),\) and \({b}^{(\sigma _Y)}_{ij}=\kappa _G({\mathrm{{d}}_{e}}(y^{(i)},y^{(j)});\sigma _Y),\) respectively, and \(\sigma _K,\sigma _X,\sigma _Y{{\mathrm{\,\in \,}}}\mathbb {R}^+\). Bearing in mind the convolution properties for Gaussian functions [6], the HSED is rewritten as:

where \(\sigma _{KX}{{\mathrm{\,=\,}}}\sqrt{\sigma ^2_K+2\sigma _X^2},\) \(\sigma _{KY}{{\mathrm{\,=\,}}}\sqrt{\sigma ^2_K+2\sigma _Y^2},\) \(\sigma _{L}{{\mathrm{\,=\,}}}\sqrt{\sigma ^2_K+\sigma _X^2+\sigma _Y^2},\) and the matrix \({\varvec{L}}^{(\sigma _L)}{{\mathrm{\,\in \,}}}\,\mathbb {R}^{Q_x \times Q_y}\) holds elements \(l^{(\sigma _L)}_{i,j}{{\mathrm{\,=\,}}}\kappa _G({\mathrm{{d}}_{e}}(x^{(i)},y^{(j)});\sigma _L).\) The HSED in Eq. (1) highlights relevant samples concerning the weight values \({\varvec{\alpha }},{\varvec{\beta }}\) to reject or accept a given sample in ABC. In turn, the probability density functions are estimated using informative samples through sparseness in the weighting coefficients. In this regard, a constrained quadratic optimization problem is formulated based on a Integrated Mean Squared Error, \(\varepsilon ({\varvec{\alpha }}) {{\mathrm{\,=\,}}}\int \nolimits _X{(f(x^{(i)})- \hat{f}(x^{(i)}))^2 dx}\), as follows [3]:

The optimization problem in Eq. (2) can be solved using a Sequential Minimal Optimization (SMO) algorithm. Likewise, the \({\varvec{\beta }}\) weights values are fixed through the minimization of \(\varepsilon ({\varvec{\beta }})\). Therefore, our Sparse HSED (SHSED) focuses relevant observations in \(\hat{d}_{\mathcal {H}}^2(P_X,P_Y)\) based on the sparse weights \({\varvec{\alpha }},{\varvec{\beta }}\). In practice, given N samples \(\{x_n^{(i)}\backsim P_{X_n}\}^N_{n{{\mathrm{\,=\,}}}1}\) from \(p(x|\theta _n),\) with \(\theta _n\backsim \zeta (\theta ),\) and the observation \(y\backsim P_Y,\) a weighted sample set \(\Psi {{\mathrm{\,=\,}}}\left\{ (\theta _n,w_n)\right\} ^N_{n{{\mathrm{\,=\,}}}1}\) is calculated by fixing:

which can be employed to approximate \(p(\theta |y)\) using posterior expectation as follows: \(\hat{p}(\theta |y){{\mathrm{\,=\,}}}\sum \nolimits ^N_{n{{\mathrm{\,=\,}}}1}{w_n\kappa _G(\mathrm{{d}}_e(\theta ,\theta _n);\sigma _\theta )},\) with \(\sigma _\theta {{\mathrm{\,\in \,}}}\mathbb {R}^+\). Algorithm 1 summarizes an ABC approach based on the introduced SHSED.

3 Experiments and results

To evaluate the SHSED performance, we consider two statistical inference task: a toy problem that comprises a Poisson mixture model and a nonlinear ecological dynamic system termed the Ricker model [12]. As benchmark, the straightforward ABC Rejection and two state-of-the-art ABC methods based on HSE are studied. The former, Maximum Mean Discrepancy (MMD)-based ABC [5], uses empirical density approximations: \(\hat{f}(x^{(i)}){{\mathrm{\,=\,}}}1/Q_x\sum _{j{{\mathrm{\,=\,}}}1}^{Q_x}\delta (x^{(i)}-x^{(j)}),\) \(\hat{g}(y^{(i)})=1/Q_y \sum _{j{{\mathrm{\,=\,}}}1}^{Q_y}\delta (y^{(i)}-y^{(j)})\), being \(\delta (\cdot )\) the Dirac delta function. The latter, Parzen-based ABC [13], uses traditional Parzen window estimators as follows: \(\hat{f}(x^{(i)})=1/Q_x\sum _{j{{\mathrm{\,=\,}}}1}^{Q_x}\kappa _G(\mathrm{{d}}_e(x^{(i)},x^{(j)});\sigma _X),\) \(\hat{g}(y^{(i)}){{\mathrm{\,=\,}}}1/Q_y\sum _{j{{\mathrm{\,=\,}}}1}^{Q_y}\kappa _G(\mathrm{{d}}_e(y^{(i)},y^{(j)});\sigma _Y).\) Besides, as quantitative assessment, the following relative error is used: \(\mathcal {E}_{\theta ^{(z)}} = 100\times {||\theta ^{(z)} -\sum \nolimits ^N_{n{{\mathrm{\,=\,}}}1}{w_n\hat{\theta }_n^{(z)}}||}/{||{\theta }^{(z)}||}\), where \(\theta ^{(z)}\) is the value of the z-th target parameter and \(\hat{\theta }_n^{(z)}\) is the n-th ABC-based approximation with weight \(w_n.\)

Poisson mixture model results. We consider a finite Poisson mixture model of the form: \(p(x|\varvec{\lambda }, {\varvec{\pi }}){{\mathrm{\,=\,}}}\sum _{c{{\mathrm{\,=\,}}}1}^{C} \pi _c{\exp (-\lambda _c)\lambda _c^x}/{x!},\) where \({\varvec{\pi }}{{\mathrm{\,=\,}}}\,\{\pi _c\}_{c{{\mathrm{\,=\,}}}1}^C\) are the mixing coefficients holding the condition \(\sum _{c{{\mathrm{\,=\,}}}1}^{C}\pi _c{{\mathrm{\,=\,}}}1\), \({\varvec{\lambda }}{{\mathrm{\,=\,}}}\,\{\lambda _c\}_{c{{\mathrm{\,=\,}}}1}^C\) are the number of average events for each Poisson density (\(\lambda _1<\lambda _2<\cdots <\lambda _C\)), and C is the number of components [11]. For concrete testing, we aim to estimate the posterior \(p({\varvec{\pi }}|{\varvec{\lambda }}, x)\), with \(C{{\mathrm{\,=\,}}}2\) and \({\varvec{\lambda }}{{\mathrm{\,\in \,}}}\,\{1,8\}\). Moreover, a Dirichlet distribution is imposed over \({\varvec{\pi }}\) as prior [11], that is, \({\varvec{\pi }}\backsim \mathrm{{Dirichlet}}(1,1)\). Initially, we generated ten samples for \({\varvec{\pi }}\) from the prior, then, 50 observations are computed for each of them using the model. Figure 1 shows the obtained inference results by fixing \(\sigma _X{{\mathrm{\,=\,}}}\sigma _Y{{\mathrm{\,=\,}}}0.33\) and \(\sigma _K{{\mathrm{\,=\,}}}2.154\). As seen, the proposed SHSED extracts relevant information from simulations when suitable values for \(\sigma _X\) and \(\sigma _Y\) are selected. In fact, Fig. 1(b) shows how sample \(\pi _6\) is the farthest from the true values of \({\varvec{\pi }}\) but its associated simulation \(x_6\) in Fig. 1(a) is the closest from the observed data; nevertheless, Fig. 1(c) shows that our sparse approach assigns a high value for the distance, leading to a low weight. On the other hand, the proposed SHSED approach assigned high weights to the samples \(\pi _3\), \(\pi _4,\) and \(\pi _5,\) which exihibit close distance values to the true parameters in \({\varvec{\pi }}\) but distant dependencies with simulations \(x_3\), \(x_4,\) and \(x_5\) concerning the observed data.

Now, to test the robustness of the ABC-based estimations regarding the number of simulations, we build an observed dataset holding 50 samples drawn from the Poisson mixture model, with \({\varvec{\pi }}{{\mathrm{\,\in \,}}}\,\{0.3,0.7\}\). Afterward, to generate the simulated data, we calculated candidates from the prior and drew 50 samples from the model to compute the posterior. This procedure is repeated 100 times. The number of simulations (N) is increased from 10 to 300 using a step size of 10. For the ABC rejection, we use the Euclidean distance to compare the observed and simulated data with \(\epsilon {{\mathrm{\,=\,}}}10\); this threshold was defined empirically. For the MMD, the Parzen, and the SHSED-based ABC methods, we use a soft threshold value of \(\epsilon {{\mathrm{\,=\,}}}0.158\). Figures 1(d) and (e) show the error bar curves of the posterior distribution for the mixing coefficients. As seen, the SHSED improves the posterior estimation leading to the lowest errors and deviations for \(\sigma _X{{\mathrm{\,=\,}}}\sigma _Y{{\mathrm{\,=\,}}}0.406\). Our sparse-based weighting favors the identification of relevant structures in an RKHS before computing the dependencies between observed and simulated data within an ABC framework. Additionally, note that for low kernel bandwidth values in Eq. (2), the SHSED approach converges to the MMD and Parzen-based ones, since the weights \({\varvec{\alpha }}\) and \({\varvec{\beta }}\) get closer to \(1/Q_x\) and \(1/Q_y\), respectively.

Poisson mixture model results. (a) Euclidean distance between the observed and simulated data. (b) Euclidean distance between candidates sampled from the prior and the true values of the parameters. (c) Different distances based on HSE between distributions associated with the observed and simulated data. (d) and (e) Relative error bar curves for the posterior of the mixing coefficients: \(\pi _1\) and \(\pi _2\) respectively.

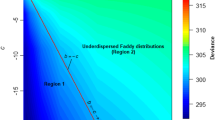

Ricker model results. This nonlinear ecological dynamic system can be modeled as follows [1]: \(\ln (M^{(t)}){{\mathrm{\,=\,}}}\ln (r)+\ln (M^{(t-1)})-M^{(t-1)}+e^{(t)}\), where \(M^{(t)}{{\mathrm{\,\in \,}}}\mathbb {R}\) is the size of some animal population at time t, \(e^{(t)}\backsim \mathcal {N}(0,\sigma _e^2)\), being \(\sigma _e{{\mathrm{\,\in \,}}}\mathbb {R}^+\) the standard deviation of the innovations, and \(\ln (r)\) is related to the growth rate parameter of the model (\(r{{\mathrm{\,\in \,}}}\mathbb {R}^+\)). Additionally, the observation y is a time series drawn from a Poisson distribution: \(y\backsim \mathrm{{Poisson}}(\phi M^{(t)})\), with \(y{{\mathrm{\,\in \,}}}\mathbb {N}\), where \(\phi \) is a scale parameter [2]. Thus, the Ricker model is completely parametrized by \({\varvec{\theta }}{{\mathrm{\,=\,}}}\,[\ln (r),\phi ,\sigma _e]\). In this experiment, we draw 50 samples from the model with \({\varvec{\theta }}{{\mathrm{\,=\,}}}\,[3.8,10,0.3]\) by fixing the following priors [1]: \(\ln (r)\backsim \mathcal {N}(4,0.5)\); \(\phi \backsim \mathcal {X}^2(10)\); \(\sigma _e\backsim \mathrm{{invgamma}}(3,1.3)\). The kernel bandwidth for the ABC rejection approach is fixed empirically as \(\epsilon {{\mathrm{\,=\,}}}75\), meanwhile for the MMD, the Parzen and, the SHSED, we set \(\epsilon {{\mathrm{\,=\,}}}0.158\). Besides, we use \(\sigma _X{{\mathrm{\,=\,}}}\sigma _Y{{\mathrm{\,=\,}}}0.0158\) to compute the sparse weights and \(\sigma _K{{\mathrm{\,=\,}}}2.5\). According to the results in Figs. 2(a) to (c), the MMD-based posteriors are almost identical to the ones obtained by the Parzen-based approach. Furthermore, notice how the posterior distribution for \(\ln (r)\) and \(\sigma _e\) parameters obtained by our approach has their maximum closer to the true value in comparison to the benchmarks, and the rejection-based ABC has the worst performance. In the case of \(\phi \), it can be seen that the results obtained by the SHSED are close enough to the other ABC techniques based on HSED.

Lastly, to test the stability of the inference, we repeated 100 times the procedure to approximate the posterior of the model parameters concerning the relative error assessment. Obtained results in Figs. 2(d) to (f) reveals how the proposed SHSED reaches the lowest uncertainty for the \(\ln (r)\) and \(\phi \) inferences. In the case of \(\sigma _e\), since the true value is low (\(\sigma _e=0.3\)), a small change in the form of the posterior produces a substantial change in the expected value; hence, relevant changes in the index error are gathered. Remarkably, the lowest uncertainty for \(\sigma _e\) is related to the ABC rejection method due to the smoother form of the posterior in comparison to other HSED methods; nonetheless, SHSED achieves the best overall performance (see Fig. 2(g)).

Ricker model results. (a)–(c) Different ABC-based estimated posteriors for \(\{\ln r,\; \phi , \; \sigma _e\}\) with \(\sigma _\theta =\{0.253, \;2.190, \;0.082\}\), respectively. (d)–(f) Relative error boxplots for the posterior of the Ricker model parameters: \(\ln r,\; \phi ,\) and \(\sigma _e,\) respectively. (g) Overall performance of the different ABC methods in terms of the mean relative error.

4 Conclusions

We introduce a novel Sparse Hilbert Space Embedding-based distance, termed SHSED, to compute data dependencies within Approximate Bayesian Computation (ABC) inference frameworks. Remarkably, our SHSED compares distributions associated with two random variables through sparse estimations of the densities in RKHSs. To test the introduced approach, we consider two statistical inference tasks: a Poisson mixture model and a nonlinear ecological dynamic system, termed the Ricker model. Attained results demonstrated how our proposal outperforms state-of-the-art ABC-based inference approaches, including the well-known ABC rejection and ABC alternatives based on HSE. Indeed, SHSED highlights relevant information from dynamic observations and simulations before their quantitive comparison in an RKHS. As future work, authors plan to develop an automatic selection of the RKHS regarding the input data dynamics. Moreover, applying ABC approaches using HSE for multivariate data is a research line of interest.

References

Golchi, S., Campbell, D.A.: Sequentially constrained Monte Carlo. Comput. Stat. Data Anal. 97, 98–113 (2016)

Gutmann, M.U., Corander, J.: Bayesian optimization for likelihood-free inference of simulator-based statistical models. J. Mach. Learn. Res. 17(125), 1–47 (2016). http://jmlr.org/papers/v17/15-017.html

Hong, X., Chen, S.: A fast algorithm for sparse probability density function construction. In: 2013 18th International Conference on DSP, pp. 1–6. IEEE (2013)

Joyce, P., Marjoram, P., et al.: Approximately sufficient statistics and Bayesian computation. Stat. Appl. Genet. Mol. Biol. 7(1), 26 (2008)

Park, M., Jitkrittum, W., Sejdinovic, D.: K2-ABC: approximate Bayesian computation with kernel embeddings. arXiv preprint arXiv:1502.02558 (2015)

Principe, J.C.: Information Theoretic Learning: Renyi’s Entropy and Kernel Perspectives. Springer Science & Business Media, Heidelberg (2010). https://doi.org/10.1007/978-1-4419-1570-2

Robert, C.P., Cornuet, J.M., Marin, J.M., Pillai, N.S.: Lack of confidence in approximate bayesian computation model choice. Proc. Nat. Acad. Sci. 108(37), 15112–15117 (2011)

Smola, A., Gretton, A., Song, L., Schölkopf, B.: A hilbert space embedding for distributions. In: Hutter, M., Servedio, R.A., Takimoto, E. (eds.) ALT 2007. LNCS (LNAI), vol. 4754, pp. 13–31. Springer, Heidelberg (2007). https://doi.org/10.1007/978-3-540-75225-7_5

Toni, T., Welch, D., Strelkowa, N., Ipsen, A., Stumpf, M.P.: Approximate Bayesian computation scheme for parameter inference and model selection in dynamical systems. J. R. Soc. Interface 6(31), 187–202 (2009)

Turner, B.M., Van Zandt, T.: A tutorial on approximate Bayesian computation. J. Math. Psychol. 56(2), 69–85 (2012)

Viallefont, V., Richardson, S., Green, P.J.: Bayesian analysis of poisson mixtures. J. Nonparametric Stat. 14(1–2), 181–202 (2002)

Wood, S.N.: Statistical inference for noisy nonlinear ecological dynamic systems. Nature 466(7310), 1102–1104 (2010)

Zuluaga, C.D., Valencia, E.A., Álvarez, M.A., Orozco, Á.A.: A parzen-based distance between probability measures as an alternative of summary statistics in approximate Bayesian computation. In: Murino, V., Puppo, E. (eds.) ICIAP 2015. LNCS, vol. 9279, pp. 50–61. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-23231-7_5

Acknowledgments

Research under grants provided by the project “Desarrollo de una plataforma para el cálculo de confiabilidad en la operación interdependiente de los sistemas de gas natural y sector eléctrico de Colombia que permita evaluar alternativas de inversión y regulación para optimizar los costos de operación” with code: 1110-745-58696, funded by Colciencias. Moreover, author W. González-Vanegas was supported by Colciencias under the 706 agreement, “Jóvenes Investigadores e Innovadores-2015”.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

González-Vanegas, W., Alvarez-Meza, A., Orozco-Gutierrez, Á. (2018). Sparse Hilbert Embedding-Based Statistical Inference of Stochastic Ecological Systems. In: Mendoza, M., Velastín, S. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2017. Lecture Notes in Computer Science(), vol 10657. Springer, Cham. https://doi.org/10.1007/978-3-319-75193-1_31

Download citation

DOI: https://doi.org/10.1007/978-3-319-75193-1_31

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-75192-4

Online ISBN: 978-3-319-75193-1

eBook Packages: Computer ScienceComputer Science (R0)