Abstract

The sclera is the part of the eye surrounding the iris; it is white and contains blood vessels that can be used for biometric recognition. In this paper, we propose a new method for sclera segmentation in face images. The method is divided into two steps: (1) eye location and (2) sclera segmentation. Eyes are located using the Color Distance Map (CDM), the Histogram of Oriented Gradients (HOG) descriptor, and Random Forest (RF). The sclera is segmented by the Image Foresting Transform (IFT). The first step has an accuracy of 95.95%.

1 Introduction

The location of the eye and the extraction of its features, such as the iris, corners, and sclera, are an important area of computer vision and machine learning. They can be used in applications such as facial recognition, safety control, and driver behavior analysis [1].

Several works have been produced in the field of eye location. Many of them are based on the physical properties of eyes, as in [2, 3]. The work in [4] uses template matching for eye detection by finding the correlation of a template eye T with various overlapping regions of the face image.

The segmentation of the sclera has been studied mainly in the field of biometric systems. The approach implemented in [5] uses Fuzzy C-means clustering, a clustering method that divides one cluster of data into two or more related clusters. Abhijit [6] uses CDM to segment the skin around the sclera, and the saturation level in the HSV color space to apply a threshold based on pixel intensity.

This paper proposes a new process for human eye detection in face images and sclera segmentation using the Histogram of Oriented Gradients (HOG) descriptor, Random Forest, and the Image Foresting Transform (IFT).

The paper is organized as follows: Sect. 2 presents the proposed methodology, Sect. 3 presents the results, and Sect. 4 presents the conclusions.

2 Methodology

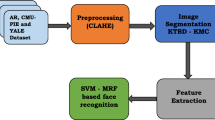

The approach proposed in this paper is shown in Fig. 1. The method starts with preprocessing to reduce lighting problems present in the images. The eye candidates are located based on the Color Distance Map (CDM) [7], and their features are extracted by the Histogram of Oriented Gradients (HOG) [9] and selected by Best First (BF) [11]. The classification is performed by Random Forest. The eyes found then have their sclera segmented with the Image Foresting Transform (IFT) [13]. The classifier was selected by the Auto-Weka tool [16] based on the performance obtained.

2.1 Eye Location

In this section, we present the approach used to locate eyes in images of faces. The method starts with preprocessing to reduce lighting problems; next, the skin is segmented, followed by the detection of eye candidates and, finally, their classification.

Preprocessing. To reduce or eliminate lighting problems in the images, Color Badger [8] is applied. Color Badger is a novel tone mapping operator based on the Light Random Sprays Retinex algorithm. It converts high dynamic range images into low dynamic range images.

Skin Segmentation and Detection of Eye Candidates. The second step in eye localization is skin segmentation. In this stage, a mask (Fig. 2a) is created by classifying each pixel as skin or non-skin using the Color Distance Map (CDM) [7]. The mask is composed of two maps, one for natural lighting conditions and one for flash lighting conditions. The maps are defined by Eqs. 1 and 2.

In Eqs. 1 and 2, R, G, and B are the red, green, and blue component values of an RGB image. Next, noise removal (Fig. 2b), hole filling with successive closing and opening operations, and extraction of the largest skin-color region (Fig. 2d) are applied. The following step applies arithmetic operations to extract the eye candidates. Using Fig. 2c and the mask (Fig. 2d) obtained before, the operation described in Eq. 3 is implemented.

The mass center of each remaining set of pixels is then located. These centers are considered the eye candidates (Fig. 2f), and their features are extracted with the HOG descriptor.
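
As an illustration of the mass-center step, the following minimal Python sketch labels the connected sets of pixels in a binary mask and returns the centroid of each one. The function name `candidate_centers`, the 4-connectivity, and the toy mask are assumptions made for this example; the paper does not state which connectivity or implementation was used.

```python
from collections import deque


def candidate_centers(mask):
    """Return the mass center (row, col) of each 4-connected set of nonzero pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    centers = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood-fill one region while accumulating its coordinates.
            queue = deque([(r, c)])
            seen[r][c] = True
            sum_r = sum_c = count = 0
            while queue:
                y, x = queue.popleft()
                sum_r += y
                sum_c += x
                count += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            centers.append((sum_r / count, sum_c / count))
    return centers


# Hypothetical mask with two remaining pixel sets.
mask = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 1, 0],
    [0, 1, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
centers = candidate_centers(mask)
```

For this toy mask, `centers` holds two candidates, at (1.5, 1.5) and (1.5, 5.0) in (row, column) coordinates. Each center would then define the ROI passed to the HOG descriptor.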

Fig. 2. The skin segmentation process: (a) image, (b) segmented skin, (c) noise removal, (d) largest skin region, (e) Eq. 3 result, and (f) ROI based on centers in the original image. The black labels were used to preserve the identity of the individuals.

Feature Extraction and Selection. The HOG descriptor was introduced by Dalal and Triggs [9] as a set of features for pedestrian recognition, although it has since been shown to be capable of describing other objects [10].

The result of the HOG algorithm is a discrete group of features that describe the image. The number of cells and orientation bins defines the number of features. The configuration used in this work, defined empirically, was a window size of 16\(\,\times \,\)16 and a cell size of 8\(\,\times \,\)8, generating 144 attributes. Every eye candidate has its features extracted with HOG.
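
To make the relation between layout and feature count concrete, here is a small sketch of the usual HOG length arithmetic: number of blocks, times cells per block, times orientation bins. The function `hog_length` is hypothetical, and the 9 orientation bins, the block stride, and the 24×24 region in the last line are assumptions. The text gives only the 16×16 window and 8×8 cell sizes, so that last line shows one layout that happens to yield 144, not necessarily the one the authors used.

```python
def hog_length(window, block, stride, cell, bins):
    """Number of HOG features for a region; all sizes are (width, height) in pixels."""
    blocks_x = (window[0] - block[0]) // stride[0] + 1
    blocks_y = (window[1] - block[1]) // stride[1] + 1
    cells_per_block = (block[0] // cell[0]) * (block[1] // cell[1])
    return blocks_x * blocks_y * cells_per_block * bins


# Classic pedestrian-detection layout: 7 x 15 blocks, 4 cells each, 9 bins.
pedestrian = hog_length((64, 128), (16, 16), (8, 8), (8, 8), 9)

# A single 16x16 block of 8x8 cells with 9 bins (assumed bin count).
single_block = hog_length((16, 16), (16, 16), (8, 8), (8, 8), 9)

# Assumed layout: four overlapping 16x16 blocks over a hypothetical 24x24 region.
four_blocks = hog_length((24, 24), (16, 16), (8, 8), (8, 8), 9)
```

Under these assumptions a single 16×16 block contributes 36 values, so 144 attributes corresponds to four such blocks.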

With the HOG features extracted, we perform feature selection to retain just the best features. The algorithm used for feature selection was Best First (BF) [11]. The BF algorithm searches the space of attribute subsets by exploring the most promising set with a backtracking facility [20]. The parameters used for BF were defined by Auto-Weka.

Classification. The candidates were classified using Random Forest (RF). RF is an ensemble learning algorithm for classification. It works by building a set of tree predictors where each tree depends on the values of a randomly sampled vector [19]. The RF implementation in WEKA [20] was used to generate the classification model. The dataset used was [12]. The Auto-Weka tool [16] was used to estimate the parameters.

2.2 Sclera Segmentation

Using the region of interest (ROI) of the eye obtained in the previous step, the sclera is segmented with the Image Foresting Transform (IFT). IFT is a tool created to transform an image processing problem into a minimum-cost path forest problem using a graph derived from the image [13]. It works by creating a minimum-cost path that connects each node of the graph to a seed based on the similarity between neighbors, building a forest that covers the whole image. Each pixel of the image has three attributes: the cost of the path between the seed and the pixel, its predecessor pixel on the path, and the label of its seed. That way, if a new minimum path is found, the label, cost, and predecessor are updated [13]. With the set of seeds defined, the IFT can separate foreground pixels from background pixels. To place the seeds in the sclera and in the rest of the eye, the iris must be located.

The algorithm is executed on the saturation channel of the HSV color model (Fig. 3).

Iris Location. The location of the iris is obtained using the Hough Transform to find circumferences in the grayscale image. The circle found by the Hough Transform has its center and radius refined by two additional steps; this procedure is based on [17].

With the approximate location of the iris center obtained, the first step is the circumference shift. It consists of moving the center of the found circumference to its nearest neighbors in order to obtain the periphery whose pixels have the lowest intensity. An example of this step is shown in Fig. 4a, where the yellow circumference is the first location of the iris and the red circle is the location after the circumference shift.

The second step is to increase and decrease the radius of the circle, looking for the radius at which the intensity of the pixels is lowest. An example of this step is shown in Fig. 4b, where the yellow circumference is the first radius of the iris and the red circle is the radius after the radius shift.
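
The two refinement steps can be sketched as follows, assuming the initial circle is already available (for example from a Hough Transform). The helper names, the 72 samples per circle, and the search ranges `shift` and `delta` are assumptions chosen for illustration; the procedure in [17] may differ in its details.

```python
import math


def ring_pixels(cx, cy, radius, samples=72):
    """Yield integer (x, y) pixels sampled along a circle."""
    for k in range(samples):
        angle = 2 * math.pi * k / samples
        yield (int(round(cx + radius * math.cos(angle))),
               int(round(cy + radius * math.sin(angle))))


def ring_mean(image, cx, cy, radius):
    """Mean intensity on the circle; samples outside the image are skipped."""
    rows, cols = len(image), len(image[0])
    values = [image[y][x] for x, y in ring_pixels(cx, cy, radius)
              if 0 <= y < rows and 0 <= x < cols]
    return sum(values) / len(values) if values else float("inf")


def shift_center(image, cx, cy, radius, shift=2):
    """Step 1: try neighboring centers and keep the darkest periphery."""
    candidates = [(cx + dx, cy + dy)
                  for dy in range(-shift, shift + 1)
                  for dx in range(-shift, shift + 1)]
    return min(candidates, key=lambda c: ring_mean(image, c[0], c[1], radius))


def refine_radius(image, cx, cy, radius, delta=2):
    """Step 2: grow and shrink the radius and keep the darkest periphery."""
    radii = range(max(1, radius - delta), radius + delta + 1)
    return min(radii, key=lambda r: ring_mean(image, cx, cy, r))


# Toy image: bright background with a dark circle of radius 6 centered at (15, 15).
image = [[255] * 31 for _ in range(31)]
for x, y in ring_pixels(15, 15, 6):
    image[y][x] = 0

cx, cy = shift_center(image, 14, 16, 6)   # start from a slightly wrong center
radius = refine_radius(image, cx, cy, 5)  # start from a slightly wrong radius
```

On this synthetic image the center moves from (14, 16) to (15, 15), and the radius search started at 5 settles on 6. On real eye images the minimum is less sharp, so the search ranges would need tuning.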

Seeds Placement. The background seeds were placed on the border of the image. The sclera seeds were obtained based on an adaptation of [14], which uses the eye geometry to find the location of the sclera. The two seeds corresponding to the sclera are placed on the right and left sides of the iris at a distance of 1.1 and at 25 horizontal degrees of the found radius. This is illustrated in Fig. 5, where the black points indicate the two seeds to be used. With the background and foreground pixels defined, the regions grow until the image is completely segmented.
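
One possible reading of this placement rule is sketched below. It assumes that "a distance of 1.1" means 1.1 times the iris radius measured from the iris center, that the 25 degrees are measured from the horizontal axis through that center, and that the seeds lie above that axis in image coordinates. These are interpretations, not details confirmed by the text, and the function names are hypothetical.

```python
import math


def sclera_seeds(cx, cy, radius, distance_factor=1.1, angle_deg=25.0):
    """Return the left and right sclera seeds as (x, y) pixel coordinates."""
    dx = distance_factor * radius * math.cos(math.radians(angle_deg))
    dy = distance_factor * radius * math.sin(math.radians(angle_deg))
    # Assumption: seeds sit above the horizontal axis (smaller y in image space).
    left = (round(cx - dx), round(cy - dy))
    right = (round(cx + dx), round(cy - dy))
    return left, right


def border_seeds(rows, cols):
    """Return every pixel on the image border as a (row, col) background seed."""
    return [(r, c) for r in range(rows) for c in range(cols)
            if r in (0, rows - 1) or c in (0, cols - 1)]


# Hypothetical iris at (100, 50) with radius 20 pixels.
left_seed, right_seed = sclera_seeds(100, 50, 20)
background = border_seeds(4, 5)
```

For the hypothetical iris above, the seeds land at (80, 41) and (120, 41), just outside the iris circle on either side.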

3 Results and Discussion

A number of experiments were performed to measure the reliability of all steps of the proposed method. The following sections explain the image databases used as well as the tests applied using the proposed method.

3.1 Image Databases

Two image databases were used. The dataset presented in [12] was used for eye location as well as for sclera segmentation. Its images have a dimension of 2048\(\,\times \,\)1536 pixels and contain the faces of 45 individuals in 5 different poses (225 images). This dataset was used in strabismus research and is composed of individuals who have ocular deviations. The UBIRIS.v2 [15] database was used only for sclera segmentation because it contains exclusively eye images. This dataset simulates unconstrained conditions with realistic noise factors (200 images).

3.2 Experiments on the Eye Localization Method

The detection of the eye candidates was measured based on the percentage of eyes in the dataset that were not considered a candidate. The database contains 450 eyes in its 225 images; of those, 97.7% were found by the method. Figure 6a shows an example of an eye missed by the algorithm, where the blue rectangle indicates correctly found candidates.

The eye location had an accuracy of 98.13%, a precision of 98.1%, and a recall of 98.1%. Incorrect classifications are shown in Fig. 6b–c. The false negatives and false positives occurred mainly for candidates where the shape of the eye is not fully contained inside the ROI. Figure 6b shows false-negative samples, while Fig. 6c shows false-positive samples, where the red rectangles correspond to candidates classified as non-eye and the green rectangles correspond to candidates classified as eye.

3.3 Experiments on the Sclera Segmentation Method

To evaluate the sclera segmentation method, we compare the areas of the scleras segmented manually and automatically. The segmentation was measured based on [18], which defines two equations to calculate precision and recall (Eq. 4). Examples of segmented scleras are shown in Fig. 7.

\[ \text{Precision} = \frac{NPAM}{NPRS}, \qquad \text{Recall} = \frac{NPAM}{NRMS} \qquad (4) \]

where NPAM = number of pixels retrieved in the sclera region by the automatically segmented mask, NPRS = number of pixels retrieved in the automatically segmented mask, and NRMS = number of pixels in the sclera region in the manually segmented mask.
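
As a worked example of these quantities, the sketch below counts NPAM, NPRS, and NRMS on two small binary masks. It takes precision as NPAM/NPRS and recall as NPAM/NRMS, the usual pixel-wise definitions. The function name and the toy masks are hypothetical.

```python
def sclera_precision_recall(auto_mask, manual_mask):
    """Pixel-wise precision and recall of an automatic mask against a manual one."""
    npam = nprs = nrms = 0
    for auto_row, manual_row in zip(auto_mask, manual_mask):
        for a, m in zip(auto_row, manual_row):
            nprs += bool(a)        # pixels retrieved by the automatic mask
            nrms += bool(m)        # sclera pixels in the manual mask
            npam += bool(a and m)  # retrieved pixels that are truly sclera
    return npam / nprs, npam / nrms


# Hypothetical masks: 5 automatic pixels, 6 manual pixels, 4 in common.
auto_mask = [
    [0, 1, 1, 1],
    [0, 1, 1, 0],
]
manual_mask = [
    [0, 0, 1, 1],
    [1, 1, 1, 1],
]
precision, recall = sclera_precision_recall(auto_mask, manual_mask)
```

Here precision is 4/5 = 0.8 and recall is 4/6, about 0.667. The sketch assumes both masks contain at least one nonzero pixel; empty masks would need explicit handling.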

The method achieved 86.02% precision and 84.15% recall on the UBIRIS.v2 database, and 80.39% precision and 79.95% recall on the database presented in [12]. Comparing these results with those reported in [18], an improvement can be observed over the best segmentation presented there, which achieved 85.21% precision and 80.21% recall. Several papers also use the UBIRIS.v2 database to perform sclera segmentation, but those works mostly target biometric recognition and do not report measures focused on the quality of the sclera segmentation, as in [15].

4 Conclusions

In this paper, a new eye location and sclera segmentation method has been proposed. The method detects the eyes and the iris and segments the sclera in face images.

The results for the eye location method using the dataset of [12] showed that the method has high accuracy and is robust on face images without a straight point for gaze reference. The sclera segmentation method was tested on both the dataset of [12] and the UBIRIS.v2 database. The segmented scleras show that the method tends to segment the whitest parts of the sclera and tends to miss sclera regions where the white appearance is absent or less evident.

The results show that the variation of illumination in the images compromised the segmentation in both databases.

For future work, it is important to explore more robust preprocessing techniques in order to reduce the influence of lighting on segmentation. The number of seeds used for the sclera segmentation can also be evaluated, as there is no limit on the number of seeds.

Our research group acknowledges financial support from FAPEMA (grant number UNIVERSAL-01082/16), CNPQ (grant number 423493/2016-7), and FAPEMA/CAPES.

References

1. Khosravia, M.H., Safabakhsh, R.: Human eye sclera detection and tracking using a modified time-adaptive self-organizing map. Pattern Recognit. 41, 2571–2593 (2008)

2. Zhu, Z., Ji, Q., Fujimura, K., Lee, K.: Combining Kalman filtering and mean shift for real time eye tracking under active IR illumination. In: International Conference on Pattern Recognition (2002)

3. Haro, A., Flickner, M., Essa, I.: Detecting and tracking eyes by using their physiological properties, dynamics, and appearance. In: IEEE International Conference on Computer Vision and Pattern Recognition (2000)

4. Bhoi, N., Mohanty, M.N.: Template matching based eye detection in facial image. Int. J. Comput. Appl. (0975–8887) (2010)

5. Das, A., Pal, U., Ballester, M.A.F., Blumenstein, M.: A new efficient and adaptive sclera recognition system. In: Computational Intelligence in Biometrics and Identity Management (CIBIM) (2014)

6. Alkassar, S., Woo, W.L., Dlay, S.S., Chambers, J.A.: A novel method for sclera recognition with images captured on-the-move and at-a-distance. In: Biometrics and Forensics (IWBF) (2016)

7. Abdullah-Al-Wadud, M., Chae, O.: Skin segmentation using color distance map and water-flow property. In: Information Assurance and Security, ISIAS 2008. Kyung Hee University, Seoul (2008)

8. Banić, N., Lončarić, S.: Color badger: a novel retinex-based local tone mapping operator. In: Elmoataz, A., Lezoray, O., Nouboud, F., Mammass, D. (eds.) ICISP 2014. LNCS, vol. 8509, pp. 400–408. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07998-1_46

9. Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection for image classification. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), San Diego, CA, USA, vol. 1, pp. 886–893 (2005)

10. Fleyeh, H., Roch, J.: Benchmark evaluation of HOG descriptors as features for classification of traffic signs. J. Digit. Imag. (2013)

11. Liu, H., Yu, L.: Toward integrating feature selection algorithms for classification and clustering. IEEE Trans. Knowl. Data Eng. 17(4), 491–502 (2005)

12. De Almeida, J.D.S., Silva, A.C., Teixeira, J.A.M., Paiva, A.C., Gattass, M.: Computer-aided methodology for syndromic strabismus diagnosis (2015)

13. de Miranda, P.A.V.: Image segmentation by the image foresting transform (2013)

14. Das, A., Pal, U., Ballester, M.A.F., Blumenstein, M.: Sclera recognition using dense-SIFT. In: Intelligent Systems Design and Applications (ISDA) (2013)

15. Proenca, H., Filipe, S., Santos, R., Oliveira, J., Alexandre, L.: The UBIRIS.v2: a database of visible wavelength images captured on-the-move and at-a-distance. IEEE Trans. PAMI 32, 1529–1535 (2010)

16. Thornton, C., Hutter, F., Hoos, H., Leyton-Brown, K.: The auto-WEKA: combined selection and hyperparameter optimization of classification algorithms. In: Proceedings of KDD (2013)

17. Zheng, Z., Yang, J., Yang, L.: A robust method for eye features extraction on color image. Pattern Recognit. Lett. 26, 2252–2261 (2005)

18. Das, A., Pal, U., Ferrer, M.A., Blumenstein, M.: SSRBC 2016: sclera segmentation and recognition benchmarking competition (2016)

19. Gislason, P.O., Benediktsson, J.A., Sveinsson, J.R.: Random forests for land cover classification. Pattern Recognit. Lett. 27, 294–300 (2006)

20. Weka 3: Data Mining Software in Java. http://www.cs.waikato.ac.nz/ml/weka/

© 2018 Springer International Publishing AG, part of Springer Nature

Cite this paper

Fialho Pinheiro, J., Sousa de Almeida, J.D., Braz Junior, G., Cardoso de Paiva, A., Corrêa Silva, A. (2018). Sclera Segmentation in Face Images Using Image Foresting Transform. In: Mendoza, M., Velastín, S. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2017. Lecture Notes in Computer Science(), vol 10657. Springer, Cham. https://doi.org/10.1007/978-3-319-75193-1_28

DOI: https://doi.org/10.1007/978-3-319-75193-1_28

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-75192-4

Online ISBN: 978-3-319-75193-1
