Abstract

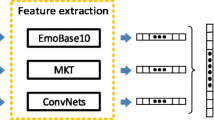

Given the ever-growing amount of available multimedia data, automatically annotating multimedia content with the feelings it is expected to evoke in users is a challenging problem. To address this problem, the emerging research field of video affective content analysis aims to exploit human emotions. In this field, where no dominant feature representation has yet emerged, choosing discriminative features that represent video segments effectively is a key issue in designing video affective content analysis algorithms. Most existing affective content analysis methods either use low-level audio-visual features or build hand-crafted higher-level representations on top of these low-level features. In this work, we propose to use deep learning methods, in particular convolutional neural networks (CNNs), to learn mid-level representations from automatically extracted low-level features. We exploit the audio and visual modalities of videos by using Mel-Frequency Cepstral Coefficients (MFCC) and color values in the RGB space to build higher-level audio and visual representations. We use the learned representations for the affective classification of music video clips, choosing multi-class support vector machines (SVMs) to classify video clips into four affective categories that correspond to the four quadrants of the Valence-Arousal (VA) space. Results on a subset of the DEAP dataset (76 music video clips) show that using higher-level representations instead of low-level features yields a significant improvement in video affective content analysis.
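The four target categories can be made concrete with a short labeling sketch. It assumes that each clip has (valence, arousal) ratings on DEAP's 1 to 9 self-assessment scale, that ratings from several viewers are averaged per clip, and that the midpoint 5 separates "low" from "high". The threshold, the averaging step, and the label names HAHV, HALV, LAHV, and LALV are illustrative assumptions, not details taken from the paper.

```python
from statistics import mean
from typing import Sequence, Tuple

# Assumption: ratings lie on a 1-9 scale and 5.0 splits "low" from "high".
# This threshold is an illustrative choice, not the paper's stated rule.
MIDPOINT = 5.0

# Hypothetical label names for the four Valence-Arousal quadrants,
# keyed by (high_arousal, high_valence).
QUADRANTS = {
    (True, True): "HAHV",    # high arousal, high valence
    (True, False): "HALV",   # high arousal, low valence
    (False, True): "LAHV",   # low arousal, high valence
    (False, False): "LALV",  # low arousal, low valence
}


def va_quadrant(valence: float, arousal: float, midpoint: float = MIDPOINT) -> str:
    """Map a single (valence, arousal) pair to one of four quadrant labels."""
    if not (1.0 <= valence <= 9.0 and 1.0 <= arousal <= 9.0):
        raise ValueError("ratings are expected on a 1-9 scale")
    # Values exactly at the midpoint count as "low" (an arbitrary tie rule).
    high_arousal = arousal > midpoint
    high_valence = valence > midpoint
    return QUADRANTS[(high_arousal, high_valence)]


def clip_label(ratings: Sequence[Tuple[float, float]]) -> str:
    """Average per-viewer (valence, arousal) ratings, then assign a quadrant.

    Averaging across viewers is an assumption made for this sketch.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    valence = mean(r[0] for r in ratings)
    arousal = mean(r[1] for r in ratings)
    return va_quadrant(valence, arousal)
```

Under these assumptions, a clip rated (6, 4) by one viewer and (8, 2) by another averages to valence 7 and arousal 3, so `clip_label` places it in the low-arousal, high-valence quadrant. Each clip's label would then serve as the target class for the multi-class SVM.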

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Acar, E., Hopfgartner, F., Albayrak, S. (2014). Understanding Affective Content of Music Videos through Learned Representations. In: Gurrin, C., Hopfgartner, F., Hurst, W., Johansen, H., Lee, H., O’Connor, N. (eds) MultiMedia Modeling. MMM 2014. Lecture Notes in Computer Science, vol 8325. Springer, Cham. https://doi.org/10.1007/978-3-319-04114-8_26

DOI: https://doi.org/10.1007/978-3-319-04114-8_26

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-04113-1

Online ISBN: 978-3-319-04114-8

eBook Packages: Computer Science, Computer Science (R0)