Abstract

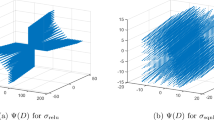

We attack the classical neural network training problem by successive piecewise linearization, applying three different methods for the global optimization of the local piecewise linear models. The three methods are compared with one another, and with steepest descent and the stochastic gradient method, on a regression problem for the Griewank function.
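
For orientation, the regression target named above can be sketched in a few lines. The formula is the standard Griewank test function; the sampling box `bound`, the sample count, the seed, and the helper name `sample_regression_data` are illustrative assumptions, not the experimental setup of the paper, which the abstract does not specify.

```python
import math
import random


def griewank(x):
    """Standard Griewank test function: 1 + sum(x_i^2)/4000 - prod(cos(x_i/sqrt(i)))."""
    quadratic = sum(xi * xi for xi in x) / 4000.0
    oscillation = math.prod(
        math.cos(xi / math.sqrt(i)) for i, xi in enumerate(x, start=1)
    )
    return 1.0 + quadratic - oscillation


def sample_regression_data(n_samples, dim, bound=10.0, seed=0):
    """Hypothetical helper: draw inputs uniformly from [-bound, bound]^dim
    and pair them with Griewank values as regression targets."""
    rng = random.Random(seed)
    inputs = [
        [rng.uniform(-bound, bound) for _ in range(dim)]
        for _ in range(n_samples)
    ]
    targets = [griewank(x) for x in inputs]
    return inputs, targets


if __name__ == "__main__":
    xs, ys = sample_regression_data(n_samples=5, dim=2)
    for x, y in zip(xs, ys):
        print(x, y)
```

The function has its global minimum value 0 at the origin and many local minima from the cosine product, which is what makes it a demanding target for a network fit by local descent methods.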

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Griewank, A., Rojas, Á. (2019). Treating Artificial Neural Net Training as a Nonsmooth Global Optimization Problem. In: Nicosia, G., Pardalos, P., Umeton, R., Giuffrida, G., Sciacca, V. (eds) Machine Learning, Optimization, and Data Science. LOD 2019. Lecture Notes in Computer Science, vol 11943. Springer, Cham. https://doi.org/10.1007/978-3-030-37599-7_64

DOI: https://doi.org/10.1007/978-3-030-37599-7_64

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-37598-0

Online ISBN: 978-3-030-37599-7

eBook Packages: Computer Science, Computer Science (R0)