Abstract

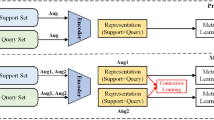

Previous works on meta-learning either relied on elaborately hand-designed network structures or adopted specialized learning rules to a particular domain. We propose a universal framework to optimize the meta-learning process automatically by adopting neural architecture search technique (NAS). NAS automatically generates and evaluates meta-learner’s architecture for few-shot learning problems, while the meta-learner uses meta-learning algorithm to optimize its parameters based on the distribution of learning tasks. Parameter sharing and experience replay are adopted to accelerate the architectures searching process, so it takes only 1-2 GPU days to find good architectures. Extensive experiments on Mini-ImageNet and Omniglot show that our algorithm excels in few-shot learning tasks. The best architecture found on Mini-ImageNet achieves competitive results when transferred to Omniglot, which shows the high transferability of architectures among different computer vision problems.

X. Zheng and P. Wang—These authors contributed equally to this work

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Andrychowicz, M., et al.: Learning to learn by gradient descent by gradient descent. CoRR abs/1606.04474 (2016). http://arxiv.org/abs/1606.04474

Finn, C., Abbeel, P., Levine, S.: Model-agnostic meta-learning for fast adaptation of deep networks. arXiv preprint arXiv:1703.03400 (2017)

Graves, A., Wayne, G., Danihelka, I.: Neural turing machines. CoRR abs/1410.5401 (2014). http://arxiv.org/abs/1410.5401

Hazan, E., Klivans, A., Yuan, Y.: Hyperparameter optimization: a spectral approach. arXiv preprint arXiv:1706.00764 (2017)

Lake, B.M., Salakhutdinov, R., Tenenbaum, J.B.: Human-level concept learning through probabilistic program induction. Science 350(6266), 1332–1338 (2015)

Ravi, S., Larochelle, H.: Optimization as a model for few-shot learning. In: International Conference on Learning Representations (ICLR) (2017)

Li, L., Jamieson, K., DeSalvo, G., Rostamizadeh, A., Talwalkar, A.: Hyperband: a novel bandit-based approach to hyperparameter optimization. arXiv preprint arXiv:1603.06560 (2016)

Long, L., Wang, W., Wen, J., Zhang, M., Lin, Q., Ooi, B.C.: Object-level representation learning for few-shot image classification. CoRR abs/1805.10777 (2018). http://arxiv.org/abs/1805.10777

Loshchilov, I., Hutter, F.: CMA-ES for hyperparameter optimization of deep neural networks. arXiv preprint arXiv:1604.07269 (2016)

Mishra, N., Rohaninejad, M., Chen, X., Abbeel, P.: Meta-learning with temporal convolutions. CoRR abs/1707.03141 (2017). http://arxiv.org/abs/1707.03141

Munkhdalai, T., Yu, H.: Meta networks. CoRR abs/1703.00837 (2017). http://arxiv.org/abs/1703.00837

Nichol, A., Schulman, J.: Reptile: a scalable metalearning algorithm. arXiv preprint arXiv:1803.02999 (2018)

Pham, H., Guan, M.Y., Zoph, B., Le, Q.V., Dean, J.: Efficient neural architecture search via parameter sharing. arXiv preprint arXiv:1802.03268 (2018)

Real, E., Aggarwal, A., Huang, Y., Le, Q.V.: Regularized evolution for image classifier architecture search. arXiv preprint arXiv:1802.01548 (2018)

Real, E., et al.: Large-scale evolution of image classifiers. arXiv preprint arXiv:1703.01041 (2017)

Santoro, A., Bartunov, S., Botvinick, M.: One-shot learning with memory-augmented neural networks. CoRR (2016). http://arxiv.org/abs/1605.06065

Schaul, T., Quan, J., Antonoglou, I., Silver, D.: Prioritized experience replay. CoRR abs/1511.05952 (2015). http://arxiv.org/abs/1511.05952

Shin, R., Packer, C., Song, D.: Differentiable neural network architecture search (2018)

Snoek, J., Larochelle, H., Adams, R.P.: Practical bayesian optimization of machine learning algorithms. In: Advances in Neural Information Processing Systems, pp. 2951–2959 (2012)

Sung, F., Yang, Y., Zhang, L., Xiang, T., Torr, P.H., Hospedales, T.M.: Learning to compare: Relation network for few-shot learning. arXiv preprint arXiv:1711.06025 (2017)

Sutton, R.S.: Policy gradient methods for reinforcement learning with function approximation. In: Advances in Neural Information Processing Systems, vol. 12, pp. 1057–1063 (1999)

Vinyals, O., Blundell, C., Lillicrap, T.P.: Matching networks for one shot learning. CoRR abs/1606.04080 (2016). http://arxiv.org/abs/1606.04080

Zoph, B., Le, Q.V.: Neural architecture search with reinforcement learning. arXiv preprint arXiv:1611.01578 (2016)

Zoph, B., Vasudevan, V., Shlens, J., Le, Q.V.: Learning transferable architectures for scalable image recognition. arXiv preprint arXiv:1707.07012 (2017)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Zheng, X., Wang, P., Wang, Q., Shi, Z., Xu, F. (2019). Efficient Automatic Meta Optimization Search for Few-Shot Learning. In: Lin, Z., et al. Pattern Recognition and Computer Vision. PRCV 2019. Lecture Notes in Computer Science(), vol 11859. Springer, Cham. https://doi.org/10.1007/978-3-030-31726-3_19

Download citation

DOI: https://doi.org/10.1007/978-3-030-31726-3_19

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-31725-6

Online ISBN: 978-3-030-31726-3

eBook Packages: Computer ScienceComputer Science (R0)