Plan for and set up content caching

Content caching performance depends primarily on two factors: network connectivity and hardware configuration.

Plan your content cache for best performance

You get the best performance from your content cache by connecting it to your network using Gigabit Ethernet. The content cache can serve hundreds of clients concurrently, which can saturate a Gigabit Ethernet port. Therefore, in most small- to medium-scale deployments, the performance bottleneck is the bandwidth of your local network rather than the Mac itself.
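As a rough illustration of why the network link tends to be the limit, the sketch below estimates per-client throughput and total delivery time when many clients share one Gigabit Ethernet port. It assumes the full 1 Gbit/s is usable and split evenly among clients, and it ignores protocol overhead; the client count and update size are hypothetical.

```python
# Rough estimate of how a shared Gigabit Ethernet port limits delivery speed.
# Assumptions (hypothetical): the full 1 Gbit/s is usable, bandwidth is split
# evenly among clients, and protocol overhead is ignored.

LINK_BITS_PER_SECOND = 1_000_000_000  # Gigabit Ethernet


def per_client_mbps(num_clients: int, link_bps: int = LINK_BITS_PER_SECOND) -> float:
    """Megabits per second each client gets if the link is shared evenly."""
    if num_clients <= 0:
        raise ValueError("num_clients must be positive")
    return link_bps / num_clients / 1_000_000


def total_transfer_seconds(num_clients: int, download_bytes: int,
                           link_bps: int = LINK_BITS_PER_SECOND) -> float:
    """Seconds to deliver one download of download_bytes to every client."""
    total_bits = num_clients * download_bytes * 8
    return total_bits / link_bps


if __name__ == "__main__":
    clients = 200              # hypothetical number of concurrent clients
    update_size = 3 * 10**9    # hypothetical 3 GB software update
    print(f"{per_client_mbps(clients):.1f} Mbit/s per client")
    print(f"{total_transfer_seconds(clients, update_size) / 60:.0f} minutes total")
```

With these numbers, each client gets about 5 Mbit/s and delivering the update to all 200 clients takes about 80 minutes, no matter how fast the Mac's processor is.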

To determine whether your Mac is the performance bottleneck when many clients access the content cache simultaneously, check the processor usage of the AssetCache process in Activity Monitor: open Activity Monitor, choose View > All Processes, then click CPU. If processor usage is constantly at or near the maximum, consider adding content caches to distribute requests across multiple computers.
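The same check can be approximated from Terminal by sampling `ps`. The sketch below parses the output of `ps -A -o pcpu= -o comm=` and sums the CPU percentage of processes whose executable name is `AssetCache`. Because `ps` reports percent of a single core, full capacity on a multi-core Mac is 100% times the core count; the 90% threshold is an arbitrary, hypothetical cutoff, and the helper names are illustrative.

```python
import os
import subprocess


def sample_ps() -> str:
    """Run ps once and return "<pcpu> <command>" lines without a header."""
    return subprocess.run(["ps", "-A", "-o", "pcpu=", "-o", "comm="],
                          capture_output=True, text=True, check=True).stdout


def assetcache_cpu_percent(ps_output: str, process_name: str = "AssetCache") -> float:
    """Sum %CPU for every process whose executable basename matches process_name."""
    total = 0.0
    for line in ps_output.splitlines():
        parts = line.strip().split(None, 1)
        if len(parts) != 2:
            continue  # skip blank or malformed lines
        pcpu, command = parts
        # macOS ps may print the full executable path, so compare basenames.
        if os.path.basename(command) == process_name:
            total += float(pcpu)
    return total


def is_cpu_bound(cpu_percent: float, cores: int, threshold: float = 0.9) -> bool:
    """True if usage is at or above threshold of total capacity (100% per core)."""
    return cpu_percent >= threshold * 100.0 * cores
```

On the caching Mac, `is_cpu_bound(assetcache_cpu_percent(sample_ps()), os.cpu_count() or 1)` returns `True` when the AssetCache process is using most of the available processor capacity at that moment; sampling several times gives a better picture than a single reading.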

Also, if clients in your environment download large amounts of widely varied content, set the cache size limit high enough. Otherwise, the content cache may frequently delete cached data and then have to download the same content again, consuming more internet bandwidth.
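The cost of an undersized limit can be illustrated with a small simulation. The sketch below models the cache as least-recently-used (LRU) eviction under a byte limit, which is an assumption for illustration (this guide doesn't say which eviction policy content caching uses), and counts the bytes fetched from the internet for a hypothetical request stream.

```python
from __future__ import annotations

from collections import OrderedDict


def internet_bytes(requests: list[tuple[str, int]], cache_limit: int) -> int:
    """Return bytes downloaded from the internet for (item, size) requests.

    The cache is modeled as LRU with a total size limit in bytes (an assumption).
    """
    cache: OrderedDict[str, int] = OrderedDict()
    used = 0
    fetched = 0
    for item, size in requests:
        if item in cache:
            cache.move_to_end(item)  # hit: mark as most recently used
            continue
        fetched += size  # miss: download from the internet
        if size > cache_limit:
            continue  # too large to cache at all
        while used + size > cache_limit:
            _, evicted_size = cache.popitem(last=False)  # evict least recently used
            used -= evicted_size
        cache[item] = size
        used += size
    return fetched


if __name__ == "__main__":
    GB = 10**9
    # Hypothetical workload: three 4 GB items, each requested twice in rotation.
    workload = [("A", 4 * GB), ("B", 4 * GB), ("C", 4 * GB)] * 2
    for limit in (12 * GB, 8 * GB):
        print(f"limit {limit // GB} GB -> {internet_bytes(workload, limit) // GB} GB downloaded")
```

With a 12 GB limit each item is downloaded once (12 GB total). With an 8 GB limit, each item is evicted just before it's requested again, so the same workload downloads 24 GB.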

Set up your content cache

The following are best practices for content caching:

Allow all Apple push notifications.

Don’t use manual proxy settings.

Don’t use a proxy to accept client requests and pass them to content caches.

Bypass proxy authentication for content caches.

Specify a TCP port for caching.

Manage intersite caching traffic.

Block rogue cache registration by enforcing the MDM restriction “Prevent content caching” on all Mac computers.
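Some of these practices, such as specifying a TCP port, become content cache settings, which are often delivered through MDM. A fixed port is useful, for example, when firewall rules must allow caching traffic explicitly. The sketch below uses Python's standard `plistlib` to serialize such a setting as a property list. The key name `Port` is an assumption based on Apple's Content Caching MDM payload and should be confirmed against that payload's reference; the port number in the usage example is hypothetical.

```python
import plistlib


def content_cache_settings(port: int) -> bytes:
    """Build an XML property list that pins the content cache to one TCP port.

    ASSUMPTION: the key name "Port" mirrors Apple's Content Caching MDM payload;
    confirm it against the payload reference before deploying.
    """
    if not 1 <= port <= 65535:
        raise ValueError(f"{port} is not a valid TCP port")
    settings = {"Port": port}
    return plistlib.dumps(settings)  # XML format is plistlib's default
```

For example, `content_cache_settings(49152)` returns a small XML plist containing `<key>Port</key>` and `<integer>49152</integer>`, which you could inspect before adding it to a profile.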

Use multiple content caches

You can use multiple content caches on your network. Content caches on the same network are called peers, and they share content with each other. If you have more than one, you can specify peer and parent relationships among them; content caching uses these relationships to determine which content cache is queried to fulfill a content request.

You can also arrange your content caches in a hierarchy. The content caches at the top of the hierarchy are called parents, and they provide content to their children.
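The lookup order these relationships imply can be sketched as a simplified model: a cache serves content it already has, otherwise asks its peers, then its parent, and only then the internet. This is an illustration under those assumptions, not Apple's implementation; the `Cache` class and its fields are hypothetical, and real caches also take availability and parent selection policies into account.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)
class Cache:
    """Hypothetical model of a content cache with peer and parent relationships."""
    name: str
    peers: list[Cache] = field(default_factory=list)
    parent: Cache | None = None
    contents: set[str] = field(default_factory=set)

    def fetch(self, item: str) -> str:
        """Return the name of the source that supplied item, caching it locally."""
        if item in self.contents:
            return self.name
        source = None
        for peer in self.peers:  # assumption: peers are asked before the parent
            if item in peer.contents:
                source = peer.name
                break
        if source is None:
            # The parent repeats the same lookup: its own peers, then its parent.
            source = self.parent.fetch(item) if self.parent else "internet"
        self.contents.add(item)
        return source
```

For example, with two peer caches `a` and `b` under one parent, the first request from `a` comes from the internet (and is cached by both the parent and `a`), a later request from `b` is served by its peer `a`, and a request from another child of the same parent is served by the parent.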

Sample network configurations for content caches

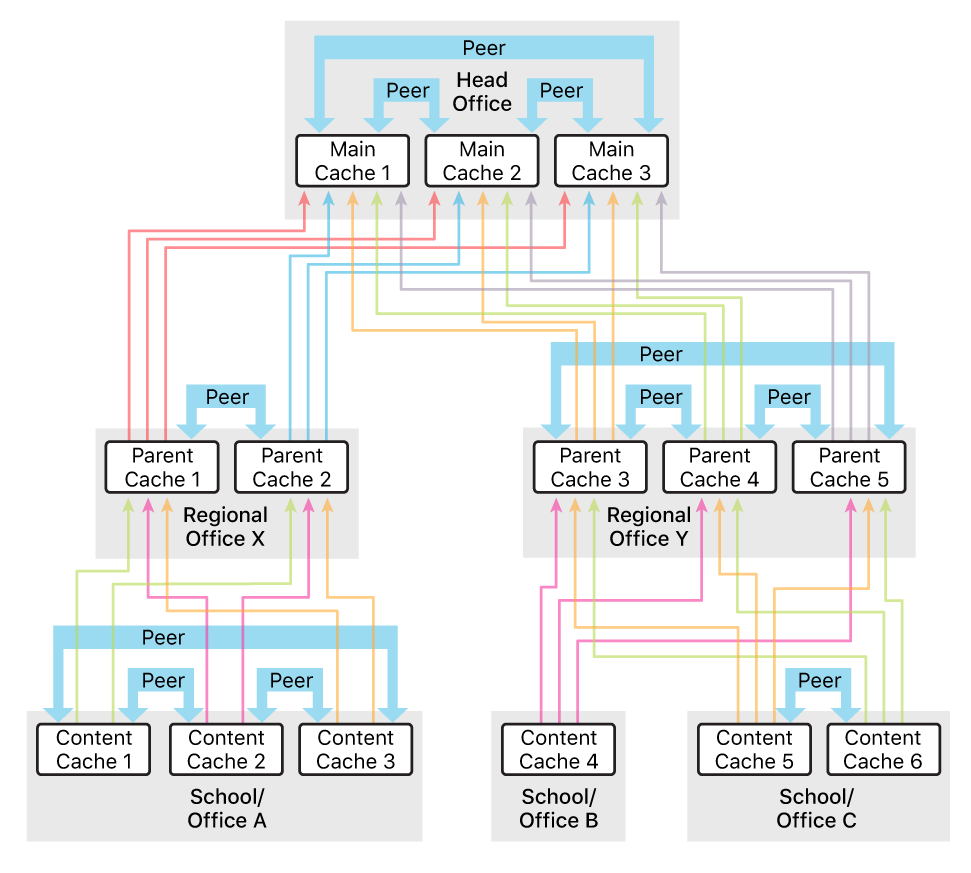

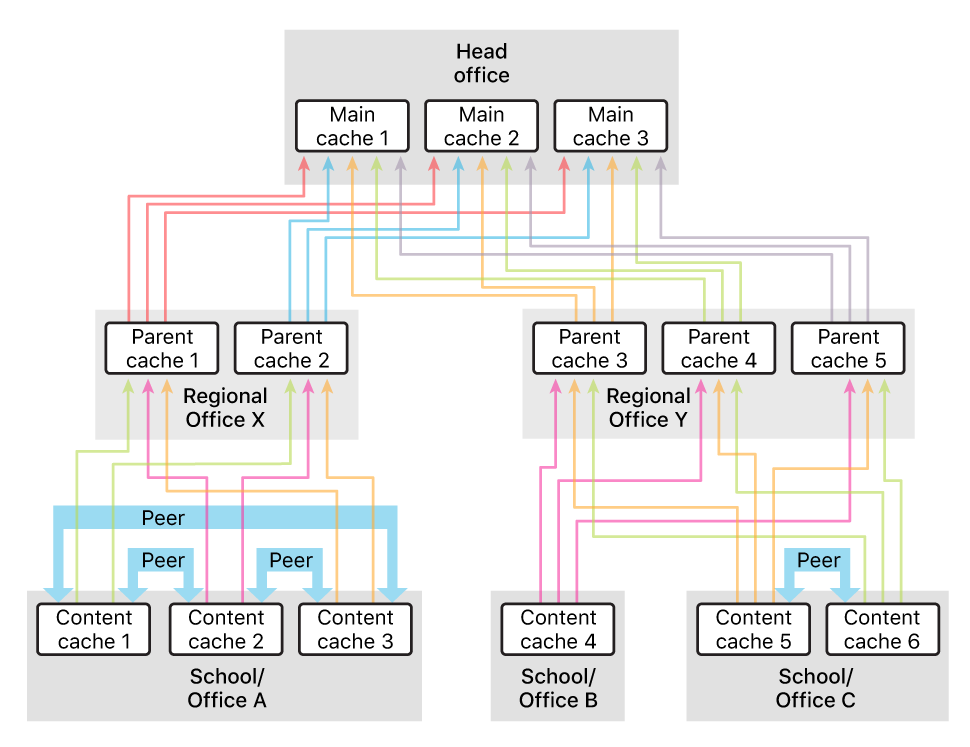

In the examples below, the network is organized into a three-level hierarchy that has multiple tiers of parent content caches; the content differs in how the peer content caches are defined. On the left side, peers are defined at each level of the hierarchy. On the right side, peers are defined only at the lowest level of the hierarchy.

Here’s an example configuration using more peers than parents:

Here’s an example configuration using more parents than peers:

You might choose a configuration like the first example to maximize sharing among caches: if one of the content caches in a location is unavailable, another might already have the same content cached. Content caches 1–6 and parent caches 1–5 can use the parent selection policies first-available, random, round-robin, or sticky-available.
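The four policies named above can be modeled roughly as follows. These semantics are a simplified reading, not Apple's implementation: first-available picks the first reachable parent in list order, random picks any reachable parent, round-robin rotates through reachable parents, and sticky-available keeps using the same parent until it becomes unavailable. The `ParentSelector` class and its `available` callback are hypothetical.

```python
from __future__ import annotations

import random
from typing import Callable

POLICIES = {"first-available", "random", "round-robin", "sticky-available"}


class ParentSelector:
    """Hypothetical model of parent selection policies for a content cache."""

    def __init__(self, parents: list[str], policy: str,
                 available: Callable[[str], bool],
                 rng: random.Random | None = None):
        if policy not in POLICIES:
            raise ValueError(f"unsupported policy: {policy}")
        self.parents = parents
        self.policy = policy
        self.available = available
        self.rng = rng or random.Random()
        self._next = 0                    # round-robin position in self.parents
        self._sticky: str | None = None   # parent in use for sticky-available

    def choose(self) -> str | None:
        """Return a parent to query, or None if no parent is reachable."""
        up = [p for p in self.parents if self.available(p)]
        if not up:
            return None  # no parent reachable; the cache would go to the internet
        if self.policy == "first-available":
            return up[0]
        if self.policy == "random":
            return self.rng.choice(up)
        if self.policy == "round-robin":
            while True:  # terminates because at least one parent is up
                candidate = self.parents[self._next % len(self.parents)]
                self._next += 1
                if candidate in up:
                    return candidate
        # sticky-available: keep the current parent while it stays available.
        if self._sticky not in up:
            self._sticky = up[0]
        return self._sticky
```

The design difference shows up when a parent fails and recovers: first-available returns to the first parent as soon as it's back, while sticky-available stays with the replacement it switched to.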

You might choose a configuration matching the second example to maximize the total size of the cache. Parent caches 1–5 don’t share content with each other, and neither do main caches 1–3. Content caches 1–6 and parent caches 1–5 can use the parent selection policy url-path-hash.
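The url-path-hash policy suits this goal because each URL path always maps to the same parent, so an item is stored on only one parent instead of being duplicated across several. The sketch below illustrates the idea with SHA-256 modulo the number of parents; the hash function content caching actually uses isn't stated here, and the parent names and URLs are hypothetical.

```python
from __future__ import annotations

import hashlib
from urllib.parse import urlsplit


def parent_for_url(url: str, parents: list[str]) -> str:
    """Pick a parent deterministically from the URL's path (illustrative hash)."""
    if not parents:
        raise ValueError("at least one parent is required")
    path = urlsplit(url).path  # only the path is hashed; host and query are ignored
    digest = hashlib.sha256(path.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(parents)
    return parents[index]
```

Because the choice depends only on the path, every child cache that requests the same item reaches the same parent, which fits a layout where parents don't share content with each other. One trade-off of plain modulo hashing is that changing the number of parents remaps most paths.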