Abstract

This paper presents a novel approach to extracting color-texture features for image retrieval applications, namely the Color Directional Local Quinary Pattern (CDLQP). The proposed descriptor extracts channel-wise (R, G and B) directional edge information between a reference pixel and its surrounding neighbors by encoding their grey-level differences as quinary values (−2, −1, 0, 1, 2), rather than binary or ternary values, along the 0°, 45°, 90° and 135° directions of an image. This combination is not present in existing descriptors (LBP, LTP, CS-LBP, LTrPs, DExPs, etc.). To evaluate the retrieval performance of the proposed descriptor, two experiments have been conducted, on the Corel-5000 and MIT-Color databases respectively. The proposed descriptor shows a significant improvement over the standard local binary pattern (LBP), center-symmetric local binary pattern (CS-LBP), directional binary code (DBC) and other existing transform domain techniques in image retrieval (IR) systems.

Introduction

With the rapid expansion of digitization, it has become imperative to find methods to browse and search images efficiently in immense databases. In general, there are three types of approaches to image retrieval: text-based, content-based and semantic-based. Web-based search engines such as Google and Yahoo are used extensively to search for images by text keywords. In a text-based approach, every image must be indexed properly before it can be retrieved. Such indexing is highly tedious and is unrealistic to carry out by human annotation. Hence, a more efficient search mechanism called “content based image retrieval” (CBIR) is required. Image retrieval has become a thrust area in fields such as medicine, entertainment and science. In the content-based approach, the search analyzes the actual content of the image rather than metadata such as keywords, tags or descriptions associated with it. A system that can filter images based on their content would therefore provide better indexing and return more accurate results. The effectiveness of a CBIR approach depends greatly on feature extraction, which is its most prominent step. CBIR employs the visual content of an image, such as color, texture, shape and faces, to index the image database. These features can be further classified as general (texture, color and shape) and domain-specific (fingerprints, human faces) features. In this paper we focus mainly on low-level features; the feature extraction method used here is an effective way of integrating low-level features into a whole. Extensive literature surveys on CBIR are available in [1–4].

Color is one of the most significant features in content-based image retrieval (CBIR), provided it is kept semantically intact and perceptually oriented. In addition, the color structure of a visual scene changes with size, resolution and orientation. Color histogram [5] based image retrieval is simple to implement and has been widely used and studied in CBIR systems. However, the retrieval performance of such descriptors is generally limited by their inadequate discrimination power, mainly on very large datasets. Therefore, several color descriptors have been proposed to exploit spatial information, including compact color central moments and the color coherence vector, as reported in the literature [6, 7].

Texture is one of the most important characteristics of an image. Texture analysis has been used extensively in CBIR systems due to its potential value, and texture analysis and retrieval have gained wide attention in medical, industrial, document analysis and many other applications. Various algorithms have been proposed for texture analysis, such as automated binary texture features [8], the Wavelet and Gabor Wavelet Correlogram [9, 10], Rotated Wavelet and Rotated Complex Wavelet filters [11–13] and the Multiscale Ridgelet Transform [14]. In practice, texture features can be combined with color features to improve retrieval accuracy; examples include wavelets and color vocabulary trees [15] and the retrieval of translated, rotated and scaled color textures [16].

In addition to texture features, local image feature extraction has attracted increasing attention in recent years. A visual content descriptor can be either local or global. A local descriptor uses the visual features of regions or objects to describe the image, whereas a global descriptor uses the visual features of the whole image. Several local descriptors have been described in the literature [17–29], of which the local binary pattern (LBP) [17] is the most popular.

The main contributions of this work are as follows. (a) A new color-texture descriptor is proposed that extracts texture (DLQP) features from the individual R, G and B color channels. (b) To reduce the feature vector length of the proposed descriptor, the color-texture features are extracted from the horizontal and vertical directions only.

The paper is organized as follows. Section “Introduction” gives the introduction. The local patterns and the proposed descriptor are presented in Section “Local patterns with proposed Descriptor”. Section “Experimental results and discussions” presents the retrieval performance of the proposed descriptor and other state-of-the-art techniques on two benchmark datasets (Corel-5000 and MIT-Color). Finally, Section “Conclusions” concludes the paper.

Local patterns with proposed Descriptor

The concepts of LBP [17], LTP [21] and DBC [29] are used to extract texture features (DLQP) from the individual color channels (R, G and B) and so generate a new color-texture feature called CDLQP.

Local binary patterns (LBP)

The concept of LBP was derived from the general definition of texture in a local neighborhood. This method was successful in terms of speed and discriminative performance [17].

For a given 3 × 3 pixel pattern, the LBP value is calculated by comparing the center pixel value with the values of its neighbors, as follows:
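The standard LBP operator of [17, 18], written with the symbols used in this section, can be stated as the following reconstruction (the function name f_1 is an assumed label):

```latex
LBP_{N,R} = \sum_{i=1}^{N} 2^{(i-1)} \, f_1(p_i - p_c), \qquad
f_1(x) = \begin{cases} 1, & x \ge 0 \\ 0, & \text{otherwise} \end{cases}
```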

where N is the number of neighbors, R is the radius of the neighborhood, p_c denotes the grey value of the center pixel and p_i is the grey value of its ith neighbor.

The LBP encoding procedure from a given 3 × 3 pattern is illustrated in Figure 1.
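As a concrete illustration, the sketch below computes the 8-bit LBP code of every interior pixel of a grey-level array with NumPy. The neighbor ordering (clockwise from the top-left pixel) and the sample patch are assumptions made for the example; a different ordering only permutes the bits of the code.

```python
import numpy as np

# Clockwise neighbor offsets (row, col) starting at the top-left pixel.
# The starting point and direction are assumptions; they only permute bits.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_3x3(gray):
    """Return the 8-bit LBP code of every interior pixel of a 2-D array."""
    g = np.asarray(gray, dtype=np.int32)
    h, w = g.shape
    center = g[1:h - 1, 1:w - 1]
    codes = np.zeros_like(center)
    for bit, (dr, dc) in enumerate(OFFSETS):
        neighbor = g[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        # A neighbor that is at least as bright as the center sets its bit.
        codes += (neighbor >= center).astype(np.int32) << bit
    return codes

# Illustrative 3 x 3 patch (not the one in Figure 1); the center value is 6.
patch = np.array([[6, 5, 2],
                  [7, 6, 1],
                  [9, 8, 7]])
code = int(lbp_3x3(patch)[0, 0])  # 241 with this neighbor ordering
```

For the sample patch, the neighbors 6, 7, 8, 9 and 7 are not smaller than the center, so bits 0, 4, 5, 6 and 7 are set.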

Local ternary patterns (LTP)

The local ternary pattern (LTP) operator, introduced by Tan and Triggs [21], extends LBP to three-valued codes.
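Following Tan and Triggs, and using the symbols p_i, p_c and τ of this section, the three-valued coding function can be written as below. The function name f_1' is an assumed label.

```latex
f_1'(p_i, p_c, \tau) =
\begin{cases}
  +1, & p_i \ge p_c + \tau \\
  \phantom{+}0, & |p_i - p_c| < \tau \\
  -1, & p_i \le p_c - \tau
\end{cases}
```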

where τ is a user-specified threshold.

After computing local pattern LP (LBP or LTP) for each pixel (i, j), the whole image is represented by building a histogram as follows:
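A histogram of this kind is conventionally written as follows. The symbols H and f_2 and the pattern index l are assumed labels, with l running over the possible pattern values.

```latex
H(l) = \sum_{i=1}^{N_1} \sum_{j=1}^{N_2} f_2\big(LP(i,j),\, l\big), \qquad
f_2(x, y) = \begin{cases} 1, & x = y \\ 0, & \text{otherwise} \end{cases}
```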

where the size of the input image is N1 × N2.

Directional binary code (DBC)

The directional binary code (DBC) was proposed by Baochang et al. [29]. DBC encodes the directional edge information as follows.

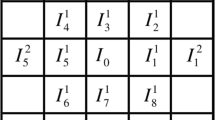

For a given image I, the first-order derivative I'(g_i) is calculated along the 0°, 45°, 90° and 135° directions. The detailed calculation of DBC in the red color channel along the 0° direction is shown in Figure 1.

The directional edges are obtained by,

The DBC is defined (α = 0°, 45°, 90° and 135°) as follows:
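A minimal sketch of a DBC-style code along the 0° direction is given below. It assumes that the first-order derivative is the difference between a pixel and its left-hand neighbor, that non-negative derivatives are coded as 1, and that the nine bits of a 3 × 3 window are read in row-major order. The function names, the bit order and the sample channel are illustrative choices, not taken from [29].

```python
import numpy as np

def derivative_0deg(channel):
    """First-order derivative along 0 degrees: I(r, c) - I(r, c - 1)."""
    g = np.asarray(channel, dtype=np.int32)
    return g[:, 1:] - g[:, :-1]

def dbc_codes(channel):
    """9-bit directional binary code for each 3 x 3 window of the derivative map."""
    bits = (derivative_0deg(channel) >= 0).astype(np.int32)
    h, w = bits.shape
    codes = np.zeros((h - 2, w - 2), dtype=np.int32)
    position = 0
    for dr in range(3):
        for dc in range(3):
            # Row-major bit order inside the window (an assumed convention).
            codes += bits[dr:dr + h - 2, dc:dc + w - 2] << position
            position += 1
    return codes

# Illustrative red-channel values, not those of Figure 1.
red = np.array([[10, 12, 11, 15, 15],
                [ 9,  9, 14, 13, 16],
                [ 8, 10, 10, 12, 11]])
codes = dbc_codes(red)  # one row with two codes: 477 and 238
```

The other three directions would differ only in which neighbor is subtracted in the derivative step.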

Proposed descriptor

Color Directional Local Quinary Pattern (CDLQP)

In this section, the procedure for generating the new color-texture descriptor (CDLQP) is explained. Let I_i be the ith plane (color channel) of the image (e.g., the red component of the “RGB” color space), where i = 1, 2, 3. The DLQP feature is computed independently for each of the R, G and B color channels.

For a given image I, the first-order derivatives of 0°, 45°, 90° and 135° directions are calculated using Eq. (6).

The directional edges were obtained by Eq. (9). The local quinary values were obtained by Eq. (10).

where τ2 and τ1 are the upper and lower threshold parameters, respectively.

The Nth-order CDLQP is defined (α= 0°, 45°, 90° and 135°) as follows:

DLQP is a quinary (−2, −1, 0, 1, 2) pattern, which is further converted into four binary patterns: two upper patterns (UP) and two lower patterns (LP). The detailed representation of these four patterns is shown in Figure 2. Finally, the whole image is represented by building a histogram as given in Eq. (12).

where the size of the input image is N1 × N2.

In this paper, to reduce the feature vector length, the color-texture features are extracted from the horizontal and vertical directions only.

The details of the proposed color-texture descriptor are as follows. The steps for extracting the 0° information are shown in Figure 1. Figure 2 and Eq. (10) explain the procedure to calculate the quinary pattern. The generated quinary pattern is further coded into two upper (A and B) and two lower (C and D) binary patterns, which are shown in Figure 2. Pattern A is obtained by replacing 2 with 1 and all other values (−2, −1, 0 and 1) with 0. Likewise, pattern B is obtained by keeping 1 as 1 and replacing all other values with 0. A similar procedure is followed for the two lower patterns.

In Figure 2, the texture information “−11, 3, −14, 8, 5, 5, −2, −4, −1” is obtained when the first-order derivative is applied in the 0° direction. These derivatives are then coded into the quinary pattern “−2, 1, −2, 2, 2, 2, −1, −2, −1” using an upper threshold of 2 and a lower threshold of 1. Finally, the quinary pattern is converted into four binary patterns (two UP and two LP). The entire operation is applied to the individual color channels to generate the color-texture features.
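The quinary coding and its split into four binary patterns can be sketched as follows. The thresholding rule (magnitudes of at least the upper threshold map to ±2, magnitudes of at least the lower threshold map to ±1, everything else to 0) is an assumption, since the exact inequalities of Eq. (10) are not reproduced here. The assignment of −1 to pattern C and −2 to pattern D is also assumed, and the thresholds and sample derivatives are illustrative rather than those of Figure 2.

```python
import numpy as np

def quinary(derivatives, tau1, tau2):
    """Map derivatives to {-2, -1, 0, 1, 2}; tau1 is the lower, tau2 the upper threshold."""
    x = np.asarray(derivatives)
    q = np.zeros(x.shape, dtype=np.int8)
    q[np.abs(x) >= tau1] = 1   # at least the lower threshold
    q[np.abs(x) >= tau2] = 2   # at least the upper threshold
    return q * np.sign(x).astype(np.int8)

def split_patterns(q):
    """Binary patterns A (q == 2), B (q == 1), C (q == -1) and D (q == -2)."""
    return {name: (q == value).astype(np.uint8)
            for name, value in (("A", 2), ("B", 1), ("C", -1), ("D", -2))}

# Illustrative derivatives and thresholds (assumed values).
q = quinary([-7, 3, 0, 9, -2, 1], tau1=2, tau2=5)
patterns = split_patterns(q)
# q is [-2, 1, 0, 2, -1, 0]; pattern A marks only the position holding 2.
```

Each position is set in at most one of the four binary patterns, and positions coded 0 are set in none of them.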

Proposed system framework for image retrieval

Figure 3 illustrates the proposed image retrieval system framework; the algorithm is given below.

Algorithm: The proposed algorithm involves the following steps.

Input: Image; Output: Retrieval Result

1. Separate RGB color components from an image.
2. Calculate the directional edge information on each color space.
3. Compute the local quinary value for each pixel.
4. Construct the CDLQP histogram for each pattern.
5. Construct the feature vector.
6. Compare the query image with images in the database using Eq. (16).
7. Retrieve the images based on the best matches.
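Steps 1 to 5 can be sketched end to end as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the derivative is a simple neighbor difference, the quinary rule uses "at least" comparisons, each binary pattern is turned into 9-bit codes over 3 × 3 windows, and each histogram has 512 bins. The default thresholds and all function names are hypothetical, and the source does not state the resulting feature vector length.

```python
import numpy as np

def directional_derivative(channel, direction):
    """Difference with the adjacent pixel along 0 degrees (columns) or 90 degrees (rows)."""
    g = channel.astype(np.int32)
    if direction == 0:
        return g[:, 1:] - g[:, :-1]
    return g[1:, :] - g[:-1, :]

def quinary(x, tau1, tau2):
    """Code derivatives as -2..2 using lower (tau1) and upper (tau2) thresholds."""
    q = (np.abs(x) >= tau1).astype(np.int8) + (np.abs(x) >= tau2).astype(np.int8)
    return q * np.sign(x).astype(np.int8)

def code_histogram(bits):
    """512-bin histogram of the 9-bit codes formed from each 3 x 3 window of a binary map."""
    h, w = bits.shape
    codes = np.zeros((h - 2, w - 2), dtype=np.int64)
    for k in range(9):
        dr, dc = divmod(k, 3)
        codes += bits[dr:dr + h - 2, dc:dc + w - 2].astype(np.int64) << k
    return np.bincount(codes.ravel(), minlength=512)

def cdlqp_features(rgb, tau1=2, tau2=5):
    """Concatenate histograms over 3 channels x 2 directions x 4 binary patterns."""
    parts = []
    for c in range(3):                        # step 1: separate R, G and B
        for direction in (0, 90):             # step 2: horizontal and vertical edges
            d = directional_derivative(rgb[:, :, c], direction)
            q = quinary(d, tau1, tau2)        # step 3: local quinary values
            for value in (2, 1, -1, -2):      # step 4: one histogram per binary pattern
                parts.append(code_histogram(q == value))
    return np.concatenate(parts)              # step 5: feature vector

# Random stand-in image; a real system would load database images instead.
image = np.random.default_rng(0).integers(0, 256, size=(16, 16, 3))
features = cdlqp_features(image)
```

Under these assumptions the vector has 3 × 2 × 4 × 512 = 12288 entries; in practice the histograms would usually be normalized before matching so that image size does not dominate the distance.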

Query matching

The retrieval performance of any descriptor depends not only on the feature extraction approach but also on a good similarity metric. In this paper, four types of similarity distance measure are used, as discussed below:

where Q is the query image, Lg is the feature vector length, T is an image in the database, f_{I,i} is the ith feature of image I in the database and f_{Q,i} is the ith feature of the query image Q.
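As an illustration of steps 6 and 7 of the algorithm, the sketch below ranks database images by the d1 distance in the form commonly used with local-pattern descriptors, D(Q, I) = Σ_i |f_{I,i} − f_{Q,i}| / (1 + f_{I,i} + f_{Q,i}). That this matches Eq. (16) exactly is an assumption, the function names are hypothetical, and the feature values are made up for the example.

```python
import numpy as np

def d1_distance(query, database):
    """d1 distance between one query vector and each row of a feature matrix."""
    q = np.asarray(query, dtype=np.float64)
    db = np.asarray(database, dtype=np.float64)
    # Features are assumed non-negative (histogram counts), so the
    # denominator 1 + f_I + f_Q is always at least 1.
    return np.sum(np.abs(db - q) / (1.0 + db + q), axis=1)

def rank_images(query, database):
    """Indices of database images ordered from best to worst match."""
    return np.argsort(d1_distance(query, database), kind="stable")

# Toy feature matrix: one row per database image.
database = np.array([[4, 0, 2],
                     [1, 3, 2],
                     [4, 1, 2]])
query = np.array([4, 1, 2])
order = rank_images(query, database)  # image 2 first, then 0, then 1
```

Here the distances are 0.5, 0.9 and 0.0, so the identical image (index 2) is retrieved first.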

Advantages of proposed methods

1. A new color-texture descriptor is proposed that extracts texture (DLQP) features from the individual R, G and B color channels.
2. To reduce the feature vector length of the proposed descriptor, the color-texture features are extracted from the horizontal and vertical directions only.
3. To verify the retrieval performance of CDLQP, two extensive experiments have been conducted, on the Corel-5000 and MIT-Color databases respectively.
4. Compared with LBP, the retrieval performance improves significantly: by nearly 10.78% in terms of ARP on the Corel-5000 database and by 9.12% in terms of ARR on the MIT-Color database.

Experimental results and discussions

In image retrieval, various datasets are used for different purposes; these include the Corel dataset, the MIT dataset and the Brodatz texture dataset. The Corel dataset is the most popular and commonly used dataset for testing retrieval performance, the MIT-Color dataset is used for texture and color feature analysis, and the Brodatz dataset is used for texture analysis. In this paper, the Corel-5000 and MIT-Color datasets are used to verify the retrieval performance of the proposed descriptor.

In these experiments, each image in the database is used as the query image. The retrieval performance of the proposed method is measured in terms of recall, precision, average retrieval rate (ARR) and average retrieval precision (ARP), as given in Eq. (17) to Eq. (21) [26].

The recall for a query image I_q is defined in Eq. (17).

where N_G is the number of relevant images in the database, n is the number of top matches considered, f3(x) is the category of x, and Rank(I_i, I_q) returns the rank of image I_i (for the query image I_q) among all images in the database (|DB|).

Similarly, the precision is defined, as follows:

The average retrieval rate (ARR) and average retrieval precision (ARP) are defined in Eq. (20) and Eq. (21) respectively.

where, |DB| is the total number of images in the database.

where N1 is the number of relevant images (the number of images in a group), N_C is the number of groups and N2 is the total number of images to retrieve. The results obtained are discussed in the following subsections.
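Under the usual definitions (precision is the fraction of the top n matches that belong to the query's category, recall is the fraction of the N_G relevant images found among the top n), these measures can be sketched as below. The helper names and the toy labels are illustrative, and counting the query itself as a retrieved match is an assumption.

```python
import numpy as np

def precision_recall(ranked_labels, query_label, n, n_relevant):
    """Precision and recall of one query from the category labels of its ranked results."""
    hits = int(np.sum(np.asarray(ranked_labels[:n]) == query_label))
    return hits / n, hits / n_relevant

def average_scores(rankings, labels, n):
    """ARP and ARR: mean precision and recall with every image used as a query."""
    labels = np.asarray(labels)
    scores = []
    for q, order in enumerate(rankings):
        n_relevant = int(np.sum(labels == labels[q]))  # N_G for this query
        scores.append(precision_recall(labels[order], labels[q], n, n_relevant))
    arp, arr = np.mean(scores, axis=0)
    return float(arp), float(arr)

# Toy database: four images in two categories; rankings[q] lists image
# indices from best to worst match for query q (query included).
labels = [0, 0, 1, 1]
rankings = [[0, 1, 2, 3], [1, 0, 3, 2], [2, 0, 3, 1], [3, 2, 1, 0]]
arp, arr = average_scores(rankings, labels, n=2)  # both 0.875 here
```

In this toy case three of the four queries retrieve both members of their category in the top two, and one retrieves only itself, which gives 0.875 for both measures.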

Experiment on Corel-5000 database

The Corel-5000 database [30] is used to verify the performance of the proposed descriptor. It comprises 5000 images in 50 categories; each category has 100 images of size 187 × 126 or 126 × 187. The Corel database is a collection with varied content, ranging from natural images and animals to outdoor sports. In this experiment, the retrieval performance of the proposed descriptor is measured in terms of precision, average retrieval precision (ARP), recall and average retrieval rate (ARR). Table 1 reports the retrieval performance of the proposed descriptor on the Corel-5000 database in terms of ARP and ARR. Figure 4(a) and (b) show the category-wise retrieval performance of the proposed method and other existing methods, and Figure 4(c) and (d) show the retrieval performance over the entire Corel-5000 database. Table 2 reports the retrieval performance of the proposed descriptor with different distance measures. From Figure 4, it is observed that the proposed method performs worse than the other existing methods on categories 1, 25, 26, 27 and 50. The reason is that these categories contain distinct color information within the category. However, the overall (average) performance of the proposed method shows a significant improvement over the existing methods in terms of precision, recall, average retrieval precision and average retrieval rate on the Corel-5000 database. From Table 1 and Figure 4 it is evident that the proposed method outperforms the other existing methods on the Corel-5000 database. From Table 2 and Figure 5 it is clear that the d1 distance measure gives a better retrieval rate than the other distance measures. In this experiment, the proposed descriptor shows a 10.78% improvement over LBP in terms of ARP. The query results of the proposed method on the Corel-5000 database are shown in Figure 6 (the top-left image is the query image).
Finally, from the above discussion and observations it is clear that the proposed descriptor shows a significant improvement over other existing methods in terms of the evaluation measures on the Corel-5000 database.

Experiment on MIT-Color database

In this experiment, we first describe the MIT-Color dataset [31] and then the retrieval performance of the proposed descriptor. The MIT-Color dataset consists of 40 different textures of size 512 × 512. Each texture is divided into sixteen 128 × 128 non-overlapping sub-images, creating a database of 640 (40 × 16) images. Sample images from this database are shown in Figure 7. The retrieval performance of the proposed descriptor and other state-of-the-art techniques is shown in Figure 8. From Figure 8 it is clear that the proposed descriptor shows a significant improvement of around 9.12% over LBP in terms of ARR. From Figure 9 it is clear that the d1 distance measure gives a better retrieval rate than the other distance measures. From these observations it is concluded that the proposed descriptor yields a better retrieval rate than other state-of-the-art techniques. The query results of the proposed descriptor on the MIT-Color database are shown in Figure 10 (the top-left image is the query image).
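The tiling of each 512 × 512 texture into sixteen non-overlapping 128 × 128 sub-images can be sketched as follows. The function name and the random stand-in image are illustrative; loading the actual MIT-Color textures is outside the scope of the sketch.

```python
import numpy as np

def split_into_tiles(image, tile=128):
    """Split an H x W x C image into non-overlapping tile x tile sub-images."""
    h, w, c = image.shape
    rows, cols = h // tile, w // tile
    cropped = image[:rows * tile, :cols * tile]
    # Separate the row and column block indices, then bring them together.
    tiles = cropped.reshape(rows, tile, cols, tile, c).swapaxes(1, 2)
    return tiles.reshape(rows * cols, tile, tile, c)

# Random stand-in for one 512 x 512 color texture.
texture = np.random.default_rng(0).integers(0, 256, size=(512, 512, 3), dtype=np.uint8)
tiles = split_into_tiles(texture)  # shape (16, 128, 128, 3)
```

Applying this to all 40 textures yields the 40 × 16 = 640 database images, with the sixteen tiles of one texture forming one category.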

Conclusions

A novel color-texture descriptor, the Color Directional Local Quinary Pattern (CDLQP), is proposed for image retrieval applications. CDLQP extracts texture features from the individual R, G and B color channels using directional edge information in a neighborhood, encoding the gray-level differences between pixels as quinary values instead of binary or ternary ones. Extensive comparative experiments have been conducted to evaluate the color-texture features for IR on two public natural image databases, namely Corel-5000 and MIT-Color. The experimental results show that the proposed CDLQP descriptor achieves a significant improvement over other state-of-the-art techniques in IR systems.

Authors’ information

Santosh Kumar Vipparthi was born in 1985 in India. He received the B.E. and M.Tech. degrees in Electrical and Systems Engineering from Andhra University and IIT-BHU, India, in 2007 and 2010, respectively. He is currently pursuing the Ph.D. degree in the Department of Electrical Engineering at the Indian Institute of Technology BHU, Varanasi, India. His major interests are image retrieval and object tracking.

Shyam Krishna Nagar was born in 1955 in India. He received the Ph.D. degree in Electrical Engineering from the Indian Institute of Technology Roorkee, Roorkee, India, in 1991. He is currently working as a Professor in the Department of Electrical Engineering, Indian Institute of Technology BHU, Varanasi, Uttar Pradesh, India. His fields of interest include digital image processing, digital control, model order reduction and discrete event systems.

References

Rui Y, Huang TS: Image retrieval: Current techniques, promising directions and open issues. J. Vis. Commun. Image Represent 1999, 10: 39–62. 10.1006/jvci.1999.0413

Smeulders AWM, Worring M, Santini S, Gupta A, Jain R: Content-based image retrieval at the end of the early years. IEEE Trans Pattern Anal Mach Intell 2000, 22(12):1349–1380. 10.1109/34.895972

Kokare M, Chatterji BN, Biswas PK: A survey on current content based image retrieval methods. IETE J. Res. 2002, 48(3):261–271.

Ying L, Dengsheng Z, Guojun L, Wei-Ying M: A survey of content-based image retrieval with high-level semantics. Elsevier J. Pattern Recognition 2007, 40: 262–282. 10.1016/j.patcog.2006.04.045

Swain MJ, Ballard DH: Indexing via color histograms. Proc. 3rd Int. Conf. Computer Vision, Rochester Univ., NY 1991, 11–32.

Pass G, Zabih R, Miller J: Comparing images using color coherence vectors. In Proc. 4th. ACM Multimedia Conf, Boston, Massachusetts, US; 1997:65–73.

Stricker M, Orengo M: Similarity of color images. In Proc. Storage and Retrieval for Image and Video Databases, SPIE; 1995:381–392.

Smith JR, Chang SF: Automated binary texture feature sets for image retrieval. In Proc. IEEE Int. Conf. Acoustics, Speech and Signal Processing, Columbia Univ., New York; 1996:2239–2242.

Moghaddam HA, Khajoie TT, Rouhi AH, Saadatmand MT: Wavelet Correlogram: A new approach for image indexing and retrieval. Elsevier J. Pattern Recognition 2005, 38: 2506–2518. 10.1016/j.patcog.2005.05.010

Moghaddam HA, Saadatmand MT: Gabor wavelet Correlogram Algorithm for Image Indexing and Retrieval. 18th Int. Conf. Pattern Recognition, K.N. Toosi Univ. of Technol., Tehran, Iran 2006, 925–928.

Subrahmanyam M, Maheshwari RP, Balasubramanian R: A Correlogram Algorithm for Image Indexing and Retrieval Using Wavelet and Rotated Wavelet Filters. International Journal of Signal and Imaging Systems Engineering 2011, 4(1):27–34. 10.1504/IJSISE.2011.039182

Kokare M, Biswas PK, Chatterji BN: Texture image retrieval using rotated Wavelet Filters. Elsevier J. Pattern recognition letters 2007, 28: 1240–1249. 10.1016/j.patrec.2007.02.006

Kokare M, Biswas PK, Chatterji BN: Texture Image Retrieval Using New Rotated Complex Wavelet Filters. IEEE Trans. Systems, Man, and Cybernetics 2005, 33(6):1168–1178. 10.1109/TSMCB.2005.850176

Gonde AB, Maheshwari RP, Balasubramanian R: Multiscale Ridgelet Transform for Content Based Image Retrieval. IEEE Int. Advance Computing Conf, Patiala, India; 2010.

Subrahmanyam M, Maheshwari RP, Balasubramanian R: Expert system design using wavelet and color vocabulary trees for image retrieval. International Journal of Expert systems with applications. 2012, 39: 5104–5114. 10.1016/j.eswa.2011.11.029

Cheng-Hao Y, Shu-Yuan C: Retrieval of translated, rotated and scaled color textures. Pattern Recognition 2003, 36: 913–929. 10.1016/S0031-3203(02)00124-3

Ojala T, Pietikainen M, Harwood D: A comparative study of texture measures with classification based on feature distributions. Pattern Recognition 1996, 29(1):51–59. 10.1016/0031-3203(95)00067-4

Ojala T, Pietikainen M, Maenpaa T: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Transactions on Pattern Analysis and Machine Intelligence 2002, 24(7):971–987. 10.1109/TPAMI.2002.1017623

Guo Z, Zhang L, Zhang D: Rotation invariant texture classification using LBP variance with global matching. Pattern Recognition 2010, 43(3):706–719. 10.1016/j.patcog.2009.08.017

Zhenhua G, Zhang L, Zhang D: A completed modeling of local binary pattern operator for texture classification. IEEE Transactions on Image Processing 2010, 19(6):1657–1663. 10.1109/TIP.2010.2044957

Tan X, Triggs B: Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans. Image Proc. 2010, 19(6):1635–1650. 10.1109/TIP.2010.2042645

Subrahmanyam M, Maheshwari RP, Balasubramanian R: Directional local extrema patterns: a new descriptor for content based image retrieval. Int. J. Multimedia Information Retrieval 2012, 1(3):191–203. 10.1007/s13735-012-0008-2

Subrahmanyam M, Maheshwari RP, Balasubramanian R: Local Tetra Patterns: A New Feature Descriptor for Content Based Image Retrieval. IEEE Trans. Image Process 2012, 21(5):2874–2886. 10.1109/TIP.2012.2188809

Subrahmanyam M, Maheshwari RP, Balasubramanian R: Local Maximum Edge Binary Patterns: A New Descriptor for Image Retrieval and Object Tracking. Signal Processing 2012, 92: 1467–1479. 10.1016/j.sigpro.2011.12.005

Subrahmanyam M, Maheshwari RP, Balasubramanian R: Directional Binary Wavelet Patterns for Biomedical Image Indexing and Retrieval. Journal of Medical Systems 2012, 36(5):2865–2879. 10.1007/s10916-011-9764-4

Vipparthi S, Nagar SK: Directional Local Ternary Patterns for Multimedia Image Indexing and Retrieval. Int. J. Signal and Imaging Systems Engineering 2013, Article in press.

Takala V, Ahonen T, Pietikainen M: Block-Based Methods for Image Retrieval Using Local Binary Patterns. LNCS 2005, 3450: 882–891.

Marko H, Pietikainen M, Cordelia S: Description of interest regions with local binary patterns. Pattern Recognition 2009, 42: 425–436. 10.1016/j.patcog.2008.08.014

Baochang Z, Zhang L, Zhang D, Linlin S: Directional binary code with application to PolyU near-infrared face database. Pattern Recognition Letters 2010, 31: 2337–2344. 10.1016/j.patrec.2010.07.006

Available:. [Online].

Available:. [Online].

Competing interests

All authors declare that they have no competing interests.

Authors’ contributions

Both authors, VSK and NSK, worked together on the conception, implementation and writing of the proposed methods in this paper, and both read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Vipparthi, S.K., Nagar, S.K. Color Directional Local Quinary Patterns for Content Based Indexing and Retrieval. Hum. Cent. Comput. Inf. Sci. 4, 6 (2014). https://doi.org/10.1186/s13673-014-0006-x

DOI: https://doi.org/10.1186/s13673-014-0006-x