1. Introduction

1.1. Monitoring Urban Areas with Remote Sensing: A Copernicus Land Mission

Understanding the urban growth phenomenon is among the major issues that public services have to deal with. Today, more than half of the world population lives in urban areas, and it is estimated that this will reach up to two-thirds by 2025 [

1]. In the context of globalization and climate change, not only the urbanization process is fast, but the consequences due to the increase of imperviousness poses serious challenges related to the indication of risks (floods, heat wave events, pollution, etc.).

In Europe, the European Commission conducts projects to monitor urban areas in order to serve regional funding and policy making. Among those, the High Resolution Layer Imperviousness Degree (HRL IMD), as well as the Urban Atlas, which are part of the Copernicus Land missions, are based on remote sensing data. One of the objectives of the Copernicus program developed by the European Commission is to provide information products for monitoring the growth of urban areas in order to assess whether policies to reduce urban sprawl are effective.

Among those, the HRL IMD, as well as the Urban Atlas (

http://land.copernicus.eu/) are key products based on remote sensing data. The strength of these Copernicus Land products relies on the use of high resolution (spatial/temporal) remote sensing data, which provide homogeneity, repetitiveness and objectivity over the whole of Europe, whereas compiling available local and/or national datasets throughout Europe in order to compare cities would be difficult to achieve since the data would originate from different materials and methods.

The HRL IMD consists of a m grid covering all of Europe, including Turkey, and aims at representing the degree of imperviousness within each grid cell from 0% to 100%. This layer had already been produced in 2006, 2009 and 2012 and is key for monitoring urban sprawl. The 2015 update will soon be produced and will coincide with an unprecedented coverage of remote sensing data that can be used as input for its production. Previous updates were primarily based on a semi-automated approach (mainly due to the limited availability of input data), and the increased availability of remotely-sensed data can increase the level of automation to produce and update such a large dataset. In addition, because of cloud coverage in a large part of Europe, this amount of images can ensure cloud-free and uniform results at a spatial resolution less than or equal to 20 m to fit the HRL IMD specification. Large datasets are also critical to characterize vegetation phenology false positives to reduce potential confusion between induced agricultural bare soil and artificial surfaces. All of these contributions expected with the use of many data require the design of innovative and efficient methods to process such an amount of data in order to maintain Copernicus Land products’ data production costs down.

The substantial increase in remotely-sensed data availability is primarily due to the launch of Sentinel-2A in 2015, which was designed specifically to meet the needs of the Copernicus program. The full Sentinel-2 constellation (including Sentinel-2A and -2B) will have a revisit cycle of less than five days globally (whereas Landsat-8 is around 16 days) and will provide 13 bands from visible to Short Wave Infrared (SWIR) associated with three spatial resolutions from 10 to 60 m. The multi-temporal resolution ensures a better monitoring of land use and cover with better opportunities to obtain cloudless mosaics; the wide spectral resolution facilitates the thematic identification of land cover [

2], while the high spatial resolution allows for the identification of small objects, such as individual houses or landscapes structures [

3].

However, because the launch of Sentinel 2A is very recent, few studies exist on how it could be used for monitoring urban areas [

4]. Therefore, in this paper, a method based on exploiting Sentinel-2’s unique properties is proposed to update the Copernicus High Resolution Imperviousness Layer. However, the large amount of data generated raises methodological questions to deal with reliable, uncertain and contradictory information. These issues are discussed below.

1.2. Updating Copernicus Products: Change Detection and Data Fusion

Two main approaches are possible to detect structural changes in remote sensing data: directly detect changes from a pair of raw data or performing single classifications that are compared to highlight altered regions. The first approach is often preferred since a single process has the advantage of preventing errors from being accumulated through the two classification steps. However, in the context of the “online” updating of urban areas where classified regions in the first image are already available, the comparison with a new classification issued from the new image appears more rational. This is the process chosen in this paper. Here, in order to prevent from the accumulation or errors issued from independent classifications, we propose to accurately perform this step using specific data fusion rules. Before describing our approach, we first review briefly the main change detection approaches:

Image to image comparison: A naive way to detect changes is to rely on differences, either computed from the input images or on features derived from the images. Relying on the simple difference of raw data is limited (because of known problems related to differences in terms of acquisition), and a substantial number of techniques has been proposed to provide reliable measurements [

5,

6,

7,

8], for example statistical hypothesis tests [

9] or statistics on the spatial relations between points [

10,

11]. From the feature differences, the detection of changes can also be performed using supervised learning techniques [

12]. The reader can refer to [

13] for more details on associated approaches.

Post-classification comparison: Classification plays a key role in change detection, as it often enables one to refine the results. Strategies range from the classification of the various objects in the images [

14,

15,

16] (in this case, the change is immediate by comparison of the two classified data) to post-classifications or to classification of the change features [

17,

18,

19]. As for the first situation, the quality of the result is strongly related to the segmentation. In [

20], the authors have found that post-classification usually underestimates the areas of land cover change, but where the change was detected, it was overestimated in magnitude. Therefore, classifying change features seems a more appealing choice. In that context, one can mention the work of [

21], where the authors use an unsupervised classification mixed with a visual interpretation of aerial photographs to detect land cover changes. We refer the readers to the complete reviews in [

22,

23,

24,

25] for an overview of the change detection issue.

Though well performing techniques exist, only a few of them specifically take into account the problem of handling accurately the errors issued from independent classifications. In [

26], the authors propose to fuse the classification results in order to minimize the omission or the commission errors. The approach introduced in this paper relies on the same idea. More precisely, we use the Dempster–Shafer fusion theory, since this framework is especially adapted to deal with uncertainty that can be derived according to previous classification performances (this theory is presented in

Section 3.4). In addition, our process deals with cloud coverage, enabling a continuous growth of the area covered by our approach.

Classifications of single remote sensing images generally contain errors due to constraints originating from both the data and the algorithms used. This inaccuracy (or uncertainty associated with classification results) depends on:

the characteristics of the sensor:

the efficiency of the classification. Though in general a simple algorithm such as the maximum likelihood can be expected to be less efficient than a more complex one, such as Support Vector Machine (SVM) or Random Forest (RF), no general rule can be extracted;

the accuracy of the labeled data used to train and test the classifiers.

By taking into account all images issued from existing satellites, a large number of data can be acquired on a given area. However, because of the different nature of associated images, their classification may provide results associated with various levels of quality. Although selecting the best result among all available classifications would seem a rational approach, combining them by taking into account their qualities should make it possible to reach a higher level of accuracy. This is the idea behind the concept of data fusion. A large number of techniques is available to fuse data, and grouping them into families is a difficult task. Nevertheless, one can roughly distinguish the two main groups of techniques based on:

probability theory, which aims at determining the state of a variable given various observations. For these families, we mainly find the Kalman filter and other data assimilation techniques depending on the presence of models for sensors (see, for example, [

27,

28]);

evidence theory, where each decision is represented with a belief function associated with uncertainties (or in some approaches, a paradox). In these families, we find Dempster–Shafer Theory (DST) and its variants [

29] or the Dezert–Smarandache paradoxical approach [

30].

In a remote sensing context, the Dempster–Shafer Theory has mainly been applied to facilitate the decision process for the classification in urban environments [

31,

32], but also in other various contexts (sea breeze front identification, forestry monitoring or more generally, land cover [

33,

34,

35,

36]). Though widely used in remote sensing data, the DST has only rarely been applied in the context of large dataset classification (such as a country or continent) where information availability issues are critical (in terms of repetitiveness, cloud cover, acquisition window, etc). As will be shown later, this idea is explored in this paper where, by fusing individual classifications acquired at different dates, urban classifications are generated combining both an accuracy greater than the individual ones and cloud-free coverage. It is also proposed to combine Sentinel-2 with Landsat-8 images and to evaluate the potential of our approach as a multi-source fusion.

1.3. Highlights of the Paper

In this paper, it is proposed to exploit Sentinel-2 data to design a fully-automatic technique that updates imperviousness over Europe by ensuring:

a more precise quality than existing products;

an associated degree of confidence;

less sensitivity to cloud coverage.

As will be shown in this paper, the gain in terms of quality is due to the use of Dempster’s fusion rule that accurately deals with uncertainties issued from all classifications. It also enables one to give a degree of confidence related to all estimations. As for the last point, because of the cloud coverage all over member states’ territories, the production of cloud-free data requires a huge amount of images to ensure uniform results at a spatial resolution less than or equal to 20 m to fit the specification of Copernicus products.

2. Study Areas and Data

2.1. Study Areas

We choose to validate our approaches on two cities of different sizes with different urban planning policies: Prague (over one million inhabitants, 6900 km

2), the capital of the Czech Republic, and Rennes (over 200,000 inhabitants, 2500 km

2), a mid-sized city in France. The areas are delimited by the contour of the Copernicus Former Urban Areas (FUA) of the Urban Atlas dataset and are visible in

Figure 1. In practice, their internal organization is different: while Rennes is mainly composed of medium-sized buildings, individual houses and industrial/commercial areas, Prague is larger and embeds a large historical center connected with big avenues together with old and high level apartments and some modern skyscrapers in its periphery. Therefore, these characteristics are complementary and illustrate the variety of situations one encounters in practice.

2.2. Data

We took a pair of Sentinel-2 images for each study site, the time shift between the two acquisitions being about a week. This is too short to observe significant vegetation changes, but these two images remain interesting to overcome gap filling issues (mainly because of clouds).

To simulate more complete series of Sentinel-2, we also added some Landsat-8 images, since this sensor is at the moment the most similar in terms of specifications (spectral and spatial resolution). Landsat-8 has 11 bands from coastal blue to middle infra-red and has spatial resolution varying from 15 m to 60 m. Images acquired in spring (or early summer) and autumn were selected. All characteristics of the data are visible in

Table 1. This time period is more suitable to take into account different seasonal agricultural practices and therefore to evaluate the ability of our approach to detect urban changes while being insensible to agricultural ones. These Landsat images are first used as a comparison with the Sentinel-2 dataset. In a second step, they are included in the classification fusion process to assess the potentialities of Sentinel-2 in a multi-sensor approach.

3. Method

The overall approach is composed of six steps:

Data preparation: extracting the cloud mask and texture descriptors of all images

Supervised data learning: sample data from all images and build classification models

Map production: classify each datum from models of the previous stage

Classification fusion: combine all classifications

Change detection: detect changes on temporal data

Accuracy assessment: validate our products

The workflow is presented in

Figure 2, and each step is detailed in the followings sections.

3.1. Data Preparation

The data preparation step consists mainly of two operations: cloud mask detection and computation of the texture descriptor for each image. As they are classified independently, no specific spectral processing, nor radiometric corrections are required on each datum.

3.1.1. Cloud Mask Detection

We perform cloud detection in a semi-automatic way through

k-means classification on Band 1 (coastal blue), Band 8A (near-infrared) and Band 10 (water vapor), which are the most interesting to highlight cloud. Because of the differences in these bands, clouds and shadows fall in distinct clusters that are manually labeled as clouds or shadows. Here, we perform a manual labeling since we have few images, but in an operational context, automatic multi-temporal approaches could be used, such as MTCD (Multi-Temporal Cloud Detection [

37]). Their final shapes are delineated after a morphological opening in order to prevent from border effects.

This method has the advantages of being simple, not requiring atmospheric correction and providing efficient results. An example in

Figure 3 presents the result of the cloud mask delineation over Prague. Even though the cloud opacity is low, we observe in this image that clouds are accurately detected.

Concerning Landsat-8 images, cloud masks were directly downloaded from the USGS servers. These cloud masks rely on the Fmask (Function of mask) method, which is dedicated to Landsat missions and extracts both clouds and shadows [

38].

3.1.2. Computation of the Texture Descriptor

To characterize the content of high or very high resolution remote sensing images, texture descriptors are powerful, especially in urban areas, as proven by numerous studies [

39,

40,

41,

42,

43,

44]. Among the existing techniques, the so-called PANTEXmethod [

44], which relies on the analysis of the Grey Levels’ Coocurrence Matrices (GLCM) and the computation of associated indexes [

45], has the properties of being simple and efficient. To be invariant by rotation and less sensitive to small edges that appear in rural or natural landscapes, PANTEX keeps the minimum value of the energy computed on GLCM in various directions (10 in practice [

44]). This enables one to highlight local contrast variation, which is a particularly crucial criteria in an urban area. PANTEX has already proven its efficiency in urban areas at a global level [

46], and an example of PANTEX criteria is visible in

Figure 4. In practice, PANTEX criteria are computed in the blue band (10-m resolution) in the case of Sentinel-2 and in the panchromatic one (15 m resolution) with Landsat-8.

3.2. Supervised Data Learning

This supervised data learning step aims at sampling data and building a classification model per image that will be applied in the further step (

Section 3.3). Two classes, urban areas and the rest of the landscape, are foreseen in the classification output. To extract learning points inside urban areas, we use the Copernicus High Resolution Layer Imperviousness Degree 2012 (HRL IMD 2012) as a reference. It is indeed assumed that urban areas in 2012 are still labeled as urban regions in the future. Indeed, urban areas grow slowly and on some small areas compared to the entire arable land available. More quantitatively, given that urban areas represent just over 1% of the overall land in Europe and they grew by about 0.6% per year between 1990 and 2000 [

47], the probability of sampling changes remains below 2%. Changes selected in a random sampling can then be considered as noise in the data source and should be neglected. This enables one to provide a large number of samples over the entire area on which a classification model can be designed.

In practice, a maximum sampling of 1,000,000 pixels over the image is performed. The number of samples is not equal for each class, but depends on their proportion in the image. For example, given that the urban areas represent about 4% of the image, the distribution of samples is about 4% in the ‘urban area’ class and 96% for the ‘non-urban’ one. This is more adapted to represent the different spectral characteristics of the rural landscape’s classes (vegetation, bare soil, water, forest).

The classification algorithm used for this application is bagging trees [

48], which is not sensitive to a large number of training data. Bagging is part of the ensemble methods, which also include random forest and boosting. Ensemble methods have already proven their efficiency for the processing of remote sensing images, in particular in urban environments [

44,

49]. They constitute a set of techniques for improving the performance of simple decision trees. The global idea is to perform multiple random samplings and to construct separate tree models. Each tree provides a criterion related to the fact that the input data belong to the urban class or not. Then, a choice procedure on the basis of each result is applied (average in general).

Though many other classification techniques could be used, bagging remains a suitable choice to deal with a large number of observations (as well as neural networks, for example), since their calibration is fast. Moreover, with such an approach, it is also possible to easily evaluate the discrimination power of each input variable by evaluating their impact on each node, which is of prime importance to assess the discriminative power of Sentinel-2 bands [

2].

All spectral bands are kept in the feature vector on which we add PANTEX criteria. Features are then resampled by nearest-neighbors at ( m) with respect to the specifications of the Copernicus IMD HRL product.

3.3. Map Production

Each image has been classified using its own bagging tree model and validated by cross-validation. Therefore, in each pixel

, a series of criteria

(corresponding to the concatenation of the results of all models for each time

t) that belongs to the urban thematic class is available. A global quality criterion can then be estimated to evaluate its accuracy and provide an overall uncertainty for each date. Based on the kappa index, the global uncertainty

at time

t is computed as:

where

is the kappa index of the classification at time

t. With this criterion, a good classification will generate low global uncertainty and vice versa. This ensemble of criteria associated with their global uncertainty needs now to be fused in each pixel to generate a global map. This is the scope of the next section.

3.4. Classification Fusion

In this step, each pixel contains a value related to its similarity to the urban class associated with a global uncertainty. We now need to synthesize this information to provide a unique map. To this end, we rely on evidence theory and in particular on the Dempster–Shafer Theory of evidence (DST). The DST is based on a Bayesian approach and fuses a set of mass functions issued from various sources of observations associated with the belief on some hypotheses. A key advantage is that uncertainty (union of hypotheses) is accurately managed with the Dempster’s fusion rule. Applied to our classification problem, let

and

be the hypotheses that a given pixel belongs to the urban class or not. The uncertainty is formalized as the union

. For each time step

t, if one computes three belief functions

,

and

, such that

and:

is the belief that the corresponding pixel belongs to the urban class;

is the belief that the corresponding pixel does not belong to the urban class;

is overall uncertainty;

it is possible to combine two sources of information at times

and

(associated with mass functions

and

, respectively) using Dempster’s fusion rule. This is given, by assumption

of the powerset

, by:

Roughly, the numerator combines the4 information of the two sources

and

in hypothesis

, while the denominator

is related to the conflict (its second term is 0 if sources are in accordance and grow with conflict). As this fusion rule is associative, to combine

N sources of information, we start by fusing the two first ones, then fusing the result with the third one, and so on (this reads:

, and only pairwise fusions defined in Equation (

2) are involved.).

To apply this fusion rule to the classification of urban patterns, we need to define the mass functions

,

and

at each time

t. Let us remind that, after the classification step, each pixel contains in each time

t a value

related to its similarity to the urban class. In addition to this value, a global accuracy

(see Equation (

1)) is also available in each time step.

The way we get

,

and

in

is therefore a normalization step:

In practice, to accurately deal with clouds, each pixel identified in

Section 3.1 as a cloud has uncertainty

equal to 1.

Finally, once fused, the final decision of the classification between urban/non-urban areas is chosen as the maximum between and in each pixel (with the fused mass functions).

3.5. Change Detection and Imperviousness Degree Computation

Each time a new datum is available, a change detection can be performed by the comparison of classifications. This enables one to easily update databases, as will be shown in the experimental part on the Copernicus HRL IMD 2012, which has been validated by the EEA (European Environment Agency).

The derivation of imperviousness degree (1% to 100%) is produced using an automatic algorithm based on a calibrated Normalized Difference Vegetation Index (NDVI). A description of the Copernicus Imperviousness Layer methodology was described by [

50] for the 2009 update and by [

51] for the 2012 update. Similar methods were also applied in the USA for the development of the National Land Cover database [

52].

3.6. Change Detection Validation

Change detection validation is made from a stratified sampling on the change stratum and unchanged stratum.

A density threshold of 30% was used to derive the built-up layer from the imperviousness layer [

53]. This was not intended to be a separate product, but instead was calculated for the verification process only, because density products cannot be verified.

Based on the assumption that urbanized areas spread more than they appear randomly in the landscape, sampling unchanged areas was done in a 250-m buffer zone along urbanized areas.

In practice, we follow the same process as the Copernicus products to validate our results [

54]: 200 samples were selected including 100 samples in the change stratum and 100 samples in the unchanged stratum. Each sample represents a

m polygon in which a manual interpretation was performed. The assigned class matches the major land use surface inside a polygon.

4. Results and Discussion

The overall processing chain presented in this paper has been applied on the Sentinel-2 and Landsat-8 images for the two study areas. Independent classifications have been performed in each image, and the fusion has been applied in two different ways:

Multitemporal fusion: classifications issued from images of the same sensor have been fused. This yields two results: fusion with Sentinel-2 and fusion with Landsat-8;

Multi-source fusion: all classifications have been fused to provide a single classification.

Results are depicted in

Figure 5 and

Figure 6 for Prague and Rennes, respectively, and quantitative criteria are presented in

Table 2 and

Table 3, both for individual classifications and fusions of them. Because of cloud coverage, results cannot be provided in each pixel, and we also show in these tables the percentage of data for which estimations are available.

From these table, it is worth noting that overall accuracies and kappa indexes are good in all situations. This first reveals the ability of our texture index based on PANTEX to accurately extract urban areas. However, looking at individual classifications, we observe that overall urban areas are not completely covered because of image properties (spatial coordinates) and cloud coverage (third line of

Figure 5 and

Figure 6). When fusing classifications issued from images of the same sensor (fourth line), one observes more consistent maps covering the entire region. The fusion process enables one not only to provide more complete maps, but as shown on

Table 2 and

Table 3, uncertainty is also reduced by combining in an efficient way all classifications, yielding overall accuracies and kappa index that are higher. The same observation holds when fusing images of various sources (last line). This demonstrates the ability of Dempster–Shafer theory to optimally combine various classifications.

In the following sections, we go into more detail, and we show the benefits of the approach to classify Sentinel-2 images and its utility to update large datasets, such as Copernicus HRL IMD.

4.1. Variable Importance of Single Image Classifications

In

Figure 7, we have plotted the contribution of the different bands (all spectral values and PANTEX criteria) in the classification models of each image. The impact of each variable is evaluated during the tree construction through the following principle: a given node associated with a specific variable is split (using a threshold on this variable) if it is considered as heterogeneous. Once split, if the children nodes are not heterogeneous, therefore the contribution of this variable is significant, since it enables one to separate inhomogeneous groups into homogeneous ones. More precisely, each importance is estimated based on the differences of impurity between parent and children nodes. More details are given in [

55].

Several remarks can be made from

Figure 7: as one can observe with the significant contribution of PANTEX, texture plays a key role in each classification model. This is all the more true for Sentinel-2 images whose spatial resolution is higher than Landsat-8, and therefore, PANTEX is more sensitive in urban areas (since it relies on local variations of spectral values). To illustrate this,

Figure 8 presents PANTEX maps extracted on Sentinel-2 and Landsat-8, and we observe sharper delineation of urban areas from Sentinel-2’s PANTEX band.

To explain the relative better efficiency of the City of Prague compared to Rennes, let us remind that this region of France is characterized by a very fragmented agricultural landscape with parcels of small sizes, unlike the Prague area, whose agricultural crops are larger. Therefore, some rural areas are more similar in terms of texture than urban ones, and this reduces the rule of PANTEX. Nevertheless, the overall accuracy is still very good and even larger for the city of Rennes.

As for the spectral bands,

Figure 7 shows that the near-infrared (B8, B8A Sentinel-2 and B5 for Landsat-8) and blue (B01, B02) bands are particularly valuable to extract urban areas. These bands are then followed by the Short Wave Infrared (SWIR) bands (B10, B11, B12 for Sentinel-2 and B6 for Landsat). These most important Sentinel-2 bands are in accordance with [

2]. Man-made features have low reflectance in the near-infrared and can be separated from the vegetation. They also have strong reflectance in the blue and SWIR that can separate them from the rest of the agriculture or semi-natural features.

4.2. Benefits of Classification Fusion

The fusion process enables one to provide a cloud-free classification and also to improve the overall accuracy, as shown in

Table 2 and

Table 3. This illustrates the complementarity of available sources:

Multitemporal data (one of the main pros of Sentinel-2) enable one to consider different phenological stages of the agricultural landscape and to limit commission errors, such as bare soil and other low chlorophyll activity land covers. As observed in

Figure 9, the first image shows a set of bare soils in which urban membership criteria are high. On the contrary, the second image shows the set of parcels with high chlorophyll activity and low membership values. The fusion of both criteria results in low membership values. These differences are highlighted by a high value in the conflict map

of Equation (

2) (

Figure 9f). A comparison with the mean of classifications shows that urban estimates are higher and easily separable (

Figure 9g).

Multi-source fusion (combination of Landsat-8 and Sentinel-2 classifications) enhances also the geometric accuracy from 30 m to 10 m. As presented in

Figure 10, the delineation of the urban areas is more sharp on Sentinel-2 image. Fusion of both classifications provides a sharp delineation with high membership values. The conflict map shows also that differences between images were mainly located in the edges of the urban areas.

Figure 10g also shows that classification fusion provides sharper edges than the mean of classifications.

4.3. Application to Change Detection and Copernicus Products’ Updates

The resulting classifications were then compared to the Copernicus HRL IMD 2012 to detect changes between 2012 and 2015. The change maps were validated with an overall accuracy over 94.75% and a kappa index over 0.90 (

Table 4 and

Table 5). Commission errors are mainly due to the detection of water (lake, reservoir) or long-term bare soil. On the contrary, omission errors correspond to some mixed urban and vegetation patterns, such as residential subdivisions, where the vegetation is particularly dense.

We illustrate in

Figure 11 and

Figure 12 the changes observed between our product (issued from multi-temporal multi-source fusion of Sentinel-2 and Landsat-8) and the Copernicus HRL IMD 2012 derived from it. As one can observe, changes are mainly identified as pixel blocks along the urban areas, illustrating the urban growth.

5. Conclusions

In this work, we have proposed an exploitation of Sentinel-2 images to monitor urban areas and investigate their utility to update Copernicus Land products. The proposed approach takes advantage of the regular availability of new images provided by Sentinel-2 to reduce the uncertainty in the detection of urban areas and the estimation of imperviousness. It relies on several separate image classifications that are fused using the Dempster–Shafer theory. The regular acquisition of images feeds our processing chain to enhance the classification accuracy and provide a cloud cover-free product. In practice, in addition to all spectral bands, a texture index (PANTEX) has been added as a feature descriptor to accurately represent urban areas.

The approach has been evaluated on two large urban areas (Prague in the Czech Republic and Rennes in France) following the Copernicus process. Results exhibit very good accuracies with a kappa index over . This illustrates the ability of Sentinel-2, both in terms of spectral and spatial (through the texture index) resolutions, to detect urban areas.

We also have successfully combined Sentinel-2 and Landsat-8 data to show that Sentinel-2 images increase the geometric accuracy of Landsat-8 classifications, and conversely, Landsat-8 increases the Sentinel-2 repetitiveness and its thematic accuracy.

Therefore, the presented approach is applicable both to heterogeneous landscapes and heterogeneous data. It is also able to reach the needs of operational projects, such as the Copernicus High Resolution Layer Imperviousness, and can be conducted on very large areas, such as Europe. Though applied to urban areas, the methodology can be used in any other land cover classification tasks.

Acknowledgments

This work has been partly supported by the french program ANR JCJC ASTERIX #13-JS02-0005-01.

Author Contributions

Authors contributed equally to this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- United Nations. World Population Prospects: The 2015 Revision; Technical Report; United Nations: New York, NY, USA, 2015. [Google Scholar]

- Immitzer, M.; Vuolo, F.; Atzberger, C. First experience with Sentinel-2 data for crop and tree species classifications in Central Europe. Remote Sens. 2016, 8, 166. [Google Scholar] [CrossRef]

- Radoux, J.; Chomé, G.; Jacques, D.; Waldner, F.; Bellemans, N.; Matton, N.; Lamarche, C.; D’Andrimont, R.; Defourny, P. Sentinel-2’s potential for sub-pixel landscape feature detection. Remote Sens. 2016, 8, 488. [Google Scholar] [CrossRef]

- Pesaresi, M.; Corbane, C.; Julea, A.; Florczyk, A.; Syrris, V.; Soille, P. Assessment of the added-value of Sentinel-2 for detecting built-up areas. Remote Sens. 2016, 8, 299. [Google Scholar] [CrossRef] [Green Version]

- Bovolo, F.; Bruzzone, L. A theoretical framework for unsupervised change detection based on change vector analysis in the polar domain. IEEE Trans. Geosci. Remote Sens. 2007, 45, 218–236. [Google Scholar] [CrossRef]

- Gong, X.; Corpetti, T. Adaptive window size estimation in unsupervised change detection. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 6, 991–1003. [Google Scholar] [CrossRef]

- Inglada, J.; Mercier, G. A new statistical similarity measure for change detection in multitemporal sAR Images and its extension to multiscale change analysis. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1432–1445. [Google Scholar] [CrossRef]

- Mercier, G.; Derrode, S.; Trouvé, E.; Bombrun, L. Chapter 5 Change detection. In Multivariate Image Processing: Methods and Applications; Chanussot, J., Chehdi, K., Collet, C., Eds.; Wiley-ISTE: London, UK, 2009; pp. 109–152. [Google Scholar]

- Hsu, Y.; Nagel, H.; Rekers, G. New likelihood test methods for change detection in image sequences. Comput. Vis. Graph. Image Process. 1984, 26, 73–106. [Google Scholar] [CrossRef]

- Aach, T.; Kaup, A.; Mester, R. Statistical model-based change detection in moving video. Signal Process. 1993, 31, 165–180. [Google Scholar] [CrossRef]

- Kervrann, C.; Boulanger, J.; Pecot, T.; Perez, P. Discriminant random field and patch-based redundancy analysis for image change detection. In Proceedings of the 2009 IEEE International Workshop on Machine Learning for Signal Processing, Grenoble, France, 1–4 September 2009; pp. 1–6.

- He, L.; Laptev, I. Robust change detection in dense urban areas via SVM classifier. In Proceedings of the 2009 Joint Urban Remote Sensing Event, Shanghai, China, 20–22 May 2009; pp. 1–5.

- Ridd, M.K.; Liu, J. A comparison of four algorithms for change detection in an urban environment. Remote Sens. Environ. 1998, 63, 95–100. [Google Scholar] [CrossRef]

- Blaschke, T.; Lang, S.; Hay, G. Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Springer: Berlin, Germany; Heidelberg, Germany, 2008. [Google Scholar]

- Lefebvre, A.; Corpetti, T.; Hubert-Moy, L. A measure for change detection in very high resolution remote sensing images based on texture analysis. In Proceedings of the 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 1697–1700.

- Niemeyer, I.; Marpu, P.; Nussbaum, S. Change detection using object features. In Object-Based Image Analysis; Blaschke, T., Lang, S., Hay, G., Eds.; Lecture Notes in Geoinformation and Cartography; Springer: Berlin, Germany; Heidelberg, Germany, 2008; pp. 185–201. [Google Scholar]

- Celik, T. Change detection in satellite images using a genetic algorithm approach. IEEE Geosci. Remote Sens. Lett. 2010, 7, 386–390. [Google Scholar] [CrossRef]

- Moser, G.; Serpico, S. Unsupervised change detection from multichannel SAR data by Markovian data fusion. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2114–2128. [Google Scholar] [CrossRef]

- Bovolo, F.; Bruzzone, L.; Marconcini, M. A novel approach to unsupervised change detection based on a semisupervised SVM and a similarity measure. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2070–2082. [Google Scholar] [CrossRef]

- Petit, C.; Scudder, T.; Lambin, E. Quantifying processes of land-cover change by remote sensing: Resettlement and rapid land-cover changes in south-eastern Zambia. Int. J. Remote Sens. 2001, 22, 3435–3456. [Google Scholar] [CrossRef]

- Silipaswan, C.; Verbyla, D.; Mcguire, A. Land cover change on the Seward Peninsula: The use of remote sensing to evaluate the potential influences of climate warming on historical vegetation dynamics. Can. J. Remote Sens. 2001, 27, 542–554. [Google Scholar] [CrossRef]

- Lu, D.; Mausel, P.; Brondizio, E.; Moran, E. Change detection techniques. Int. J. Remote Sens. 2004, 25, 2365–2407. [Google Scholar] [CrossRef]

- Radke, R.; Andra, S.; Al-Kofahi, O.; Roysam, B. Image change detection algorithms: A systematic survey. IEEE Trans. Image Process. 2005, 14, 294–307. [Google Scholar] [CrossRef] [PubMed]

- Serpico, S.; Bruzzone, L. Information processing for remote sensing. In Change Detection; Chen, C.H., Ed.; World Scientific Publishing: Singapore, 1999; pp. 319–336. [Google Scholar]

- Singh, A. Digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003. [Google Scholar] [CrossRef]

- Du, P.; Liu, S.; Gamba, P.; Tan, K.; Xia, J. Fusion of difference images for change detection over urban areas. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2012, 5, 1076–1086. [Google Scholar] [CrossRef]

- Gan, Q.; Harris, C.J. Comparison of two measurement fusion methods for Kalman-filter-based multisensor data fusion. IEEE Trans. Aerosp. Electron. Syst. 2001, 37, 273–279. [Google Scholar] [CrossRef]

- Sun, S.L.; Deng, Z.L. Multi-sensor optimal information fusion Kalman filter. Automatica 2004, 40, 1017–1023. [Google Scholar] [CrossRef]

- Shafer, G. Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976. [Google Scholar]

- Smarandache, F.; Dezert, J. Advances and Applications of DSmT for Information Fusion (Collected Works); Infinite Study; American Research Press: Rehoboth, NM, USA, 2006; Volume 2. [Google Scholar]

- Le Hégarat-Mascle, S.; Seltz, R.; Hubert-Moy, L.; Corgne, S.; Stach, N. Performance of change detection using remotely sensed data and evidential fusion: Comparison of three cases of application. Int. J. Remote Sens. 2006, 27, 3515–3532. [Google Scholar] [CrossRef]

- Lu, Y.; Trinder, J.; Kubik, K. Automatic building detection using the Dempster-Shafer algorithm. Photogramm. Eng. Remote Sens. 2006, 72, 395–403. [Google Scholar] [CrossRef]

- Corpetti, T.; Planchon, O. Front detection on satellite images based on wavelet and evidence theory: Application to the sea breeze fronts. Remote Sens. Environ. 2011, 115, 306–324. [Google Scholar] [CrossRef]

- Mora, B.; Fournier, R.A.; Fouher, S. Mapping the health of mature deciduous forest stands by fusing multi-source geospatial data with Dempster’s combination rule. Int. J. Remote Sens. 2012, 33, 1139–1163. [Google Scholar] [CrossRef]

- Rottensteiner, F.; Trinder, J.; Clode, S.; Kubik, K. Using the Dempster–Shafer method for the fusion of LIDAR data and multi-spectral images for building detection. Inf. Fusion 2005, 6, 283–300. [Google Scholar] [CrossRef]

- Le Hegarat-Mascle, S.; Bloch, I.; Vidal-Madjar, D. Application of Dempster-Shafer evidence theory to unsupervised classification in multi-source remote sensing. IEEE Trans. Geosci. Remote Sens. 1997, 35, 1018–1031. [Google Scholar] [CrossRef]

- Hagolle, O.; Huc, M.; Pascual, D.V.; Dedieu, G. A multi-temporal method for cloud detection, applied to FORMOSAT-2, VENμS, LANDSAT and SENTINEL-2 images. Remote Sens. Environ. 2010, 114, 1747–1755. [Google Scholar] [CrossRef] [Green Version]

- Zhu, Z.; Woodcock, C.E. Object-based cloud and cloud shadow detection in Landsat imagery. Remote Sens. Environ. 2012, 118, 83–94. [Google Scholar] [CrossRef]

- Lefebvre, A.; Corpetti, T.; Hubert-Moy, L. Object-oriented approach and texture analysis for change detection in very high resolution images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 7–11 July 2008; pp. 663–666.

- Smits, P.; Annoni, A. Updating land-cover maps by using texture information from very high-resolution space-borne imagery. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1244–1254. [Google Scholar] [CrossRef]

- Puissant, A.; Hirsch, J.; Weber, C. The utility of texture analysis to improve per-pixel classification for high to very high spatial resolution imagery. Int. J. Remote Sens. 2005, 26, 733–745. [Google Scholar] [CrossRef]

- Gong, P.; Howarth, P. The use of structural information for improving land-cover classification accuracies at the rural-urban fringe. Photogramm. Eng. Remote Sens. 1990, 56, 67–73. [Google Scholar]

- Herold, M.; Liu, X.; Clarke, K. Spatial metrics and image texture for mapping urban land use. Photogramm. Eng. Remote Sens. 2003, 69, 991–1001. [Google Scholar] [CrossRef]

- Pesaresi, M.; Gerhardinger, A.; Kayitakire, F. A robust built-up area presence index by anisotropic rotation-invariant textural measure. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2008, 1, 180–192. [Google Scholar] [CrossRef]

- Haralick, R.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybernet. 1973, 3, 610–621. [Google Scholar] [CrossRef]

- Pesaresi, M.; Blaes, X.; Ehrlich, D.; Ferri, S.; Gueguen, L.; Halkia, M.; Kauffmann, M.; Kemper, T.; Marin-Herrera, M.A.; Ouzounis, G.K.; et al. A global human settlement layer from optical HR/VHR RS data: Concept and first results. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 6, 2102–2131. [Google Scholar] [CrossRef]

- EEA. Urban Sprawl in Europe. The Ignored Challenge; Technical Report 10; European Environment Agency: Copenhagen, Denmark, 2006. [Google Scholar]

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 140, 123–140. [Google Scholar] [CrossRef]

- Puissant, A.; Rougier, S.; Stumpf, A. Object-oriented mapping of urban trees using Random Forest classifiers. Int. J. Appl. Earth Observ. Geoinf. 2014, 26, 235–245. [Google Scholar] [CrossRef]

- Gangkofner, U.; Weichselbaum, J.; Kuntz, S.; Brodsky, L.; Larsson, K.; de Pasquale, V. Update of the European high-resolution layer of built-up areas and soil sealing 2006 with Image2009 data. In Proceedings of the 30th EARSeL Symposium: Remote Sensing for Science, Education and Culture, Paris, France, 31 May–4 June 2010.

- Lefebvre, A.; Beaugendre, N.; Pennec, A.; Sannier, C.; Corpetti, T. Using data fusion to update built-up areas of the 2012 European High-Resolution Layer Imperviousness. In Proceedings of the 33rd EARSeL Symposium, Matera, Italy, 3–6 June 2013.

- Xian, G.; Homer, C. Updating the 2001 national land cover database impervious surface products to 2006 using Landsat imagery change detection methods. Remote Sens. Environ. 2010, 114, 1676–1686. [Google Scholar] [CrossRef]

- Sannier, C.; Gallego, J.; Dahmer, J.; Smith, G.; Dufourmont, H.; Pennec, A. Validation of Copernicus high resolution layer on imperviousness degree for 2006, 2009 and 2012. In Proceedings of the International Symposium on Spatial Accuracy Assessment in Natural Resources and Environmental Sciences, Montpellier, France, 5–8 July 2016.

- Maucha, G.; Büttner, G.; Kosztra, B. Final Draft European Validation of GMES FTS Soil Sealing Enhancement Data Project Manager; Technical Report; European Environment Agency: Copenhagen, Denmark, 2010. [Google Scholar]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

Figure 1.

Presentation of the study areas.

Figure 1.

Presentation of the study areas.

Figure 2.

Block diagram of the workflow. Different time steps are noted ... ; stands for “Overall Accuracy”; K is the kappa index; CAPI stands for Computer-Assisted Photo Interpretation.

Figure 2.

Block diagram of the workflow. Different time steps are noted ... ; stands for “Overall Accuracy”; K is the kappa index; CAPI stands for Computer-Assisted Photo Interpretation.

Figure 3.

Example of cloud mask detection on a Sentinel-2 image: (a) original Sentinel-2 image; (b) detected cloud mask.

Figure 3.

Example of cloud mask detection on a Sentinel-2 image: (a) original Sentinel-2 image; (b) detected cloud mask.

Figure 4.

PANTEXcriterion computed on Sentinel-2 image: (a) original Sentinel-2 image; (b) minimum PANTEX value. As one can observe, local contrasts issued from urban areas are accurately extracted.

Figure 4.

PANTEXcriterion computed on Sentinel-2 image: (a) original Sentinel-2 image; (b) minimum PANTEX value. As one can observe, local contrasts issued from urban areas are accurately extracted.

Figure 5.

Results at various steps of the approach for Prague city.

Figure 5.

Results at various steps of the approach for Prague city.

Figure 6.

Results at various steps of the approach for Rennes city.

Figure 6.

Results at various steps of the approach for Rennes city.

Figure 7.

Contribution of the various features in the classification models for the Sentinel-2 and Landsat-8 images. As one can observe, PANTEX indexes have a significant contribution compared to spectral values.

Figure 7.

Contribution of the various features in the classification models for the Sentinel-2 and Landsat-8 images. As one can observe, PANTEX indexes have a significant contribution compared to spectral values.

Figure 8.

Comparison of S2and L8PANTEX bands: (a) subset of Sentinel-2 image; (b) Sentinel-2’s PANTEX band; (c) Landsat-8’s PANTEX band.

Figure 8.

Comparison of S2and L8PANTEX bands: (a) subset of Sentinel-2 image; (b) Sentinel-2’s PANTEX band; (c) Landsat-8’s PANTEX band.

Figure 9.

Benefits of the multi-temporal fusion: classification fusion promotes common estimates, whereas the mean of classifications provides less obvious estimation between urban areas and other land covers. (a) Landsat-8 image; (b) Image classification (OA = 97.3%); (c) Landsat-8 image; (d) Image classification (OA = 97.6%); (e) Classification fusion (97.6%); (f) Classification’s conflict; (g) Mean of classifications).

Figure 9.

Benefits of the multi-temporal fusion: classification fusion promotes common estimates, whereas the mean of classifications provides less obvious estimation between urban areas and other land covers. (a) Landsat-8 image; (b) Image classification (OA = 97.3%); (c) Landsat-8 image; (d) Image classification (OA = 97.6%); (e) Classification fusion (97.6%); (f) Classification’s conflict; (g) Mean of classifications).

Figure 10.

Benefits of the multi-source fusion: classification fusion provides sharpened edges, whereas the mean of classifications is more inaccurate. (a) Landsat-8 image; (b) Image classification (OA = 97.3%); (c) Landsat-8 image; (d) Image classification (OA = 97.3%); (e) Classification fusion (97.9%); (f) Classification’s conflict; (g) Mean of classifications).

Figure 10.

Benefits of the multi-source fusion: classification fusion provides sharpened edges, whereas the mean of classifications is more inaccurate. (a) Landsat-8 image; (b) Image classification (OA = 97.3%); (c) Landsat-8 image; (d) Image classification (OA = 97.3%); (e) Classification fusion (97.9%); (f) Classification’s conflict; (g) Mean of classifications).

Figure 11.

2012 to 2015 change detection map in Prague. Changes have been detected by comparison of the Copernicus High Resolution Layer Imperviousness Degree (HRL IMD) (in 2012) and our product (in 2015). (a) The entire area; (b) a zoom of the black squared area.

Figure 11.

2012 to 2015 change detection map in Prague. Changes have been detected by comparison of the Copernicus High Resolution Layer Imperviousness Degree (HRL IMD) (in 2012) and our product (in 2015). (a) The entire area; (b) a zoom of the black squared area.

Figure 12.

2012 to 2015 change detection map in Rennes. Changes have been detected by comparison of the Copernicus HRL IMD (in 2012) and our product (in 2015). (a) The entire area; (b) a zoom of the black squared area.

Figure 12.

2012 to 2015 change detection map in Rennes. Changes have been detected by comparison of the Copernicus HRL IMD (in 2012) and our product (in 2015). (a) The entire area; (b) a zoom of the black squared area.

Figure 13.

Detection of new commercial or industrial areas in Prague (yellow: changes; dark grey: HRL IMD 2012 >30%; light grey: HRL IMD 2012 <30%). (a) Google Earth image (2012); (b) Sentinel-2 image (2015); (c) Change map; (d) HRL IMD 2015.

Figure 13.

Detection of new commercial or industrial areas in Prague (yellow: changes; dark grey: HRL IMD 2012 >30%; light grey: HRL IMD 2012 <30%). (a) Google Earth image (2012); (b) Sentinel-2 image (2015); (c) Change map; (d) HRL IMD 2015.

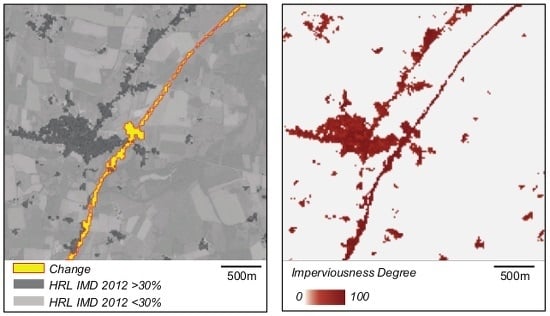

Figure 14.

Detection of a new motorway in Rennes (yellow: changes; dark grey: HRL IMD 2012 >30%; light grey: HRL IMD 2012 <30%). (a) Google Earth image (2012); (b) Sentinel-2 image (2015); (c) Change map; (d) HRL IMD 2015.

Figure 14.

Detection of a new motorway in Rennes (yellow: changes; dark grey: HRL IMD 2012 >30%; light grey: HRL IMD 2012 <30%). (a) Google Earth image (2012); (b) Sentinel-2 image (2015); (c) Change map; (d) HRL IMD 2015.

Figure 15.

Detection of new subdivision in Rennes (yellow: changes; dark grey: HRL IMD 2010 >30%; light grey: HRL IMD 2012 <30%). (a) Google Earth image (2012); (b) Sentinel-2 image (2015); (c) Change map; (d) HRL IMD 2015.

Figure 15.

Detection of new subdivision in Rennes (yellow: changes; dark grey: HRL IMD 2010 >30%; light grey: HRL IMD 2012 <30%). (a) Google Earth image (2012); (b) Sentinel-2 image (2015); (c) Change map; (d) HRL IMD 2015.

Table 1.

List of input images.

Table 1.

List of input images.

| # | Prague | # | Rennes |

|---|

| 1 | 12/10/2015- Landsat 8 | 1 | 09/09/2015 - Landsat 8 |

| 2 | 30/08/2015 - Sentinel-2A | 2 | 28/08/2015 - Sentinel-2A |

| 3 | 13/08/2015 - Sentinel-2A | 3 | 21/08/2015 - Sentinel-2A |

| 4 | 17/07/2015 - Landsat 8 | 4 | 11/05/2015 - Landsat 8 |

Table 2.

Intermediate result for Prague.

Table 2.

Intermediate result for Prague.

| Classification Sensor (date) | Overall Accuracy | Uncertainty (1-Kappa) | Cloud Coverage |

|---|

| Landsat-8 (17/07/2015) | 97.5% | 0.25 | 21% |

| Sentinel-2 (13/08/2015) | 97.3% | 0.27 | 17% |

| Sentinel-2 (30/08/2015) | 97.2% | 0.28 | 7% |

| Landsat-8 (12/10/2015) | 97.3% | 0.28 | 24% |

| Fusion of Landsat-8 classifications | 97.6% | 0.24 | 0% |

| Fusion of Sentinel-2 classifications | 97.3% | 0.23 | 0% |

| Fusion of Sentinel-2 and Landsat-8 classifications | 97.8% | 0.22 | 0% |

Table 3.

Intermediate result for Rennes.

Table 3.

Intermediate result for Rennes.

| Classification Sensor (date) | Overall Accuracy | Uncertainty (1-Kappa) | Cloud Coverage |

|---|

| Landsat-8 (11/05/2015) | 97.7% | 0.24 | 9% |

| Sentinel-2 (21/08/2015) | 97.3% | 0.27 | 0% |

| Sentinel-2 (28/08/2015) | 97.1% | 0.30 | 44% |

| Landsat-8 (09/09/2015) | 97.7% | 0.24 | 3% |

| Fusion of Landsat-8 classifications | 97.8% | 0.23 | 0% |

| Fusion of Sentinel-2 classifications | 97.3% | 0.26 | 0% |

| Fusion of Sentinel-2 and Landsat-8 classifications | 97.9% | 0.21 | 0% |

Table 4.

Change detection validation for Prague (OA = 95%; K = 0.90).

Table 4.

Change detection validation for Prague (OA = 95%; K = 0.90).

| | | Reference | |

|---|

| | | No Change | Change | Total |

|---|

| Classification | No Change | 194 | 14 | 208 |

| Change | 6 | 186 | 192 |

| Total | 200 | 200 | 400 |

Table 5.

Change detection validation for Rennes (OA = 94.75%; K = 0.90).

Table 5.

Change detection validation for Rennes (OA = 94.75%; K = 0.90).

| | | Reference | |

|---|

| | | No Change | Change | Total |

|---|

| Classification | No Change | 183 | 4 | 187 |

| Change | 17 | 166 | 213 |

| Total | 200 | 200 | 400 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).