Water Surface Mapping from Sentinel-1 Imagery Based on Attention-UNet3+: A Case Study of Poyang Lake Region

Abstract

1. Introduction

- (1) To address the low identification accuracy in narrow waters, full-scale skip connections are introduced. These connections transfer and reuse features at different scales during decoding, integrating low-level details and high-level semantics in the feature map, which helps the network extract features in narrow waters.

- (2) A spatial attention mechanism is used to suppress false alarms in water identification. The mechanism generates a spatial attention coefficient matrix, determines the focus information of the feature map, performs feature sorting and fusion, and suppresses background that is irrelevant to water body identification.

- (3) Considering the high computational cost and low efficiency of current high-precision deep learning models, a deep supervision module is added to the model. The staged outputs of the decoder are used to improve model efficiency, giving the model a fast segmentation capability.
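The first two ideas in the list above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names (`full_scale_concat`, `attention_gate`), the toy shapes, and the random weights are hypothetical, nearest-neighbour upsampling stands in for whatever interpolation the model uses, and the gate follows the additive attention-gate form commonly paired with Attention U-Net, which may differ from the module used in the paper. Learned convolutions are reduced to 1 × 1 channel mixing so that the data flow stays visible.

```python
import numpy as np

def max_pool(x, k):
    """Downsample a (C, H, W) map with non-overlapping k x k max pooling."""
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).max(axis=(2, 4))

def upsample_nearest(x, k):
    """Upsample a (C, H, W) map by an integer factor k (nearest neighbour)."""
    return x.repeat(k, axis=1).repeat(k, axis=2)

def full_scale_concat(features, size):
    """Bring every square feature map to size x size and stack along channels.

    Finer maps are pooled down and coarser maps are upsampled, so one decoder
    level sees both low-level detail and high-level semantics.
    """
    resized = []
    for f in features:
        h = f.shape[1]
        if h > size:
            f = max_pool(f, h // size)
        elif h < size:
            f = upsample_nearest(f, size // h)
        resized.append(f)
    return np.concatenate(resized, axis=0)

def attention_gate(x, g, w_x, w_g, psi):
    """Additive spatial attention (assumed form, not confirmed by the source).

    x: (C_x, H, W) features to be gated; g: (C_g, H, W) gating signal.
    w_x: (C_int, C_x), w_g: (C_int, C_g), psi: (C_int,) act as 1 x 1 convs.
    Returns the gated features and the (H, W) attention coefficient matrix.
    """
    q = np.einsum("ic,chw->ihw", w_x, x) + np.einsum("ic,chw->ihw", w_g, g)
    q = np.maximum(q, 0.0)                                  # ReLU
    alpha = 1.0 / (1.0 + np.exp(-np.einsum("i,ihw->hw", psi, q)))  # sigmoid
    return x * alpha[None, :, :], alpha

# Toy example: five scales with 2 channels each, fused at the 8 x 8 level.
rng = np.random.default_rng(0)
features = [rng.normal(size=(2, s, s)) for s in (32, 16, 8, 4, 2)]
fused = full_scale_concat(features, size=8)        # shape (10, 8, 8)

g = rng.normal(size=(4, 8, 8))                     # hypothetical gating signal
w_x = rng.normal(size=(6, 10))
w_g = rng.normal(size=(6, 4))
psi = rng.normal(size=6)
gated, alpha = attention_gate(fused, g, w_x, w_g, psi)
print(fused.shape, alpha.shape, gated.shape)
```

Each entry of `alpha` lies between 0 and 1, so pixels with a low coefficient are scaled towards zero in `gated`; this is the sense in which a spatial attention matrix can suppress background responses.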

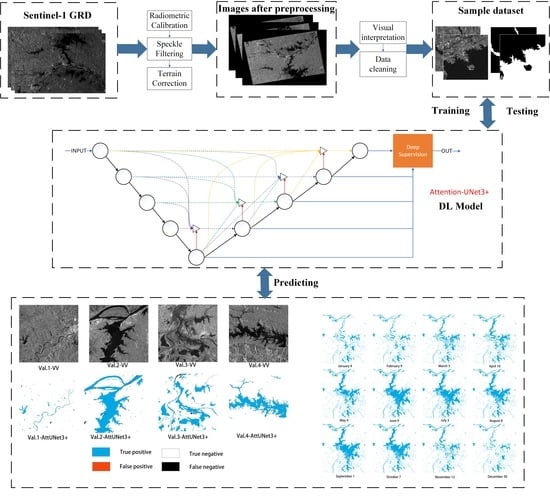

2. Methods

2.1. Preprocessing

2.2. Model Construction

2.2.1. Full-Scale Skip Connections

2.2.2. Attention Module

2.2.3. Deep Supervision

2.3. Accuracy Evaluation

| Ground truth \ Prediction | Water | Non-water | Sum |
|---|---|---|---|
| Water | TP | FN | TP + FN |
| Non-water | FP | TN | FP + TN |
| Sum | TP + FP | FN + TN | TP + TN + FP + FN |
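The results tables report IOU, Kappa, and F1-Score, all of which can be derived from the four confusion-matrix counts above. The sketch below assumes the standard definitions of these metrics, since the formulas themselves are not part of this excerpt; the function name `water_metrics` and the example counts are made up for illustration.

```python
def water_metrics(tp, fn, fp, tn):
    """Compute IoU, F1-score and Cohen's kappa for the water class.

    Standard definitions are assumed:
      IoU   = TP / (TP + FP + FN)
      F1    = 2 * P * R / (P + R), with P = TP / (TP + FP), R = TP / (TP + FN)
      Kappa = (p_o - p_e) / (1 - p_e)
    """
    n = tp + fn + fp + tn
    iou = tp / (tp + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    # Observed agreement and agreement expected by chance (row/column sums).
    p_o = (tp + tn) / n
    p_e = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / n**2
    kappa = (p_o - p_e) / (1 - p_e)
    return {"IoU": iou, "F1": f1, "Kappa": kappa}

# Hypothetical pixel counts, not taken from the paper.
scores = water_metrics(tp=80, fn=10, fp=20, tn=890)
for name, value in scores.items():
    print(f"{name}: {100 * value:.2f}%")
```

Because IoU and F1 ignore TN, they are less inflated than overall accuracy when non-water pixels dominate the scene, which is the usual reason for reporting them alongside Kappa.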

3. Study Area and Data

3.1. Study Area

3.2. Data

3.2.1. SAR Data

3.2.2. Sample Dataset

4. Results and Analysis

4.1. Comparison of Different Models

4.2. Stage Output Results of Deep Supervision

4.3. Multitemporal Analysis of Poyang Lake

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Time | Orbit | No. | Time | Orbit |

|---|---|---|---|---|---|

| 1 | 4 January 2021 | 35,987 | 13 | 3 July 2021 | 38,612 |

| 2 | 4 January 2021 | 35,987 | 14 | 3 July 2021 | 38,612 |

| 3 | 9 February 2021 | 36,512 | 15 | 8 August 2021 | 39,137 |

| 4 | 9 February 2021 | 36,512 | 16 | 8 August 2021 | 39,137 |

| 5 | 5 March 2021 | 36,862 | 17 | 1 September 2021 | 39,487 |

| 6 | 5 March 2021 | 36,862 | 18 | 1 September 2021 | 39,487 |

| 7 | 10 April 2021 | 37,387 | 19 | 7 October 2021 | 40,012 |

| 8 | 10 April 2021 | 37,387 | 20 | 7 October 2021 | 40,012 |

| 9 | 4 May 2021 | 37,737 | 21 | 12 November 2021 | 40,537 |

| 10 | 4 May 2021 | 37,737 | 22 | 12 November 2021 | 40,537 |

| 11 | 9 June 2021 | 38,262 | 23 | 30 December 2021 | 40,087 |

| 12 | 9 June 2021 | 38,262 | 24 | 30 December 2021 | 40,087 |

| IOU (%) | Val. 1 | Val. 2 | Val. 3 | Val. 4 | AVG | STD |

|---|---|---|---|---|---|---|

| TS | 45.92 | 86.23 | 70.22 | 77.56 | 69.98 | 15 |

| SegNet | 64.16 | 94.4 | 81.21 | 93.92 | 83.42 | 12.32 |

| Deeplabv3+ | 73.71 | 95.17 | 88.55 | 93.44 | 87.72 | 8.44 |

| UNet | 84.19 | 94.64 | 81.09 | 89.99 | 87.48 | 5.22 |

| Att-UNet | 90.81 | 96.13 | 89.25 | 92.75 | 92.24 | 2.57 |

| Att-UNet3+ | 94.94 | 96.54 | 93.02 | 95.58 | 95.02 | 1.29 |

| Kappa (%) | Val. 1 | Val. 2 | Val. 3 | Val. 4 | AVG | STD |

|---|---|---|---|---|---|---|

| TS | 61.82 | 90.04 | 78.17 | 84.86 | 78.72 | 10.63 |

| SegNet | 77.56 | 96.18 | 87.13 | 96.29 | 89.29 | 7.72 |

| Deeplabv3+ | 84.48 | 96.71 | 92.56 | 95.99 | 92.44 | 4.85 |

| UNet | 91.21 | 96.38 | 87.06 | 93.8 | 92.11 | 3.44 |

| Att-UNet | 95.06 | 97.4 | 93.1 | 95.57 | 95.28 | 1.53 |

| Att-UNet3+ | 97.34 | 97.67 | 95.57 | 97.32 | 96.98 | 0.82 |

| F1-Score (%) | Val. 1 | Val. 2 | Val. 3 | Val. 4 | AVG | STD |

|---|---|---|---|---|---|---|

| TS | 62.93 | 92.61 | 82.51 | 87.36 | 81.35 | 11.22 |

| SegNet | 78.17 | 97.12 | 89.63 | 96.86 | 90.44 | 7.7 |

| Deeplabv3+ | 84.87 | 97.53 | 93.93 | 96.61 | 93.24 | 5.01 |

| UNet | 91.41 | 97.25 | 89.56 | 94.73 | 93.24 | 2.97 |

| Att-UNet | 95.18 | 98.03 | 94.32 | 96.24 | 95.94 | 1.38 |

| Att-UNet3+ | 97.4 | 98.24 | 96.38 | 97.74 | 97.44 | 0.68 |
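The AVG and STD columns in the three tables above summarise the four validation areas. A short check, using the Att-UNet3+ IOU row as input, suggests that STD is the population standard deviation (dividing by 4 rather than 3); this is an inference from the reported numbers, not something stated in this excerpt.

```python
from statistics import fmean, pstdev

# IOU values of Att-UNet3+ on Val. 1 to Val. 4, copied from the table.
iou_att_unet3p = [94.94, 96.54, 93.02, 95.58]

avg = fmean(iou_att_unet3p)
std = pstdev(iou_att_unet3p)  # population standard deviation (divide by N)

print(f"AVG = {avg:.2f}, STD = {std:.2f}")  # AVG = 95.02, STD = 1.29
```

The same two calls reproduce the AVG and STD entries of the other rows checked this way, for example the TS row of the IOU table (69.98 and roughly 15).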

| | Out1 | Out2 | Out3 | Out4 | Out5 | SegNet | Deeplabv3+ |

|---|---|---|---|---|---|---|---|

| Time (s) | 3.61 | 3.86 | 4.15 | 4.83 | 10.06 | 6.55 | 6.01 |

| Parameters | 18.87 M | 21.96 M | 24.94 M | 27.96 M | 31.09 M | 29.46 M | 41.28 M |

| IOU (%) | 73.25 | 79.99 | 86.64 | 91.54 | 95.02 | 83.42 | 87.72 |

| Kappa (%) | 81.84 | 87.1 | 91.74 | 94.51 | 96.98 | 89.29 | 92.44 |
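One way to read the stage-output table is as a speed and accuracy trade-off: earlier decoder outputs are faster but less accurate. The sketch below picks the fastest stage that still meets a required IOU. The selection rule and the name `fastest_stage` are hypothetical and are not described in the source; only the time and IOU values are copied from the table.

```python
# (time in seconds, IOU in percent) for each staged output, from the table.
STAGES = {
    "Out1": (3.61, 73.25),
    "Out2": (3.86, 79.99),
    "Out3": (4.15, 86.64),
    "Out4": (4.83, 91.54),
    "Out5": (10.06, 95.02),
}

def fastest_stage(min_iou):
    """Return the fastest stage whose IOU is at least min_iou, or None."""
    candidates = [
        (seconds, name)
        for name, (seconds, iou) in STAGES.items()
        if iou >= min_iou
    ]
    return min(candidates)[1] if candidates else None

choice = fastest_stage(90.0)
speedup = STAGES["Out5"][0] / STAGES[choice][0]
print(choice, f"{speedup:.2f}x faster than Out5")
```

With a 90% IOU requirement this selects Out4, which runs in roughly half the time of the final output at a cost of about 3.5 IOU points.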

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, C.; Zhang, H.; Wang, C.; Ge, J.; Wu, F. Water Surface Mapping from Sentinel-1 Imagery Based on Attention-UNet3+: A Case Study of Poyang Lake Region. Remote Sens. 2022, 14, 4708. https://doi.org/10.3390/rs14194708
