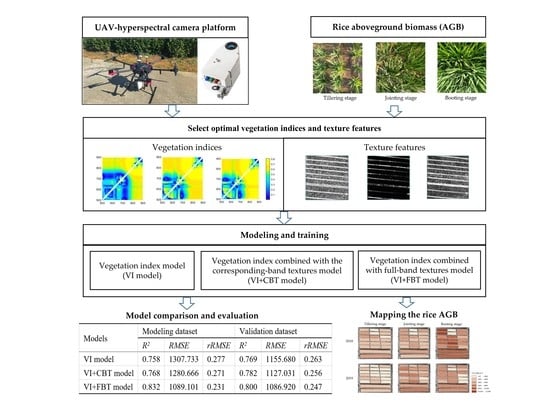

Integrating the Textural and Spectral Information of UAV Hyperspectral Images for the Improved Estimation of Rice Aboveground Biomass

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Field Experiments

2.3. UAV-Based Hyperspectral Image Acquisition and Preprocessing

2.4. AGB Measurement

2.5. Calculations of Vegetation Index and Texture Features

2.5.1. Calculations of Vegetation Index

2.5.2. Calculations of Spatial Features

2.6. Model Construction and Evaluation

2.6.1. Model Construction

2.6.2. Model Evaluation

3. Results and Analysis

3.1. AGB Data Statistical Analysis

3.2. Vegetation Index Selection and Vegetation-Index-Based AGB Model Construction

3.2.1. Vegetation Index Selection

3.2.2. Vegetation Index AGB Model Construction

3.3. Texture-Feature Selection and Coupled AGB Model Construction

3.3.1. Texture Features Selection

3.3.2. Construction of Coupled Model Integrating Vegetation Indices with Corresponding-Band Textures

3.4. Construction of Coupled Model Integrating Vegetation Indices with Full-Band Textures

3.5. Effects of Growth Stages on Model Performance

3.6. The Performance of Different Models under Various Nitrogen Gradients

4. Discussion

4.1. The Sensitive Bands of the Vegetation Indices and Texture Features for Rice AGB Estimation

4.2. Comparison of AGB Estimation Accuracy of Combined Vegetation Indices with Texture Features

4.3. Comparison of Different Growth Stages in Rice AGB Estimation

4.4. Potential Improvements on the Research

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Krishnan, R.; Ramakrishnan, B.; Reddy, K.R.; Reddy, V.R. High-Temperature Effects on Rice Growth, Yield, and Grain Quality. In Advances in Agronomy; Sparks, D.L., Ed.; Academic Press: Burlington, NJ, USA, 2011; Volume 111, pp. 87–206.

- [2] Devia, C.A.; Rojas, J.P.; Petro, E.; Martinez, C.; Mondragon, I.F.; Patino, D.; Rebolledo, M.C.; Colorado, J. High-Throughput Biomass Estimation in Rice Crops Using UAV Multispectral Imagery. J. Intell. Robot. Syst. 2019, 96, 573–589.

- [3] Gnyp, M.L.; Miao, Y.; Yuan, F.; Ustin, S.L.; Yu, K.; Yao, Y.; Huang, S.; Bareth, G. Hyperspectral canopy sensing of paddy rice aboveground biomass at different growth stages. Field Crops Res. 2014, 155, 42–55.

- [4] Tao, H.L.; Feng, H.K.; Xu, L.J.; Miao, M.K.; Long, H.L.; Yue, J.B.; Li, Z.H.; Yang, G.J.; Yang, X.D.; Fan, L.L. Estimation of Crop Growth Parameters Using UAV-Based Hyperspectral Remote Sensing Data. Sensors 2020, 20, 1296.

- [5] Atzberger, C. Advances in Remote Sensing of Agriculture: Context Description, Existing Operational Monitoring Systems and Major Information Needs. Remote Sens. 2013, 5, 949–981.

- [6] Wang, L.; Zhang, G.; Wang, Z.; Liu, J.; Shang, J.; Liang, L. Bibliometric Analysis of Remote Sensing Research Trend in Crop Growth Monitoring: A Case Study in China. Remote Sens. 2019, 11, 809.

- [7] Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- [8] Mutanga, O.; Skidmore, A.K. Narrow band vegetation indices overcome the saturation problem in biomass estimation. Int. J. Remote Sens. 2004, 25, 3999–4014.

- [9] Wan, L.; Li, Y.J.; Cen, H.Y.; Zhu, J.P.; Yin, W.X.; Wu, W.K.; Zhu, H.Y.; Sun, D.W.; Zhou, W.J.; He, Y. Combining UAV-Based Vegetation Indices and Image Classification to Estimate Flower Number in Oilseed Rape. Remote Sens. 2018, 10, 1484.

- [10] Hatfield, J.L.; Prueger, J.H.; Sauer, T.J.; Dold, C.; O’Brien, P.; Wacha, K. Applications of Vegetative Indices from Remote Sensing to Agriculture: Past and Future. Inventions 2019, 4, 71.

- [11] Corti, M.; Cavalli, D.; Cabassi, G.; Vigoni, A.; Degano, L.; Gallina, P.M. Application of a low-cost camera on a UAV to estimate maize nitrogen-related variables. Precis. Agric. 2019, 20, 675–696.

- [12] Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2019, 20, 611–629.

- [13] Wei, L.F.; Yu, M.; Liang, Y.J.; Yuan, Z.R.; Huang, C.; Li, R.; Yu, Y.W. Precise Crop Classification Using Spectral-Spatial-Location Fusion Based on Conditional Random Fields for UAV-Borne Hyperspectral Remote Sensing Imagery. Remote Sens. 2019, 11, 2011.

- [14] Pu, R.L.; Cheng, J. Mapping forest leaf area index using reflectance and textural information derived from WorldView-2 imagery in a mixed natural forest area in Florida, US. Int. J. Appl. Earth Obs. Geoinf. 2015, 42, 11–23.

- [15] Eckert, S. Improved Forest Biomass and Carbon Estimations Using Texture Measures from WorldView-2 Satellite Data. Remote Sens. 2012, 4, 810–829.

- [16] Wang, M.; Su, Y.Z.; Yang, X. Spatial Distribution of Soil Organic Carbon and Its Influencing Factors in Desert Grasslands of the Hexi Corridor, Northwest China. PLoS ONE 2014, 9, e94652.

- [17] Yue, J.B.; Yang, G.J.; Li, C.C.; Li, Z.H.; Wang, Y.J.; Feng, H.K.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.

- [18] Zhang, H.D.; Wang, L.Q.; Tian, T.; Yin, J.H. A Review of Unmanned Aerial Vehicle Low-Altitude Remote Sensing (UAV-LARS) Use in Agricultural Monitoring in China. Remote Sens. 2021, 13, 1221.

- [19] Liu, J.; Xiang, J.J.; Jin, Y.J.; Liu, R.H.; Yan, J.N.; Wang, L.Z. Boost Precision Agriculture with Unmanned Aerial Vehicle Remote Sensing and Edge Intelligence: A Survey. Remote Sens. 2021, 13, 4387.

- [20] Zheng, H.B.; Cheng, T.; Li, D.; Zhou, X.; Yao, X.; Tian, Y.C.; Cao, W.X.; Zhu, Y. Evaluation of RGB, Color-Infrared and Multispectral Images Acquired from Unmanned Aerial Systems for the Estimation of Nitrogen Accumulation in Rice. Remote Sens. 2018, 10, 824.

- [21] Deng, L.; Mao, Z.H.; Li, X.J.; Hu, Z.W.; Duan, F.Z.; Yan, Y.N. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136.

- [22] Van der Meer, F.D.; van der Werff, H.M.A.; van Ruitenbeek, F.J.A.; Hecker, C.A.; Bakker, W.H.; Noomen, M.F.; van der Meijde, M.; Carranza, E.J.M.; de Smeth, J.B.; Woldai, T. Multi- and hyperspectral geologic remote sensing: A review. Int. J. Appl. Earth Obs. Geoinf. 2012, 14, 112–128.

- [23] Di Gennaro, S.F.; Toscano, P.; Gatti, M.; Poni, S.; Berton, A.; Matese, A. Spectral Comparison of UAV-Based Hyper and Multispectral Cameras for Precision Viticulture. Remote Sens. 2022, 14, 449.

- [24] Zhao, D.H.; Huang, L.M.; Li, J.L.; Qi, J.G. A comparative analysis of broadband and narrowband derived vegetation indices in predicting LAI and CCD of a cotton canopy. ISPRS J. Photogramm. Remote Sens. 2007, 62, 25–33.

- [25] Baresel, J.P.; Rischbeck, P.; Hu, Y.C.; Kipp, S.; Hu, Y.C.; Barmeier, G.; Mistele, B.; Schmidhalter, U. Use of a digital camera as alternative method for non-destructive detection of the leaf chlorophyll content and the nitrogen nutrition status in wheat. Comput. Electron. Agric. 2017, 140, 25–33.

- [26] Choudhary, S.S.; Biswal, S.; Saha, R.; Chatterjee, C. A non-destructive approach for assessment of nitrogen status of wheat crop using unmanned aerial vehicle equipped with RGB camera. Arab. J. Geosci. 2021, 14, 1739.

- [27] Zheng, H.B.; Cheng, T.; Li, D.; Yao, X.; Tian, Y.C.; Cao, W.X.; Zhu, Y. Combining Unmanned Aerial Vehicle (UAV)-Based Multispectral Imagery and Ground-Based Hyperspectral Data for Plant Nitrogen Concentration Estimation in Rice. Front. Plant Sci. 2018, 9, 936.

- [28] Abdulridha, J.; Ampatzidis, Y.; Kakarla, S.C.; Roberts, P. Detection of target spot and bacterial spot diseases in tomato using UAV-based and benchtop-based hyperspectral imaging techniques. Precis. Agric. 2020, 21, 955–978.

- [29] Yang, W.; Nigon, T.; Hao, Z.Y.; Paiao, G.D.; Fernandez, F.G.; Mulla, D.; Yang, C. Estimation of corn yield based on hyperspectral imagery and convolutional neural network. Comput. Electron. Agric. 2021, 184, 92.

- [30] Liu, S.S.; Li, L.T.; Gao, W.H.; Zhang, Y.K.; Liu, Y.N.; Wang, S.Q.; Lu, J.W. Diagnosis of nitrogen status in winter oilseed rape (Brassica napus L.) using in-situ hyperspectral data and unmanned aerial vehicle (UAV) multispectral images. Comput. Electron. Agric. 2018, 151, 185–195.

- [31] Jiang, X.G.; Tang, L.L.; Wang, C.Y.; Wang, C. Spectral characteristics and feature selection of hyperspectral remote sensing data. Int. J. Remote Sens. 2004, 25, 51–59.

- [32] Thenkabail, P.S.; Smith, R.B.; De Pauw, E. Evaluation of narrowband and broadband vegetation indices for determining optimal hyperspectral wavebands for agricultural crop characterization. Photogramm. Eng. Remote Sens. 2002, 68, 607–621.

- [33] Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

- [34] Li, S.; Yuan, F.; Ata-Ui-Karim, S.T.; Zheng, H.; Cheng, T.; Liu, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cao, Q. Combining Color Indices and Textures of UAV-Based Digital Imagery for Rice LAI Estimation. Remote Sens. 2019, 11, 1763.

- [35] Wang, F.; Yi, Q.; Hu, J.; Xie, L.; Yao, X.; Xu, T.; Zheng, J. Combining spectral and textural information in UAV hyperspectral images to estimate rice grain yield. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 2397.

- [36] Makelainen, A.; Saari, H.; Hippi, I.; Sarkeala, J.; Soukkamaki, J. 2D Hyperspectral Frame Imager Camera Data in Photogrammetric Mosaicking. In Proceedings of the Conference on Unmanned Aerial Vehicles in Geomatics (UAV-g), Rostock, Germany, 4–6 September 2013; pp. 263–267.

- [37] Wu, C.Y.; Niu, Z.; Tang, Q.; Huang, W.J. Estimating chlorophyll content from hyperspectral vegetation indices: Modeling and validation. Agric. For. Meteorol. 2008, 148, 1230–1241.

- [38] He, J.Y.; Zhang, N.; Su, X.; Lu, J.S.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Estimating Leaf Area Index with a New Vegetation Index Considering the Influence of Rice Panicles. Remote Sens. 2019, 11, 1809.

- [39] Townshend, J.R.G.; Goff, T.E.; Tucker, C.J. Multitemporal Dimensionality of Images of Normalized Difference Vegetation Index at Continental Scales. IEEE Trans. Geosci. Remote Sens. 1985, 23, 888–895.

- [40] Jordan, C.F. Derivation of Leaf-Area Index From Quality of Light on Forest Floor. Ecology 1969, 50, 663.

- [41] Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- [42] Bharati, M.H.; Liu, J.J.; MacGregor, J.F. Image texture analysis: Methods and comparisons. Chemom. Intell. Lab. Syst. 2004, 72, 57–71.

- [43] Haralick, R.M. Glossary and Index to Remotely Sensed Image Pattern-Recognition Concepts. Pattern Recognit. 1973, 5, 391–403.

- [44] Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- [45] Bai, X.; Chen, Y.; Chen, J.; Cui, W.; Tai, X.; Zhang, Z.; Cui, J.; Ning, J. Optimal window size selection for spectral information extraction of sampling points from UAV multispectral images for soil moisture content inversion. Comput. Electron. Agric. 2021, 190, 106456.

- [46] Yue, J.B.; Feng, H.K.; Jin, X.L.; Yuan, H.H.; Li, Z.H.; Zhou, C.Q.; Yang, G.J.; Tian, Q.J. A Comparison of Crop Parameters Estimation Using Images from UAV-Mounted Snapshot Hyperspectral Sensor and High-Definition Digital Camera. Remote Sens. 2018, 10, 1138.

- [47] Silhavy, P.; Silhavy, R.; Prokopova, Z. Evaluation of Data Clustering for Stepwise Linear Regression on Use Case Points Estimation. In Proceedings of the 6th Computer Science On-Line Conference (CSOC), Zlin, Czech Republic, 26–29 April 2017; pp. 491–496.

- [48] Marill, K.A. Advanced statistics: Linear regression, Part II: Multiple linear regression. Acad. Emerg. Med. 2004, 11, 94–102.

- [49] Xie, Q.Y.; Huang, W.J.; Zhang, B.; Chen, P.F.; Song, X.Y.; Pascucci, S.; Pignatti, S.; Laneve, G.; Dong, Y.Y. Estimating Winter Wheat Leaf Area Index From Ground and Hyperspectral Observations Using Vegetation Indices. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 771–780.

- [50] Yoder, B.J.; Waring, R.H. The Normalized Difference Vegetation Index of Small Douglas-Fir Canopies with Varying Chlorophyll Concentrations. Remote Sens. Environ. 1994, 49, 81–91.

- [51] Wang, F.M.; Yao, X.P.; Xie, L.L.; Zheng, J.Y.; Xu, T.Y. Rice Yield Estimation Based on Vegetation Index and Florescence Spectral Information from UAV Hyperspectral Remote Sensing. Remote Sens. 2021, 13, 3390.

- [52] Yao, Y.; Miao, Y.; Cao, Q.; Wang, H.; Gnyp, M.L.; Bareth, G.; Khosla, R.; Yang, W.; Liu, F.; Liu, C. In-Season Estimation of Rice Nitrogen Status With an Active Crop Canopy Sensor. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4403–4413.

- [53] Yue, J.; Feng, H.; Li, Z.; Zhou, C.; Xu, K. Mapping winter-wheat biomass and grain yield based on a crop model and UAV remote sensing. Int. J. Remote Sens. 2021, 42, 1577–1601.

- [54] Zha, H.N.; Miao, Y.X.; Wang, T.T.; Li, Y.; Zhang, J.; Sun, W.C.; Feng, Z.Q.; Kusnierek, K. Improving Unmanned Aerial Vehicle Remote Sensing-Based Rice Nitrogen Nutrition Index Prediction with Machine Learning. Remote Sens. 2020, 12, 215.

- [55] Hussain, S.; Gao, K.X.; Din, M.; Gao, Y.K.; Shi, Z.H.; Wang, S.Q. Assessment of UAV-Onboard Multispectral Sensor for Non-Destructive Site-Specific Rapeseed Crop Phenotype Variable at Different Phenological Stages and Resolutions. Remote Sens. 2020, 12, 397.

- [56] Grava, J.; Raisanen, K.A. Growth and nutrient accumulation and distribution in wild rice. Agron. J. 1978, 70, 1077–1081.

- [57] Zhao, G.M.; Miao, Y.X.; Wang, H.Y.; Su, M.M.; Fan, M.S.; Zhang, F.S.; Jiang, R.F.; Zhang, Z.J.; Liu, C.; Liu, P.H.; et al. A preliminary precision rice management system for increasing both grain yield and nitrogen use efficiency. Field Crops Res. 2013, 154, 23–30.

- [58] Bacon, P.E.; Lewin, L.G. Rice growth under different stubble and nitrogen-fertilization management-techniques. Field Crops Res. 1990, 24, 51–65.

- [59] Cheng, T.; Song, R.; Li, D.; Zhou, K.; Zheng, H.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Spectroscopic Estimation of Biomass in Canopy Components of Paddy Rice Using Dry Matter and Chlorophyll Indices. Remote Sens. 2017, 9, 319.

- [60] Yang, H.; Li, F.; Wang, W.; Yu, K. Estimating Above-Ground Biomass of Potato Using Random Forest and Optimized Hyperspectral Indices. Remote Sens. 2021, 13, 2339.

- [61] Liu, H.P.; An, H.J.; Zhang, Y.X. Analysis of WorldView-2 band importance in tree species classification based on recursive feature elimination. Curr. Sci. 2018, 115, 1366–1374.

- [62] Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357.

- [63] Fu, Y.; Yang, G.; Song, X.; Li, Z.; Xu, X.; Feng, H.; Zhao, C. Improved Estimation of Winter Wheat Aboveground Biomass Using Multiscale Textures Extracted from UAV-Based Digital Images and Hyperspectral Feature Analysis. Remote Sens. 2021, 13, 581.

- [64] Liu, Y.; Liu, S.; Li, J.; Guo, X.; Wang, S.; Lu, J. Estimating biomass of winter oilseed rape using vegetation indices and texture metrics derived from UAV multispectral images. Comput. Electron. Agric. 2019, 166, 105026.

- [65] Lu, J.; Eitel, J.U.H.; Engels, M.; Zhu, J.; Ma, Y.; Liao, F.; Zheng, H.; Wang, X.; Yao, X.; Cheng, T.; et al. Improving Unmanned Aerial Vehicle (UAV) remote sensing of rice plant potassium accumulation by fusing spectral and textural information. Int. J. Appl. Earth Obs. Geoinf. 2021, 104, 102592.

- [66] Zheng, H.; Ma, J.; Zhou, M.; Li, D.; Yao, X.; Cao, W.; Zhu, Y.; Cheng, T. Enhancing the Nitrogen Signals of Rice Canopies across Critical Growth Stages through the Integration of Textural and Spectral Information from Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2020, 12, 957.

- [67] Yang, J.; Ding, F.; Chen, C.; Liu, T.; Sun, C.; Ding, D.; Huo, Z. Correlation of wheat biomass and yield with UAV image characteristic parameters. Trans. Chin. Soc. Agric. Eng. 2019, 35, 104–110.

- [68] Liu, C.; Yang, G.; Li, Z.; Tang, F.; Feng, H.; Wang, J.; Zhang, C.; Zhang, L. Monitoring of Winter Wheat Biomass Using UAV Hyperspectral Texture Features. In Proceedings of the 11th IFIP WG 5.14 International Conference on Computer and Computing Technologies in Agriculture (CCTA), Jilin, China, 12–15 August 2019; pp. 241–250.

- [69] Zheng, H.B.; Zhou, X.; He, J.Y.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Early season detection of rice plants using RGB, NIR-G-B and multispectral images from unmanned aerial vehicle (UAV). Comput. Electron. Agric. 2020, 169.

- [70] Wang, L.; Chen, S.S.; Peng, Z.P.; Huang, J.C.A.; Wang, C.Y.; Jiang, H.; Zheng, Q.; Li, D. Phenology Effects on Physically Based Estimation of Paddy Rice Canopy Traits from UAV Hyperspectral Imagery. Remote Sens. 2021, 13, 1792.

- [71] Ren, H.R.; Zhou, G.S.; Zhang, F. Using negative soil adjustment factor in soil-adjusted vegetation index (SAVI) for aboveground living biomass estimation in arid grasslands. Remote Sens. Environ. 2018, 209, 439–445.

- [72] Yang, K.; Gong, Y.; Fang, S.; Duan, B.; Yuan, N.; Peng, Y.; Wu, X.; Zhu, R. Combining Spectral and Texture Features of UAV Images for the Remote Estimation of Rice LAI throughout the Entire Growing Season. Remote Sens. 2021, 13, 3001.

- [73] Guo, Y.; Chen, S.; Wu, Z.; Wang, S.; Robin Bryant, C.; Senthilnath, J.; Cunha, M.; Fu, Y.H. Integrating Spectral and Textural Information for Monitoring the Growth of Pear Trees Using Optical Images from the UAV Platform. Remote Sens. 2021, 13, 1795.

- [74] Fu, Y.Y.; Yang, G.J.; Li, Z.H.; Song, X.Y.; Li, Z.H.; Xu, X.G.; Wang, P.; Zhao, C.J. Winter Wheat Nitrogen Status Estimation Using UAV-Based RGB Imagery and Gaussian Processes Regression. Remote Sens. 2020, 12, 3778.

- [75] Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 10.

- [76] Castro, W.; Marcato Junior, J.; Polidoro, C.; Osco, L.P.; Goncalves, W.; Rodrigues, L.; Santos, M.; Jank, L.; Barrios, S.; Valle, C.; et al. Deep Learning Applied to Phenotyping of Biomass in Forages with UAV-Based RGB Imagery. Sensors 2020, 20, 4802.

- [77] Yang, S.Q.; Hu, L.; Wu, H.B.; Ren, H.Z.; Qiao, H.B.; Li, P.J.; Fan, W.J. Integration of Crop Growth Model and Random Forest for Winter Wheat Yield Estimation From UAV Hyperspectral Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6253–6269.

- [78] Alvarez-Taboada, F.; Paredes, C.; Julian-Pelaz, J. Mapping of the Invasive Species Hakea sericea Using Unmanned Aerial Vehicle (UAV) and WorldView-2 Imagery and an Object-Oriented Approach. Remote Sens. 2017, 9, 913.

- [79] Cen, H.; Wan, L.; Zhu, J.; Li, Y.; Li, X.; Zhu, Y.; Weng, H.; Wu, W.; Yin, W.; Xu, C.; et al. Dynamic monitoring of biomass of rice under different nitrogen treatments using a lightweight UAV with dual image-frame snapshot cameras. Plant Methods 2019, 15, 32.

- [80] Yu, M.L.; Liu, S.J.; Song, L.; Huang, J.W.; Li, T.Z.; Wang, D. Spectral Characteristics and Remote Sensing Model of Tailings with Different Water Contents. Spectrosc. Spectr. Anal. 2019, 39, 3096–3101.

- [81] Liu, C.; Yang, G.; Li, Z.; Tang, F.; Wang, J.; Zhang, C.; Zhang, L. Biomass Estimation in Winter Wheat by UAV Spectral Information and Texture Information Fusion. Sci. Agric. Sin. 2018, 51, 3060–3073.

- [82] Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

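| Vegetation Index | Formula | Reference |
|---|---|---|
| Normalized difference vegetation index, NDVI | $(R_{\lambda_1} - R_{\lambda_2}) / (R_{\lambda_1} + R_{\lambda_2})$ | [39] |
| Ratio vegetation index, RVI | $R_{\lambda_1} / R_{\lambda_2}$ | [40] |
| Difference vegetation index, DVI | $R_{\lambda_1} - R_{\lambda_2}$ | [41] |

where $R_{\lambda_1}$ and $R_{\lambda_2}$ denote the reflectance at the two wavelengths (nm) of a band combination.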
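All three indices are standard two-band forms, so each one can be evaluated for any wavelength pair. The Python sketch below is a minimal illustration. It assumes `r1` and `r2` are reflectances (0 to 1) at λ1 and λ2. The function names and the sample reflectances at 800 nm and 685 nm are hypothetical and are not values from the study.

```python
def ndvi(r1: float, r2: float) -> float:
    """Normalized difference of the reflectances at lambda1 and lambda2."""
    return (r1 - r2) / (r1 + r2)


def rvi(r1: float, r2: float) -> float:
    """Ratio of the reflectance at lambda1 to the reflectance at lambda2."""
    return r1 / r2


def dvi(r1: float, r2: float) -> float:
    """Difference of the reflectances at lambda1 and lambda2."""
    return r1 - r2


# Hypothetical canopy reflectances at 800 nm and 685 nm (illustrative only).
r800, r685 = 0.45, 0.05
print(round(ndvi(r800, r685), 3), round(rvi(r800, r685), 3), round(dvi(r800, r685), 3))
```

Keeping each index as a plain function of two reflectances makes it easy to evaluate the same formula over many candidate band pairs.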

| Texture | Meaning |
|---|---|
| Mean (MEA) | The overall grey level in the GLCM window. |
| Variance (VAR) | The variation of grey levels in the GLCM window. |
| Homogeneity (HOM) | The homogeneity of grey levels in the GLCM window. |
| Contrast (CON) | The clarity of texture in the GLCM window; the opposite of HOM. |
| Dissimilarity (DIS) | The dissimilarity between pixels in the GLCM window; similar to CON. |
| Entropy (ENT) | The diversity of grey levels in the GLCM window; it increases with the complexity of the image texture. |
| Second moment (SEM) | The uniformity of grey levels in the GLCM window. |
| Correlation (COR) | The degree to which grey values are linearly correlated along the row or column direction in the GLCM window. |
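The table gives only the meaning of each texture measure. The Python sketch below computes the same eight measures from a grey-level co-occurrence matrix (GLCM) using common Haralick-style definitions. It assumes an image patch that has already been quantized to integer grey levels, a single pixel offset (by default one pixel to the right), a symmetric and normalized GLCM, and the natural logarithm for entropy. This text does not state the study's window size, quantization level, offset or exact formulas, and they may differ. The function names are hypothetical.

```python
import numpy as np


def glcm(img, levels, dx=1, dy=0):
    """Symmetric, normalized GLCM for one non-negative pixel offset (dx, dy).

    img holds integer grey levels in the range [0, levels).
    """
    rows, cols = img.shape
    counts = np.zeros((levels, levels), dtype=float)
    ref = img[: rows - dy, : cols - dx]  # reference pixels
    nbr = img[dy:, dx:]                  # neighbours at (row + dy, col + dx)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    counts = counts + counts.T           # count each pair in both directions
    return counts / counts.sum()


def glcm_features(p):
    """Eight Haralick-style measures from a normalized GLCM p."""
    levels = p.shape[0]
    i, j = np.meshgrid(np.arange(levels), np.arange(levels), indexing="ij")
    mean_i, mean_j = (i * p).sum(), (j * p).sum()
    var_i = ((i - mean_i) ** 2 * p).sum()
    var_j = ((j - mean_j) ** 2 * p).sum()
    cov = ((i - mean_i) * (j - mean_j) * p).sum()
    denom = np.sqrt(var_i * var_j)
    nz = p > 0  # skip empty cells so log(0) is never evaluated
    return {
        "MEA": float(mean_i),
        "VAR": float(var_i),
        "HOM": float((p / (1.0 + (i - j) ** 2)).sum()),
        "CON": float((p * (i - j) ** 2).sum()),
        "DIS": float((p * np.abs(i - j)).sum()),
        "ENT": float(-(p[nz] * np.log(p[nz])).sum()),  # natural log assumed
        "SEM": float((p ** 2).sum()),
        "COR": float(cov / denom) if denom > 0 else 0.0,
    }


# Small 4 x 4 example patch with grey levels 0 to 3 (not study data).
patch = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 2, 2, 2],
                  [2, 2, 3, 3]])
p = glcm(patch, levels=4)
features = glcm_features(p)
print({name: round(value, 4) for name, value in features.items()})
```

CON weights each cell by $(i-j)^2$ and DIS weights it by $|i-j|$, which is why the table describes the two measures as similar.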

| Growth Stage | AVG * | MIN. | MAX. | SD. | VAR. | CV (%) |
|---|---|---|---|---|---|---|
| Tillering stage | 1869.305 | 498.667 | 6069.429 | 972.093 | 944,965.520 | 52.0 |
| Jointing stage | 5101.651 | 1900.444 | 9112.444 | 1884.780 | 3,552,394.162 | 36.9 |
| Booting stage | 7909.396 | 3424.667 | 12,707.840 | 2093.530 | 4,382,866.921 | 26.5 |
| Tillering–jointing–booting stages | 4960.117 | 498.667 | 12,707.840 | 3008.570 | 9,051,493.766 | 60.7 |
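In the table, CV is SD divided by the mean and expressed as a percentage, and VAR is approximately SD squared. The Python sketch below computes these summary statistics for a list of AGB samples and recomputes the CV column from the table's own AVG and SD entries. It assumes the sample (n − 1) standard deviation, because this text does not state which estimator the study used. The function name `describe` is hypothetical.

```python
import statistics


def describe(values):
    """Mean, min, max, SD, variance and CV (%) of a list of AGB samples."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1); assumed, not stated in the study
    return {"AVG": mean, "MIN": min(values), "MAX": max(values),
            "SD": sd, "VAR": sd ** 2, "CV": 100 * sd / mean}


# Recompute CV (%) from the AVG and SD columns of the table.
table = {
    "Tillering": (1869.305, 972.093),
    "Jointing": (5101.651, 1884.780),
    "Booting": (7909.396, 2093.530),
    "All stages": (4960.117, 3008.570),
}
cv_check = {stage: round(100 * sd / avg, 1) for stage, (avg, sd) in table.items()}
print(cv_check)
```

The recomputed values match the CV (%) column, and the drop in CV from tillering to booting is visible directly in the ratio of SD to mean.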

| Index | Selected Band Combinations (nm) | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| DVI | (520,536) | (600,685) | (650,588) | (752,800) | (776,840) | (752,888) | (688,704) | (679,712) | (808,748) | (848,744) |
| NDVI | (528,685) | (568,760) | (696,768) | (691,879) | (760,699) | (768,664) | (784,700) | (800,504) | (800,685) | (888,504) |
| RVI | (504,568) | (551,671) | (584,536) | (685,576) | (744,800) | (776,720) | (792,848) | (800,724) | (824,728) | (840,744) |
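This text does not describe how the band combinations above were selected. One common screening approach is to evaluate an index for every band pair and rank the pairs by the absolute Pearson correlation with measured AGB. The Python sketch below shows that approach only as an assumption, using synthetic data and a hypothetical function name. For NDVI and DVI, swapping the two bands only flips the sign of the index, so the sketch scores each unordered pair once for those two indices.

```python
import numpy as np

INDEX_FUNCS = {
    "NDVI": lambda a, b: (a - b) / (a + b),
    "RVI": lambda a, b: a / b,
    "DVI": lambda a, b: a - b,
}


def rank_band_pairs(refl, wavelengths, agb, index="NDVI", top_k=10):
    """Score band pairs by |Pearson r| between the index and AGB.

    refl: (n_samples, n_bands) reflectance; wavelengths: (n_bands,) in nm;
    agb: (n_samples,). Returns [(lambda1, lambda2, |r|), ...], best first.
    """
    f = INDEX_FUNCS[index]
    # NDVI and DVI change sign when the bands are swapped, so |r| is unchanged.
    unordered = index in ("NDVI", "DVI")
    n_bands = refl.shape[1]
    scores = []
    for i in range(n_bands):
        for j in range(i + 1 if unordered else 0, n_bands):
            if i == j:
                continue
            vi = f(refl[:, i], refl[:, j])
            if np.std(vi) == 0:
                continue  # correlation is undefined for a constant index
            r = np.corrcoef(vi, agb)[0, 1]
            scores.append((abs(float(r)), int(wavelengths[i]), int(wavelengths[j])))
    scores.sort(reverse=True)
    return [(w1, w2, s) for s, w1, w2 in scores[:top_k]]


# Synthetic example: AGB is driven by the normalized difference of 800 nm and 685 nm.
rng = np.random.default_rng(0)
wavelengths = np.array([504, 600, 685, 750, 800])
refl = rng.uniform(0.05, 0.5, size=(60, wavelengths.size))
agb = 8000 * (refl[:, 4] - refl[:, 2]) / (refl[:, 4] + refl[:, 2]) + rng.normal(0, 50, 60)
top = rank_band_pairs(refl, wavelengths, agb, index="NDVI", top_k=3)
print(top)
```

With hundreds of hyperspectral bands the same loop still works, but the number of pairs grows quadratically. A vectorized version or a coarser band grid may be preferable in practice.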

| Bands | Filtered Textures | | | | | |
|---|---|---|---|---|---|---|
| Vegetation index corresponding bands | SEM748 | MEA800 | MEA808 | | | |
| Full bands | COR504 | ENT536 | COR650 | COR632 | ENT584 | COR635 |
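The coupled models add texture features to the vegetation-index predictors. This text does not give the regression form. The Python sketch below assumes ordinary multiple linear regression fitted by least squares. It uses synthetic, hypothetical predictor columns (three index values and two texture values) to show how a VI-only model and a VI-plus-texture model can be fitted on the same samples and compared. The helper names are hypothetical.

```python
import numpy as np


def fit_mlr(X, y):
    """Ordinary least squares y ~ b0 + X @ b; returns the intercept and coefficients."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef[0], coef[1:]


def r2(y, y_hat):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


rng = np.random.default_rng(1)
n = 80
vi = rng.uniform(0.2, 0.9, size=(n, 3))   # hypothetical vegetation-index columns
tex = rng.uniform(0.0, 1.0, size=(n, 2))  # hypothetical texture columns
agb = 6000 * vi[:, 0] + 1500 * tex[:, 0] + rng.normal(0, 300, n)

# VI-only model versus VI-plus-texture (coupled) model on the same samples.
b0, b = fit_mlr(vi, agb)
r2_vi = r2(agb, b0 + vi @ b)
X_coupled = np.column_stack([vi, tex])
b0c, bc = fit_mlr(X_coupled, agb)
r2_coupled = r2(agb, b0c + X_coupled @ bc)
print(round(r2_vi, 3), round(r2_coupled, 3))
```

Calibration R² of a least-squares model can only stay the same or rise when predictors are added. For that reason, an independent validation dataset is what shows whether the texture features genuinely help.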

| Models | Calibration R² | Calibration RMSE | Calibration rRMSE | Validation R² | Validation RMSE | Validation rRMSE |
|---|---|---|---|---|---|---|
| Vegetation index model (VI model) | 0.758 | 1307.733 | 0.277 | 0.769 | 1155.680 | 0.263 |
| Vegetation index combined with corresponding-band texture model (VI+CBT model) | 0.768 | 1280.666 | 0.271 | 0.782 | 1127.031 | 0.256 |
| Vegetation index combined with full-band textures model (VI+FBT model) | 0.832 | 1089.101 | 0.231 | 0.800 | 1086.920 | 0.247 |
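The table reports R², RMSE and rRMSE for each model. The Python sketch below assumes the common definitions: R² = 1 − SS_res/SS_tot, RMSE as the square root of the mean squared error, and rRMSE as RMSE divided by the mean observed AGB. The last definition is consistent with the table, where RMSE/rRMSE falls between about 4,390 and 4,730 for every entry, close to the overall mean AGB of 4960.117. This text does not state whether the study computed R² this way or as a squared correlation. The function name and the toy values are hypothetical.

```python
import math


def evaluate(observed, predicted):
    """Return R2 (1 - SS_res/SS_tot), RMSE, and rRMSE (RMSE / mean observed)."""
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    rmse = math.sqrt(ss_res / n)
    return {"R2": 1.0 - ss_res / ss_tot, "RMSE": rmse, "rRMSE": rmse / mean_obs}


# Toy observed and predicted AGB values (hypothetical, not from the study).
metrics = evaluate([2000, 4000, 6000, 8000], [2500, 3500, 6500, 7500])
print(metrics)  # R2 0.95, RMSE 500.0, rRMSE 0.1
```

Because rRMSE divides by the mean observed AGB, it allows error comparisons across datasets whose AGB levels differ, such as the calibration and validation sets.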

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, T.; Wang, F.; Xie, L.; Yao, X.; Zheng, J.; Li, J.; Chen, S. Integrating the Textural and Spectral Information of UAV Hyperspectral Images for the Improved Estimation of Rice Aboveground Biomass. Remote Sens. 2022, 14, 2534. https://doi.org/10.3390/rs14112534
