Day and Night Clouds Detection Using a Thermal-Infrared All-Sky-View Camera

Abstract

1. Introduction

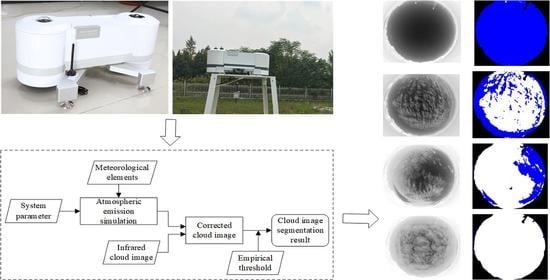

2. Description of the ASC-200 System

3. Retrieval of Clouds Information from TIR Image

3.1. Atmospheric Infrared Radiation Characteristics

3.2. TIR Clouds Imaging

3.3. Determination of the Clouds Region

4. Results and Discussion

4.1. Dataset Description

4.2. Consistency Analysis of the Observation Results

4.3. Recognition Accuracy of Different Types of Clouds by Infrared Observation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Cloud Type | Description | Cloud-Base Height (m) |
|---|---|---|
| AC | High patched clouds with small cloudlets, mosaic-like, white | 2700–5500 |
| CI | High, thin clouds, wisplike or sky covering, whitish | 6000–7500 |
| CU | Low, puffy clouds with clearly defined edges | 600–1600 |
| SC | Low or mid-level, lumpy layer of clouds, broken to almost overcast | 700–2400 |
| ST | Low or mid-level layer of clouds, uniform, generally overcast, gray | 200–500 |

| Cloud Type | AC | CI | CU | SC | ST | CF |
|---|---|---|---|---|---|---|
| Samples | 58 | 57 | 49 | 57 | 52 | 60 |
| Diff > 2 | 5 | 15 | 6 | 0 | 2 | 0 |
| Ratio | 8.6% | 26% | 12.2% | 0% | 3.8% | 0% |
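The Ratio row can be cross-checked with a few lines of arithmetic. The sketch below assumes that Ratio is simply the Diff > 2 count divided by the number of samples for each cloud type, expressed as a percentage; the table does not state this formula explicitly, and the variable and function names are illustrative only.

```python
# Counts copied from the table above. The ratio formula is an assumption:
# ratio = (Diff > 2 count) / (samples), expressed as a percentage.
samples = {"AC": 58, "CI": 57, "CU": 49, "SC": 57, "ST": 52, "CF": 60}
diff_gt_2 = {"AC": 5, "CI": 15, "CU": 6, "SC": 0, "ST": 2, "CF": 0}


def mismatch_ratio(n_diff, n_samples):
    """Return the percentage of samples counted under Diff > 2 (one decimal)."""
    return round(100.0 * n_diff / n_samples, 1)


# One ratio per cloud type, keyed by the same abbreviations as the table.
ratios = {k: mismatch_ratio(diff_gt_2[k], samples[k]) for k in samples}

for cloud_type, ratio in ratios.items():
    print(f"{cloud_type}: {ratio}%")
```

Under this assumption the computed values agree with the tabulated ratios to the precision shown, with CI coming out at 26.3% against the 26% given in the table.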

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Liu, D.; Xie, W.; Yang, M.; Gao, Z.; Ling, X.; Huang, Y.; Li, C.; Liu, Y.; Xia, Y. Day and Night Clouds Detection Using a Thermal-Infrared All-Sky-View Camera. Remote Sens. 2021, 13, 1852. https://doi.org/10.3390/rs13091852
