Registration for Optical Multimodal Remote Sensing Images Based on FAST Detection, Window Selection, and Histogram Specification

Abstract

1. Introduction

2. Materials and Methods

2.1. Imaging Systems and Test Images

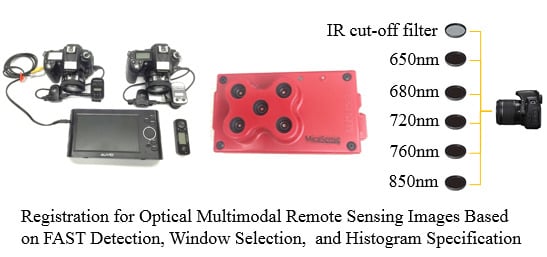

2.1.1. Multispectral Imaging Camera Based on Changeable Filters

2.1.2. Dual-Camera Imaging System

2.1.3. Five-Band Multispectral Imaging System

2.1.4. Test Images

2.2. Computer Platform and Software

2.3. Registration Method

2.3.1. Selection of Feature Detectors

2.3.2. Histogram Specification

2.3.3. Window Selection and Local Matching

2.3.4. Elimination of Mismatches and Global Transformation

3. Results

3.1. Comparison of Feature Detectors

3.2. Registration Result

3.3. Accuracy Assessment

4. Discussion

4.1. Search for the Appropriate Window Radius Size

4.2. Importance of Histogram Specification within Windows

4.3. Comparison of State-Of-The-Art Methods and the Proposed Method

4.4. Comparison of Software Embedded Methods and the Proposed Method

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Algorithm | Detection Time (s) | Count of Points | Detection Speed (μs/point) | Correct Matching Rate (%) |
|---|---|---|---|---|
| SIFT | 2.83 & 2.04 a | 724 & 355 | 3908.8 & 5746.5 | 95.5 (21/22) b |
| CSS | 1.07 & 0.65 | 750 & 347 | 1426.7 & 1873.2 | 69.2 (9/13) |
| Harris | 0.69 & 0.56 | 744 & 346 | 927.4 & 1618.5 | 78.3 (18/23) |
| SURF | 0.20 & 0.17 | 723 & 345 | 276.6 & 492.8 | 56.7 (38/67) |
| FAST | 0.10 & 0.09 | 741 & 341 | 135.0 & 263.9 | 95.0 (19/20) |
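The derived columns in the detector comparison can be reproduced from the raw columns. The short sketch below assumes that detection speed is detection time divided by point count (converted to microseconds) and that the correct matching rate is correct matches over total matches; both readings are inferred from the table values, and the function names are illustrative only.

```python
# Raw values copied from the detector comparison table:
# (detection time in s, point count) for each of the two images,
# then (correct matches, total matches).
TABLE = {
    "SIFT":   (((2.83, 724), (2.04, 355)), (21, 22)),
    "CSS":    (((1.07, 750), (0.65, 347)), (9, 13)),
    "Harris": (((0.69, 744), (0.56, 346)), (18, 23)),
    "SURF":   (((0.20, 723), (0.17, 345)), (38, 67)),
    "FAST":   (((0.10, 741), (0.09, 341)), (19, 20)),
}


def speed_us_per_point(seconds, points):
    """Assumed definition: average detection time per point, in microseconds."""
    return seconds / points * 1e6


def matching_rate_percent(correct, total):
    """Assumed definition: share of matches that are correct, in percent."""
    return 100.0 * correct / total


for name, (images, (correct, total)) in TABLE.items():
    speeds = [round(speed_us_per_point(t, n), 1) for t, n in images]
    rate = round(matching_rate_percent(correct, total), 1)
    print(f"{name:6s} speeds={speeds} rate={rate}")
```

Under these assumptions the recomputed figures agree with the tabulated ones, for example 2.83 s / 724 points ≈ 3908.8 μs/point for SIFT.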

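| ID | Sensor | Width (Pixel) | Image Set | Sensed | Reference | Optimal Radius (Pixel) | Ratio b | Appropriate Radius (Pixel) c |
|---|---|---|---|---|---|---|---|---|
| 1 | Multispectral camera based on changeable filters | 3264 | Set I-a | RGB | a LP650nm | 330 | 9.89 | 326 |
| 2 | Multispectral camera based on changeable filters | 3264 | Set I-a | RGB | LP680nm | 310 | 10.53 | 326 |
| 3 | Multispectral camera based on changeable filters | 3264 | Set I-a | RGB | LP720nm | 300 | 10.88 | 326 |
| 4 | Multispectral camera based on changeable filters | 3264 | Set I-a | RGB | LP760nm | 250 | 13.06 | 326 |
| 5 | Multispectral camera based on changeable filters | 3264 | Set I-a | RGB | LP850nm | 470 | 6.94 | 326 |
| 6 | Multispectral camera based on changeable filters | 3264 | Set I-b | RGB | LP650nm | 340 | 9.6 | 326 |
| 7 | Multispectral camera based on changeable filters | 3264 | Set I-b | RGB | LP680nm | 350 | 9.33 | 326 |
| 8 | Multispectral camera based on changeable filters | 3264 | Set I-b | RGB | LP720nm | 350 | 9.33 | 326 |
| 9 | Multispectral camera based on changeable filters | 3264 | Set I-b | RGB | LP760nm | 300 | 10.88 | 326 |
| 10 | Multispectral camera based on changeable filters | 3264 | Set I-b | RGB | LP850nm | 350 | 9.33 | 326 |
| 11 | Multispectral camera based on changeable filters | 3264 | Set I-c | RGB | LP680nm | 350 | 9.33 | 326 |
| 12 | Multispectral camera based on changeable filters | 3264 | Set I-c | RGB | LP720nm | 290 | 11.26 | 326 |
| 13 | Multispectral camera based on changeable filters | 3264 | Set I-c | RGB | LP850nm | 320 | 10.2 | 326 |
| 14 | Multispectral camera based on changeable filters | 3264 | Set I-c | RGB | a NP670nm | 390 | 8.37 | 326 |
| 15 | Multispectral camera based on changeable filters | 3264 | Set I-c | RGB | NP720nm | 370 | 8.82 | 326 |
| 16 | Multispectral camera based on changeable filters | 3264 | Set I-c | RGB | NP850nm | 330 | 9.89 | 326 |
| 17 | Multispectral camera based on changeable filters | 3264 | Set I-d | RGB | LP680nm | 290 | 11.26 | 326 |
| 18 | Multispectral camera based on changeable filters | 3264 | Set I-d | RGB | LP720nm | 390 | 8.37 | 326 |
| 19 | Multispectral camera based on changeable filters | 3264 | Set I-d | RGB | LP850nm | 370 | 8.82 | 326 |
| 20 | Multispectral camera based on changeable filters | 3264 | Set I-d | RGB | NP670nm | 370 | 8.82 | 326 |
| 21 | Multispectral camera based on changeable filters | 3264 | Set I-d | RGB | NP720nm | 360 | 9.07 | 326 |
| 22 | Multispectral camera based on changeable filters | 3264 | Set I-d | RGB | NP850nm | 350 | 9.33 | 326 |
| 23 | Dual-camera imaging system | 2848 | Set II-a | RGB | NIR | 350 | 8.14 | 285 |
| 24 | Dual-camera imaging system | 2848 | Set II-b | RGB | NIR | 230 | 12.38 | 285 |
| 25 | Dual-camera imaging system | 2848 | Set II-c | RGB | NIR | 160 | 17.8 | 285 |
| 26 | Dual-camera imaging system | 2848 | Set II-d | RGB | NIR | 200 | 14.24 | 285 |
| 27 | Five-band multispectral imaging system | 960 | Set III-a | B | G | 120 | 8 | 96 |
| 28 | Five-band multispectral imaging system | 960 | Set III-a | B | R | 80 | 12 | 96 |
| 29 | Five-band multispectral imaging system | 960 | Set III-a | B | RDG | 60 | 16 | 96 |
| 30 | Five-band multispectral imaging system | 960 | Set III-a | B | NIR | 60 | 16 | 96 |
| 31 | Five-band multispectral imaging system | 960 | Set III-b | B | G | 110 | 8.73 | 96 |
| 32 | Five-band multispectral imaging system | 960 | Set III-b | B | R | 70 | 13.71 | 96 |
| 33 | Five-band multispectral imaging system | 960 | Set III-b | B | RDG | 90 | 10.67 | 96 |
| 34 | Five-band multispectral imaging system | 960 | Set III-b | B | NIR | 60 | 16 | 96 |
| 35 | Five-band multispectral imaging system | 960 | Set III-c | B | G | 170 | 5.65 | 96 |
| 36 | Five-band multispectral imaging system | 960 | Set III-c | B | R | 150 | 6.4 | 96 |
| 37 | Five-band multispectral imaging system | 960 | Set III-c | B | RDG | 120 | 8 | 96 |
| 38 | Five-band multispectral imaging system | 960 | Set III-c | B | NIR | 130 | 7.38 | 96 |
| 39 | Five-band multispectral imaging system | 960 | Set III-d | B | G | 150 | 6.4 | 96 |
| 40 | Five-band multispectral imaging system | 960 | Set III-d | B | R | 140 | 6.86 | 96 |
| 41 | Five-band multispectral imaging system | 960 | Set III-d | B | RDG | 150 | 6.4 | 96 |
| 42 | Five-band multispectral imaging system | 960 | Set III-d | B | NIR | 160 | 6 | 96 |

Mean ratio ≈ 10.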
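The window-radius figures above appear to follow a simple rule, sketched here under two assumptions read off the table rather than stated in it: the ratio is the image width divided by the optimal radius, and the appropriate radius is the image width divided by the mean ratio (≈ 10), rounded to the nearest pixel. The function names are illustrative only.

```python
MEAN_RATIO = 10  # "Mean ratio ≈ 10" as reported under the table


def width_to_radius_ratio(width_px, optimal_radius_px):
    """Assumed definition of the Ratio column: width / optimal radius."""
    return width_px / optimal_radius_px


def appropriate_radius(width_px, mean_ratio=MEAN_RATIO):
    """Assumed rule for the Appropriate Radius column: width / mean ratio."""
    return round(width_px / mean_ratio)


# A few (width, optimal radius) pairs copied from the table, one per sensor.
samples = [(3264, 330), (2848, 350), (960, 120)]

for width, radius in samples:
    ratio = round(width_to_radius_ratio(width, radius), 2)
    print(f"width={width} optimal={radius} ratio={ratio} "
          f"appropriate={appropriate_radius(width)}")
```

With these assumptions, widths of 3264, 2848, and 960 pixels give radii of 326, 285, and 96 pixels, matching the values listed for the three imaging systems.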

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, X.; Zhang, J.; Yang, C.; Song, H.; Shi, Y.; Zhou, X.; Zhang, D.; Zhang, G. Registration for Optical Multimodal Remote Sensing Images Based on FAST Detection, Window Selection, and Histogram Specification. Remote Sens. 2018, 10, 663. https://doi.org/10.3390/rs10050663
