1. Introduction

Fingerprint biometrics is increasingly being used in the commercial, civilian, physiological, and financial domains based on two important characteristics of fingerprints: (1) fingerprints do not change with time and (2) every individual’s fingerprints are unique [

1,

2,

3,

4,

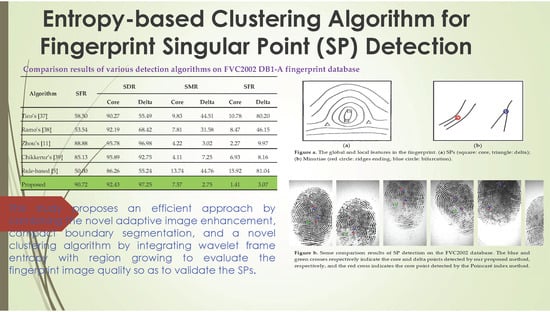

5]. Owing to these characteristics, fingerprints have long been used in automated fingerprint identification or verification systems. These systems rely on accurate recognition of fingerprint features. At the global level, fingerprints have ridge flows assembled in a specific formation, resulting in different ridge topology patterns such as core and delta (singular points (SPs)), as shown in

Figure 1a. These SPs are the basic features required for fingerprint classification and indexing. Local fingerprint features are carried by local ridge details such as ridge endings and bifurcations (minutiae), as shown in

Figure 1b. Fingerprint minutiae are often used to conduct matching tasks because they are generally stable and highly distinctive [

6].

Most previous SP extraction algorithms operate directly on fingerprint orientation images. The most popular method is based on the Poincaré index [7], which typically computes the accumulated rotation of the vector field along a closed curve surrounding a local point. Wang et al. [8] proposed a fingerprint orientation model based on 2D Fourier expansions to extract SPs independently. Nilsson and Bigun [9] as well as Liu [10] used the symmetry properties of SPs, applying a complex filter to the orientation field at multiple resolution scales to detect the parabolic and triangular symmetries associated with core and delta points. Zhou et al. [11] proposed a feature called differences of the orientation values along a circle (DORIC), used in addition to the Poincaré index to effectively remove spurious detections; they also took the topological relations of SPs as a global constraint for fingerprints and used the global orientation field for SP detection. Chen et al. [12] obtained candidate SPs by multiscale analysis of orientation entropy and then applied post-processing steps to filter out spurious core and delta points.

However, SP detection is sensitive to noise, and extracting SPs reliably is a very challenging problem. When input fingerprint images have poor quality, the performance of these methods degrades rapidly. Noise in fingerprint images makes SP extraction unreliable and may result in a missed or wrong detection. Therefore, fingerprint image enhancement is a key step before extracting SPs.

Fingerprint image enhancement remains an active area of research. Researchers have attempted to reduce noise and improve the contrast between ridges and valleys in fingerprint images. Most fingerprint image enhancement algorithms are based on the estimation of an orientation field [13,14,15]. Some methods use variations of Gabor filters to enhance fingerprint images [16,17]. These methods are based on the estimation of a single orientation and a single frequency; they can remove undesired noise and preserve and improve the clarity of ridge and valley structures in images. However, they are not suitable for enhancing ridges in regions with high curvature. Wang and Wang [18] first detected the SP area and then improved it by applying a bandpass filter in the Fourier domain. However, detecting the SP region is very difficult when the fingerprint image has extremely poor quality. Yang et al. [19] first enhanced fingerprint images in the spatial domain with a spatial ridge-compensation filter learned from the images and then used a frequency bandpass filter that is separable in the radial- and angular-frequency domains. Yun and Cho [20] analyzed fingerprint images, divided them into oily, neutral, and dry according to their properties, and then applied a specific enhancement strategy for each type. To enhance fingerprint images, Fronthaler et al. [21] used a Laplacian-like image pyramid to decompose the original fingerprint into subbands corresponding to different spatial scales and then performed contextual smoothing on these pyramid levels, where the corresponding filtering directions stem from the frequency-adapted structure tensor. Bennet and Perumal [22] transformed fingerprint images into the wavelet domain and then used singular value decomposition (SVD) to decompose the low subband coefficient matrix. Fingerprint images were enhanced by multiplying the singular value matrix of the low-low (LL) subband by the ratio of the largest singular value of a generated normalized matrix (mean of 0 and variance of 1) to the largest singular value of the LL subband. However, the resulting images were sometimes uneven because SVD was applied only to the low subband and a generated normalized matrix was used. To overcome this problem, Wang et al. [23] introduced a novel lighting compensation scheme involving the use of adaptive SVD on wavelet coefficients. First, they decomposed the input fingerprint image into four subbands by 2D discrete wavelet transform (DWT). Subsequently, they compensated fingerprint images by adaptively obtaining the compensation coefficients for each subband based on the referred Gaussian template.

The aforementioned methods for enhancing fingerprint images can reduce noise and improve the contrast between ridges and valleys in the images. However, they are not very effective for fingerprint images of very poor quality, particularly blurred ones. To overcome this problem, we need to segment the fingerprint foreground, with its interleaved ridge and valley structure, from the complex background with non-fingerprint patterns for more accurate and efficient feature extraction and identification. Many studies have investigated segmentation on rolled and plain fingerprint images. Mehtre et al. [24] partitioned a fingerprint image into blocks and then classified the blocks based on gradient and variance information to segment the image. This method was further extended to a composite method [25] that takes advantage of both the directional and the variance approaches. Zhang et al. [26] proposed an adaptive total variation decomposition model by incorporating the orientation field and local orientation coherence for latent fingerprint segmentation. Based on a ridge quality measure defined as the structural similarity between the fingerprint patch and its dictionary-based reconstruction, Cao et al. [27] proposed a learning-based method for latent fingerprint image segmentation.

This study proposes an efficient approach that combines a novel adaptive image enhancement, compact boundary segmentation, and a novel clustering algorithm that integrates wavelet frame entropy with region growing to evaluate the fingerprint image quality and thereby validate the SPs. Experiments were conducted on the FVC2002 DB1 and FVC2002 DB2 databases [28]. The experimental results indicate the excellent performance of the proposed method.

The rest of this paper is organized as follows. Section 2 introduces the proposed image enhancement, precise boundary segmentation, and blurring detection based on a wavelet entropy clustering algorithm. Section 3 describes the proposed algorithm for SP detection. Section 4 presents experimental results to verify the proposed approach. Finally, Section 5 presents the conclusions of this study.

3. SP Detection

In general, SPs of a fingerprint are detected by a Poincaré index-based algorithm. However, the Poincaré index method usually results in considerable spurious detection, particularly for low-quality fingerprint images. This is because the conventional Poincaré index along the boundary of a given region equals the sum of the Poincaré indices of the core points within this region, and it contains no information about the characteristics and cannot describe the core point completely. To overcome the shortcoming of the Poincaré index method, we propose an adaptive method based on wavelet extrema for core point detection. Wavelet extrema contain information on both the transform modulus maxima and minima in the image, considered to be among the most meaningful features for signal characterization.

First, we align the ROI based on the Poincaré index core points and the local orientation field. The Poincaré index at pixel (x, y), which is enclosed by 12 direction fields taken in a counterclockwise direction, is calculated as follows:

where

and

where

and

are the paired neighboring coordinates of the direction fields. A core point has a Poincaré index of +1/2. By contrast, a delta point has a Poincaré index of −1/2. The core points detected in this step are called rough core points.
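As an illustration of the Poincaré index computation described above, the following minimal sketch uses the commonly cited formulation: the orientation differences between consecutive points on a closed curve are wrapped into (−π/2, π/2], summed, and divided by 2π. The function name `poincare_index` and the list-based input are assumptions made for this example, and the wrapping rule may differ in detail from the equations used in this paper.

```python
import math


def poincare_index(thetas):
    """Poincare index of a closed curve (illustrative sketch).

    `thetas` holds the ridge orientations in radians (defined modulo pi)
    sampled along the curve in counterclockwise order, e.g. the 12
    direction fields surrounding a pixel.
    """
    total = 0.0
    n = len(thetas)
    for k in range(n):
        # Difference between consecutive orientations, closing the loop.
        d = thetas[(k + 1) % n] - thetas[k]
        # Orientations are only defined modulo pi, so wrap the
        # difference into the interval (-pi/2, pi/2].
        if d > math.pi / 2:
            d -= math.pi
        elif d <= -math.pi / 2:
            d += math.pi
        total += d
    return total / (2 * math.pi)


# Synthetic orientation samples around an ideal core and an ideal delta.
core = [(math.pi * k / 12) % math.pi for k in range(12)]
delta = [(-math.pi * k / 12) % math.pi for k in range(12)]
print(poincare_index(core), poincare_index(delta))
```

With these synthetic samples the index is approximately +1/2 for the core pattern and −1/2 for the delta pattern, which matches the values stated in the text.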

Next, we align the fingerprint image under the right-angle coordinate system based on the number and location of preliminary core points. Because fingerprints may have different numbers of cores, the first step in alignment is to adopt the preliminary Poincaré indexed positions as a reference. If the number of preliminary cores is 2, the image is rotated along the orientation calculated from the midpoint between the two cores. If the number of cores is equal to 1, the image is rotated along the direction calculated from the neighboring orientation of the core. If the number of cores is zero, the fingerprint is kept intact. The rotation angle is calculated as follows:

where

is the local orientation around a pixel and

is the core subregion of interest (COI) centered at the Poincaré index core point (xc, yc) with a size of 60 × 60 pixels, which was chosen to avoid possible variability near the boundary while the finger is being scanned by the reader. Fingerprint alignment is performed to make the pattern rotation-invariant and to reduce the false rejection rate. The rotation is given by the following equation:

and point (x, y) with orientation angle

is mapped to point

Figure 5 shows examples of fingerprint alignment by our method for different numbers of cores.
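To make the point mapping concrete, the sketch below applies a standard planar rotation about a reference point and shifts the ridge orientation by the same angle. The function name `rotate_point`, the choice of rotation center, and the sign convention are assumptions for illustration; they are not taken from the paper's rotation equation.

```python
import math


def rotate_point(x, y, theta, xc, yc, angle):
    """Rotate (x, y) about (xc, yc) by `angle` radians (illustrative).

    `theta` is the ridge orientation at (x, y); it is shifted by the
    same angle and reduced modulo pi, since orientations have no sign.
    """
    dx, dy = x - xc, y - yc
    c, s = math.cos(angle), math.sin(angle)
    x_new = xc + c * dx - s * dy
    y_new = yc + s * dx + c * dy
    theta_new = (theta + angle) % math.pi
    return x_new, y_new, theta_new


# Rotate a point one pixel to the right of the center by 90 degrees.
print(rotate_point(1.0, 0.0, 0.0, 0.0, 0.0, math.pi / 2))
```

In image coordinates the y axis usually points downward, so the visual direction of rotation depends on the coordinate convention in use.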

After alignment, the COI subregion with a size of 60 × 60 pixels centered at the point detected by the Poincaré index is further segmented from the aligned image. The COI then goes through a skeletonization process to peel off as many ridge pixels as possible without affecting the general shape of the ridge, as shown in Figure 6a, and is then transformed to a skeletonized ridge image, as shown in Figure 6b. The skeletonized ridge image is used to compute the wavelet extrema, as shown in Figure 6c.

Wavelet modulus maxima representations for two-dimensional signals were proposed by Mallat [33] as a tool for extracting information on singularities, which are considered to be among the most meaningful features for signal characterization. Most wavelet transform local extrema are actually modulus maxima (there are examples of signals for which the wavelet extrema and modulus maxima representations are the same). The set of indices and the local maximum, denoted as

, and local minimum, denoted as

, of skeletonized ridge image f are defined as follows:

where z ∈ Z. Similarly, the indices and values of the wavelet transform extrema for an image f are defined as follows:

where

is the 2D non-separable wavelet transform of image f at scale j, j = 1, 2, …, J. The SP of a fingerprint image can be found by extracting curvature primitives and discovering the location of these primitives in the subregion, as shown in Figure 6c.
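The notion of local maxima and minima indexed by integer positions can be illustrated on a one-dimensional sequence, for example wavelet coefficients sampled along a ridge curve. The sketch below is only an assumption-based illustration: the function name `local_extrema` and the exact inequalities (strict on the left, non-strict on the right, so that a plateau is reported once) are choices made here and not the paper's definitions.

```python
def local_extrema(values):
    """Return (maxima, minima) as lists of (index, value) pairs.

    An interior sample is a local maximum if it is greater than its
    left neighbor and not smaller than its right neighbor; local
    minima are defined symmetrically. Endpoints are never reported.
    """
    maxima, minima = [], []
    for z in range(1, len(values) - 1):
        left, mid, right = values[z - 1], values[z], values[z + 1]
        if mid > left and mid >= right:
            maxima.append((z, mid))
        elif mid < left and mid <= right:
            minima.append((z, mid))
    return maxima, minima


maxima, minima = local_extrema([0, 2, 1, 3, 0])
print(maxima)  # [(1, 2), (3, 3)]
print(minima)  # [(2, 1)]
```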

We find the exact location of the core point defined by the Henry system by tracing the skeletonized ridge curves with 8-adjacency to explore wavelet extrema in 1-pixel increments, starting 10 pixels away from the two sides. The highest extrema in the ridge curve correspond to core point candidates. We devise two 8-adjacency grids to locate the wavelet extrema (Figure 7a,b). Beginning from two opposite ends and moving toward the center of the subregion, the black-colored pixel of each grid is designated as the central point to trace. Based on this central point, the moving guideline is as follows: if the gray level of an adjacent pixel is 0, then move toward that pixel, where the number shown in the grid indicates the moving sequence. This method enables one to follow the real track of the ridge curve. Whenever a singularity is detected, its location is noted.

Figure 7c shows that it is common to find multiple core point candidates with small vertical displacements, and the area below the lowest ridge curve is circumscribed for locating the core point. In the Henry system, exact core point location can be performed as follows: (a) locate the topmost extrema in the innermost ridge curve if there is no rod; (b) otherwise, locate the top of the rods. The following equation summarizes this process:

where s is the determined core point, i is the number of rods below the innermost ridge curve,

is the topmost extrema in the innermost ridge curve, and

is the located rod extrema below the innermost ridge curve.

Figure 7d presents an example marked with the blue cross.
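The selection rule stated above (use the topmost extremum of the innermost ridge curve when there is no rod, otherwise the top of the rods) can be sketched as follows. The function name `select_core_point`, the (x, y) tuple representation, and the assumption that y grows downward so that "topmost" means smallest y are all illustrative choices.

```python
def select_core_point(innermost_extrema, rod_extrema):
    """Pick the core point from candidate extrema (illustrative sketch).

    Both arguments are lists of (x, y) pixel coordinates, with y
    increasing downward as in typical image coordinates. If any rod
    extrema exist below the innermost ridge curve, the topmost rod
    extremum is returned; otherwise the topmost extremum of the
    innermost ridge curve is returned. Returns None with no candidates.
    """
    candidates = rod_extrema if rod_extrema else innermost_extrema
    if not candidates:
        return None
    return min(candidates, key=lambda point: point[1])


print(select_core_point([(30, 20), (31, 22)], []))                    # (30, 20)
print(select_core_point([(30, 20), (31, 22)], [(30, 28), (32, 26)]))  # (32, 26)
```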

4. Experimental Results and Discussion

In this section, to illustrate the effectiveness of our proposed method, we present experiments on the FVC2002 DB1 and DB2 fingerprint databases. FVC2002 includes four databases, namely, DB1, DB2, DB3, and DB4, collected using different sensors or technologies that are widely used in practice. Each database is 110 fingers wide (w) and 8 impressions per finger deep (d) (880 fingerprints in all). Fingerprints from 101 to 110 (set B) were made available to the participants to allow for parameter tuning before the submission of the algorithms. The benchmark then consists of the fingers numbered from 1 to 100 (set A). Volunteers were randomly partitioned into three groups (30 persons each); each group was associated with a database and therefore with a different fingerprint scanner. Each volunteer was invited to attend three distinct collection sessions, with at least two weeks between sessions. The forefinger and middle finger of both hands (in total, four fingers) of each volunteer were acquired by interleaving the acquisition of the different fingers to maximize differences in finger placement. No efforts were made to control image quality, and the sensor platens were not systematically cleaned. In each session, four impressions were acquired of each of the four fingers of each volunteer. During the second session, individuals were requested to exaggerate the displacement (impressions 1 and 2) and rotation (impressions 3 and 4) of the finger without exceeding 35°. During the third session, fingers were alternately dried (impressions 1 and 2) and moistened (impressions 3 and 4).

The SPs of all fingerprints in the testing database were manually labeled beforehand to obtain the ground truth. For a ground-truth SP, a detected SP is said to be truly detected if the Euclidean distance between the two is at most 10 pixels; otherwise, it is called a miss.

The singular point detection rate (SDR) is defined as the ratio of truly detected SPs to all ground-truth SPs:

The singular point miss rate (SMR) is defined as the ratio of the number of missed SPs to the number of all ground-truth SPs. The sum of the detection rate and miss rate is 100%:

The singular point false alarm rate (SFR) is defined as the ratio of the number of falsely detected SPs to the total number of ground-truth SPs:

The singular point correctly detected rate (SCR) is defined as the ratio of all truly detected SPs to all detected SPs in a fingerprint of all fingerprint images:
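The four rates defined in prose above can be computed as in the following minimal sketch. It assumes the 10-pixel Euclidean tolerance mentioned elsewhere in this paper, a greedy nearest-neighbor matching between ground-truth and detected SPs, and a literal reading of SCR as truly detected SPs over all detected SPs. The function name `evaluate_sp_detection` and the matching strategy are assumptions, not the authors' evaluation code.

```python
import math


def evaluate_sp_detection(ground_truth, detected, tol=10.0):
    """Return (SDR, SMR, SFR, SCR) as fractions (illustrative sketch).

    `ground_truth` and `detected` are lists of (x, y) coordinates.
    Each ground-truth SP is matched to at most one detection lying
    within `tol` pixels (greedy, nearest first: an assumption).
    """
    if not ground_truth:
        raise ValueError("at least one ground-truth SP is required")
    unmatched = list(detected)
    true_hits = 0
    for gx, gy in ground_truth:
        best = None
        for point in unmatched:
            dist = math.hypot(point[0] - gx, point[1] - gy)
            if dist <= tol and (best is None or dist < best[0]):
                best = (dist, point)
        if best is not None:
            true_hits += 1
            unmatched.remove(best[1])
    n_gt = len(ground_truth)
    sdr = true_hits / n_gt                 # truly detected / ground truth
    smr = (n_gt - true_hits) / n_gt        # missed / ground truth
    sfr = len(unmatched) / n_gt            # false detections / ground truth
    scr = true_hits / len(detected) if detected else 0.0
    return sdr, smr, sfr, scr


# One of two ground-truth SPs is found; one detection is spurious.
print(evaluate_sp_detection([(100, 100), (200, 150)], [(104, 103), (50, 50)]))
```

By construction, SDR and SMR sum to 1 (100%), as stated in the text.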

First, the compensation weight coefficients are calculated by using Equation (4) and the equalized image,

, having the same size as the original fingerprint image can be generated by Equation (5).

Figure 8 and Figure 9 show example image results of the proposed method for FVC2002 DB1 and DB2, respectively. As shown in Figure 8c and Figure 9b, the background of the fingerprint image has been removed, thereby providing an image with a nearly normal distribution. The method also improves the clarity and continuity of ridge structures in the fingerprint image.

Then, we demonstrate the effectiveness of our method by comparing the amount of information in the enhanced and original fingerprint images using image entropy. The entropy of information H was introduced by Shannon [34] in 1948, and it can be calculated by the following equation:

where

denotes the probability mass function of gray level i, and it is calculated as follows:

In digital image processing, entropy is a measure of an image’s information content, which is interpreted as the average uncertainty of the information source. The entropy of an image can be used for measuring image visual aspects [35] or for gathering information to be used as parameters in some systems [36]. Higher entropy implies that an image contains more information.
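For reference, the Shannon entropy of a gray-level image is commonly computed as the negative sum over gray levels of p(i) log2 p(i), where p(i) is the fraction of pixels with gray level i. The sketch below follows that standard definition; the function name `image_entropy` and the flat pixel list input are assumptions made for this example.

```python
import math
from collections import Counter


def image_entropy(pixels):
    """Shannon entropy in bits of an iterable of gray levels."""
    counts = Counter(pixels)
    total = sum(counts.values())
    # p(i) = (number of pixels with gray level i) / (total pixels)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


print(image_entropy([0, 0, 255, 255]))   # two equally likely levels: 1.0
print(image_entropy([0, 64, 128, 255]))  # four equally likely levels: 2.0
```

An image with a single gray level has zero entropy, while an 8-bit image with a perfectly flat histogram reaches the maximum of 8 bits.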

Entropy is measured to quantify the information produced from the enhanced image. For good enhancement, the entropy of the enhanced image should be close to that of the original image. This small difference between entropies of the original and the enhanced images indicates that the image details are preserved. It also shows that the histogram shape is maintained; thus, the saturation case can be avoided.

Table 2 shows the entropy of equalized images compared with original images for each image shown in Figure 8 and Figure 9. The results show that the equalized fingerprint images have smaller entropy, yet remain close to the entropy of the original images. This suggests that our method removes noise from the original image while retaining the structure of the fingerprint image.

Next, the equalized fingerprint image was used to determine the contour and detect the blurred region of the fingerprint, as discussed in Section 2.2 and Section 2.3. Figure 10 shows the binarized image obtained by applying Equation (8) to the equalized image. Based on the binary images, as shown in Figure 10b, we can detect the region of impression (ROI), and the contour of the fingerprint is acquired as a polygon, as shown in Figure 10c.

Figure 11b presents the blur detection result obtained by 2D non-separable wavelet entropy filtering for low-quality images, as discussed in Section 2.3. In what follows, an ROI with a 30% blur region is considered to have bad quality, and its SP detection is considered unreliable.
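The quality rule above can be expressed as a simple ratio test, sketched below. The function name `roi_quality_is_bad`, the nested-list mask representation, the reading of the rule as "30% or more of the ROI is blurred", and the treatment of an empty ROI as bad quality are all assumptions made for illustration.

```python
def roi_quality_is_bad(blur_mask, roi_mask, threshold=0.30):
    """Flag an ROI as bad quality when its blurred fraction is large.

    `blur_mask` and `roi_mask` are equally sized 2D lists of 0/1
    values. Only pixels inside the ROI are counted. An empty ROI is
    treated as bad quality (an assumption of this sketch).
    """
    roi_pixels = 0
    blurred = 0
    for blur_row, roi_row in zip(blur_mask, roi_mask):
        for is_blurred, in_roi in zip(blur_row, roi_row):
            if in_roi:
                roi_pixels += 1
                if is_blurred:
                    blurred += 1
    if roi_pixels == 0:
        return True
    return blurred / roi_pixels >= threshold


roi = [[1, 1, 1, 1, 1], [1, 1, 1, 1, 1]]
blur = [[1, 1, 1, 1, 0], [0, 0, 0, 0, 0]]
print(roi_quality_is_bad(blur, roi))  # 4 of 10 ROI pixels blurred: True
```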

Our experiments were conducted on the FVC2002 DB1_A and FVC2002 DB2_A databases. We compared the results of our proposed SP detection with those obtained using other methods, including a rule-based algorithm [5], Zhou’s algorithm [11], Tico’s algorithm [37], Ramo’s algorithm [38], and Chikkerur and Ratha’s algorithm [39]. In these methods, the localization error of singular points was measured by Euclidean distance. Although no standard definition of a correct detection exists, we focused on detecting singular points precisely and followed the convention of allowing a 10-pixel deviation between the expected and the detected singular points to validate the performance of the proposed method. In addition, singular point detection based on the Poincaré index method is sensitive to low-quality fingerprints. In this paper, we show that by combining a novel adaptive image enhancement, compact boundary segmentation, and the non-separable discrete wavelet transform (NSDWT) for localization, the detection of singular points becomes more robust. Moreover, a novel clustering algorithm that integrates wavelet frame entropy with region growing is introduced to evaluate the fingerprint image quality and thereby validate the detected singular points.

Table 3 and Table 4 show the correctly detected rate, detection rate, miss rate, and false alarm rate. The results in the tables indicate that our method not only has a higher correctly detected rate than the other methods but also has a low false alarm rate. Figure 12 presents the results of truly detected SPs on the FVC2002 database; the detected core and delta points lie close to the ground-truth SPs. Figure 13 presents some comparison results of SP detection for the FVC2002 database using our proposed method and the Poincaré index method. In this figure, blue and green crosses indicate the core and delta points, respectively, detected by our proposed method, and the red cross indicates the core point detected by the Poincaré index method. The results show that the locations of the SPs detected using our method are more accurate than those of the SPs detected using the Poincaré index method.