Key Points

-

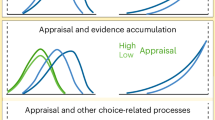

Most behavioural and computational models of decision making assume that the following five processes are carried out at the time the decision is made: representation, action valuation, action selection, outcome valuation, and learning.

-

On the basis of a sizeable body of animal and human behavioural evidence, several groups have proposed the existence of three different types of valuation systems: Pavlovian, habitual and goal-directed systems.

-

Pavlovian systems assign value to only a small set of 'prepared' behaviours and thus have a limited behavioural repertoire. Nevertheless, they might be the driving force behind behaviours with important economic consequences (for example, overeating). Examples include preparatory behaviours, such as approaching a cue that predicts food, and consummatory behaviours, such as ingesting available food.

-

Habit valuation systems learn to assign values to stimulus–response associations on the basis of previous experience through a process of trial-and-error. Examples of habits include a smoker's desire to have a cigarette at particular times of day (for example, after a meal) and a rat's tendency to forage in a cue-dependent location after sufficient training.

-

Goal-directed systems assign values to actions by computing action–outcome associations and then evaluating the rewards that are associated with the different outcomes. An example of a goal-directed behaviour is the decision what to eat at a new restaurant.

-

An important difference between habitual and goal-directed systems has to do with how they respond to changes in the environment. The goal-directed system updates the value of an action as soon as the value of its outcome changes, whereas the habit system only learns with repeated experience.

-

The values computed by the three systems can be modulated by factors such as the risk that is associated with the decision, the time delay to the outcomes, and social considerations.

-

The quality of the decisions made by an animal depend on how its brain assigns control to the different valuation systems in situations in which it has to make a choice between several potential actions that are assigned conflicting values.

-

The learning properties of the habit system seem to be well-described by simple reinforcement algorithms, such as Q-learning. Some of the key computations that are predicted by these models are instantiated in the dopamine system.
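The contrast drawn in the key points, between a habit system that learns values by trial and error and a goal-directed system that re-evaluates an action as soon as its outcome changes, can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not the authors' model: a single-state task with two hypothetical actions (`press_lever`, `pull_chain`) that each deterministically yield one outcome; habit values are cached Q-values updated by tabular Q-learning with an assumed learning rate `ALPHA`; goal-directed values are recomputed from an action–outcome map and the current outcome values. The returned `delta` is the reward-prediction error that the key points associate with the dopamine system.

```python
from typing import Dict, Optional

ALPHA = 0.1  # learning rate (assumed)
GAMMA = 0.9  # discount factor (assumed); only matters when a non-terminal next state is given

# Hypothetical task: in one state, each action deterministically yields one outcome.
ACTION_OUTCOME = {"press_lever": "food", "pull_chain": "water"}


def q_learning_update(q: Dict[str, float], action: str, reward: float,
                      next_q: Optional[Dict[str, float]] = None) -> float:
    """Tabular Q-learning step (habit system): update q in place, return the prediction error."""
    # Bootstrap from the best action value in the next state; a terminal state contributes 0.
    future = max(next_q.values()) if next_q else 0.0
    delta = reward + GAMMA * future - q[action]  # reward-prediction error
    q[action] += ALPHA * delta
    return delta


def goal_directed_values(outcome_value: Dict[str, float]) -> Dict[str, float]:
    """Goal-directed valuation: look up each action's outcome and its current value."""
    return {a: outcome_value[o] for a, o in ACTION_OUTCOME.items()}


# Training: both outcomes are worth 1.0 and each action is experienced 50 times.
outcome_value = {"food": 1.0, "water": 1.0}
habit_q = {a: 0.0 for a in ACTION_OUTCOME}
for _ in range(50):
    for action, outcome in ACTION_OUTCOME.items():
        q_learning_update(habit_q, action, outcome_value[outcome])

# Devaluation (for example, satiety on food): no new experience has occurred yet.
outcome_value["food"] = 0.0
print("habit values:", {a: round(v, 3) for a, v in habit_q.items()})
print("goal values: ", goal_directed_values(outcome_value))

# The cached habit value of press_lever falls only as the devalued outcome is experienced.
for _ in range(20):
    q_learning_update(habit_q, "press_lever", outcome_value["food"])
print("habit value of press_lever after 20 devalued trials:", round(habit_q["press_lever"], 3))
```

Just after devaluation, the goal-directed value of `press_lever` drops to zero at once, while its cached Q-value stays near the trained level and declines only over the subsequent devalued trials, mirroring the behavioural dissociation described above.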

Abstract

Neuroeconomics is the study of the neurobiological and computational basis of value-based decision making. Its goal is to provide a biologically based account of human behaviour that can be applied in both the natural and the social sciences. This Review proposes a framework to investigate different aspects of the neurobiology of decision making. The framework allows us to bring together recent findings in the field, highlight some of the most important outstanding problems, define a common lexicon that bridges the different disciplines that inform neuroeconomics, and point the way to future applications.


References

Busemeyer, J. R. & Johnson, J. G. in Handbook of Judgment and Decision Making (eds Koehler, D. & Narvey, N.) 133–154 (Blackwell Publishing Co., New York, 2004).

Mas-Colell, A., Whinston, M. & Green, J. Microeconomic Theory (Cambridge Univ. Press, Cambridge, 1995).

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction (MIT Press, Cambridge, Massachusetts, 1998).

Dickison, A. & Balleine, B. W. in Steven's Handbook of Experimental Psychology Vol. 3 Learning, Motivation & Emotion (ed. Gallistel, C.) 497–533 (Wiley & Sons, New York, 2002).

Dayan, P. in Better Than Conscious? Implications for Performance and Institutional Analysis (eds Engel, C. & Singer, W.) 51–70 (MIT Press, Cambridge, Massachusetts, 2008).

Balleine, B. W., Daw, N. & O'Doherty, J. in Neuroeconomics: Decision-Making and the Brain (eds Glimcher, P. W., Fehr, E., Camerer, C. & Poldrack, R. A.) 365–385 (Elsevier, New York, 2008).

Bouton, M. E. Learning and Behavior: A Contemporary Synthesis (Sinauer Associates, Inc., Sunderland, Massachusetts, 2007). This book reviews a large amount of evidence pointing to multiple valuation systems being active in value-based decision making.

Dayan, P. & Seymour, B. in Neuroeconomics: Decision Making and the Brain (eds Glimcher, P. W., Camerer, C. F., Fehr, E. & Poldrack, R. A.) 175–191 (Elsevier, New York, 2008).

Dayan, P., Niv, Y., Seymour, B. & Daw, N. D. The misbehavior of value and the discipline of the will. Neural Netw. 19, 1153–1160 (2006). This paper provided several models of how “pathological behaviours” can arise from the competition process between Pavlovian, habitual and goal-directed valuation systems.

Keay, K. A. & Bandler, R. Parallel circuits mediating distinct emotional coping reactions to different types of stress. Neurosci. Biobehav. Rev. 25, 669–678 (2001).

Cardinal, R. N., Parkinson, J. A., Hall, J. & Everitt, B. J. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci. Biobehav. Rev. 26, 321–352 (2002).

Holland, P. C. & Gallagher, M. Amygdala-frontal interactions and reward expectancy. Curr. Opin. Neurobiol. 14, 148–155 (2004).

Fendt, M. & Fanselow, M. S. The neuroanatomical and neurochemical basis of conditioned fear. Neurosci. Biobehav. Rev. 23, 743–760 (1999).

Adams, D. B. Brain mechanisms of aggressive behavior: an updated review. Neurosci. Biobehav. Rev. 30, 304–318 (2006).

Niv, Y. in Neuroscience (Hebrew University, Jerusalem, 2007).

Dayan, P. & Abbott, L. R. Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems (MIT Press, Cambridge, Massachusetts, 1999).

Balleine, B. W. Neural bases of food-seeking: affect, arousal and reward in corticostriatolimbic circuits. Physiol. Behav. 86, 717–730 (2005). This important paper reviews a large amount of evidence pointing to multiple valuation systems being active in value-based decision making.

Yin, H. H. & Knowlton, B. J. The role of the basal ganglia in habit formation. Nature Rev. Neurosci. 7, 464–476 (2006).

Killcross, S. & Coutureau, E. Coordination of actions and habits in the medial prefrontal cortex of rats. Cereb. Cortex 13, 400–408 (2003).

Coutureau, E. & Killcross, S. Inactivation of the infralimbic prefrontal cortex reinstates goal-directed responding in overtrained rats. Behav. Brain Res. 146, 167–174 (2003).

Yin, H. H., Knowlton, B. J. & Balleine, B. W. Blockade of NMDA receptors in the dorsomedial striatum prevents action-outcome learning in instrumental conditioning. Eur. J. Neurosci. 22, 505–512 (2005).

Wallis, J. D. & Miller, E. K. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur. J. Neurosci. 18, 2069–2081 (2003).

Padoa-Schioppa, C. & Assad, J. A. Neurons in the orbitofrontal cortex encode economic value. Nature 441, 223–226 (2006). This paper showed that neurons in the monkey OFC encode the goal value of individual rewarding objects (for example, different liquids) irrespective of the action that needs to be taken to obtain them.

Wallis, J. D. Orbitofrontal cortex and its contribution to decision-making. Annu. Rev. Neurosci. 30, 31–56 (2007).

Barraclough, D. J., Conroy, M. L. & Lee, D. Prefrontal cortex and decision making in a mixed-strategy game. Nature Neurosci. 7, 404–410 (2004).

Schoenbaum, G. & Roesch, M. Orbitofrontal cortex, associative learning, and expectancies. Neuron 47, 633–636 (2005).

Tom, S. M., Fox, C. R., Trepel, C. & Poldrack, R. A. The neural basis of loss aversion in decision-making under risk. Science 315, 515–518 (2007). This fMRI study showed that the striatal-OFC network encodes a value signal at the time of the goal-directed choice that is consistent with the properties of PT. Furthermore, the study presented evidence that suggests that both the appetitive and the aversive aspects of goal-directed decisions might be encoded in a common valuation network.

Plassmann, H., O'Doherty, J. & Rangel, A. Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. J. Neurosci. 27, 9984–9988 (2007).

Hare, T., O'Doherty, J., Camerer, C. F., Schultz, W. & Rangel, A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J. Neurosci. (in the press).

Paulus, M. P. & Frank, L. R. Ventromedial prefrontal cortex activation is critical for preference judgments. Neuroreport 14, 1311–1315 (2003).

Erk, S., Spitzer, M., Wunderlich, A. P., Galley, L. & Walter, H. Cultural objects modulate reward circuitry. Neuroreport 13, 2499–2503 (2002).

Fellows, L. K. & Farah, M. J. The role of ventromedial prefrontal cortex in decision making: judgment under uncertainty or judgment per se? Cereb. Cortex 17, 2669–2674 (2007).

Lengyel, M. & Dayan, P. Hippocampal contributions to control: the third way. NIPS [online] (2007).

Montague, P. R. Why Choose This Book? (Dutton, 2006).

Fehr, E. & Camerer, C. F. Social neuroeconomics: the neural circuitry of social preferences. Trends Cogn. Sci. 11, 419–427 (2007).

Lee, D. Game theory and neural basis of social decision making. Nature Neurosci. 11, 404–409 (2008).

Platt, M. L. & Huettel, S. A. Risky business: the neuroeconomics of decision making under uncertainty. Nature Neurosci. 11, 398–403 (2008).

Paulus, M. P., Rogalsky, C., Simmons, A., Feinstein, J. S. & Stein, M. B. Increased activation in the right insula during risk-taking decision making is related to harm avoidance and neuroticism. Neuroimage 19, 1439–1448 (2003).

Leland, D. S. & Paulus, M. P. Increased risk-taking decision-making but not altered response to punishment in stimulant-using young adults. Drug Alcohol Depend. 78, 83–90 (2005).

Paulus, M. P. et al. Prefrontal, parietal, and temporal cortex networks underlie decision-making in the presence of uncertainty. Neuroimage 13, 91–100 (2001).

Huettel, S. A., Song, A. W. & McCarthy, G. Decisions under uncertainty: probabilistic context influences activation of prefrontal and parietal cortices. J. Neurosci. 25, 3304–3311 (2005).

Bossaerts, P. & Hsu, M. in Neuroeconomics: Decision Making and the Brain (eds Glimcher, P. W., Camerer, C. F., Fehr, E. & Poldrack, R. A.) 351–364 (Elsevier, New York, 2008).

Preuschoff, K. & Bossaerts, P. Adding prediction risk to the theory of reward learning. Ann. NY Acad. Sci. 1104, 135–146 (2007).

Preuschoff, K., Bossaerts, P. & Quartz, S. R. Neural differentiation of expected reward and risk in human subcortical structures. Neuron 51, 381–390 (2006).

Tobler, P. N., O'Doherty, J. P., Dolan, R. J. & Schultz, W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J. Neurophysiol. 97, 1621–1632 (2007).

Rolls, E. T., McCabe, C. & Redoute, J. Expected value, reward outcome, and temporal difference error representations in a probabilistic decision task. Cereb. Cortex 18, 652–663 (2007).

Dreher, J. C., Kohn, P. & Berman, K. F. Neural coding of distinct statistical properties of reward information in humans. Cereb. Cortex 16, 561–573 (2006).

Preuschoff, K., Quartz, S. R. & Bossaerts, P. Human insula activation reflects prediction errors as well as risk. J. Neurosci. 28, 2745–2752 (2008). This fMRI study shows that the human insula encodes risk-prediction errors that could be used to learn the riskiness of different options and that are complementary to reward-prediction errors.

Tobler, P. N., Fiorillo, C. D. & Schultz, W. Adaptive coding of reward value by dopamine neurons. Science 307, 1642–1645 (2005).

Platt, M. L. & Glimcher, P. W. Neural correlates of decision variables in parietal cortex. Nature 400, 233–238 (1999).

Camerer, C. F. & Weber, M. Recent developments in modelling preferences: uncertainty and ambiguity. J. Risk Uncertain. 5, 325–370 (1992).

Hsu, M., Bhatt, M., Adolphs, R., Tranel, D. & Camerer, C. F. Neural systems responding to degrees of uncertainty in human decision-making. Science 310, 1680–1683 (2005).

Huettel, S. A., Stowe, C. J., Gordon, E. M., Warner, B. T. & Platt, M. L. Neural signatures of economic preferences for risk and ambiguity. Neuron 49, 765–775 (2006).

Hertwig, R., Barron, G., Weber, E. U. & Erev, I. Decisions from experience and the effect of rare events in risky choice. Psychol. Sci. 15, 534–539 (2004).

Weller, J. A., Levin, I. P., Shiv, B. & Bechara, A. Neural correlates of adaptive decision making for risky gains and losses. Psychol. Sci. 18, 958–964 (2007).

De Martino, B., Kumaran, D., Seymour, B. & Dolan, R. J. Frames, biases, and rational decision-making in the human brain. Science 313, 684–687 (2006).

Frederick, S., Loewenstein, G. & O'Donoghue, T. Time discounting and time preference: a critical review. J. Econ. Lit. 40, 351–401 (2002).

McClure, S. M., Laibson, D. I., Loewenstein, G. & Cohen, J. D. Separate neural systems value immediate and delayed monetary rewards. Science 306, 503–507 (2004). This fMRI study argued that competing goal-directed valuation systems play a part in decisions that involve choosing between immediate small monetary payoffs and larger but delayed payoffs.

McClure, S. M., Ericson, K. M., Laibson, D. I., Loewenstein, G. & Cohen, J. D. Time discounting for primary rewards. J. Neurosci. 27, 5796–5804 (2007).

Berns, G. S., Laibson, D. & Loewenstein, G. Intertemporal choice - toward an integrative framework. Trends Cogn. Sci. 11, 482–488 (2007).

Kable, J. W. & Glimcher, P. W. The neural correlates of subjective value during intertemporal choice. Nature Neurosci. 10, 1625–1633 (2007). This fMRI study argued that a single goal-directed valuation system plays a part in decisions that involve choosing between immediate small monetary payoffs and larger but delayed payoffs.

Read, D., Frederick, S., Orsel, B. & Rahman, J. Four score and seven years ago from now: the “date/delay” effect in temporal discounting. Manage. Sci. 51, 1326–1335 (1997).

Mischel, W. & Underwood, B. Instrumental ideation in delay of gratification. Child Dev. 45, 1083–1088 (1974).

Wilson, M. & Daly, M. Do pretty women inspire men to discount the future? Proc. Biol. Sci. 271 (Suppl 4), S177–S179 (2004).

Berns, G. S. et al. Neurobiological substrates of dread. Science 312, 754–758 (2006).

Loewenstein, G. Anticipation and the valuation of delayed consumption. Econ. J. 97, 666–684 (1987).

Stevens, J. R., Hallinan, E. V. & Hauser, M. D. The ecology and evolution of patience in two New World monkeys. Biol. Lett. 1, 223–226 (2005).

Herrnstein, R. J. Relative and absolute strength of response as a function of frequency of reinforcement. J. Exp. Anal. Behav. 4, 267–272 (1961).

Mazur, J. E. Estimation of indifference points with an adjusting-delay procedure. J. Exp. Anal. Behav. 49, 37–47 (1988).

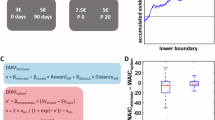

Corrado, G. S., Sugrue, L. P., Seung, H. S. & Newsome, W. T. Linear-nonlinear-poisson models of primate choice dynamics. J. Exp. Anal. Behav. 84, 581–617 (2005).

Newsome, W. T., Britten, K. H. & Movshon, J. A. Neuronal correlates of a perceptual decision. Nature 341, 52–54 (1989).

Kim, J. N. & Shadlen, M. N. Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nature Neurosci. 2, 176–185 (1999).

Gold, J. I. & Shadlen, M. N. The neural basis of decision making. Annu. Rev. Neurosci. 30, 535–574 (2007).

Gold, J. I. & Shadlen, M. N. Banburisms and the brain: decoding the relationship between sensory stimuli, decisions, and reward. Neuron 36, 299–308 (2002).

Gold, J. I. & Shadlen, M. N. Neural computations that underlie decisions about sensory stimuli. Trends Cogn. Sci. 5, 10–16 (2001).

Heekeren, H. R., Marrett, S. & Ungerleider, L. G. The neural systems that mediate human perceptual decision making. Nature Rev. Neurosci. 9, 467–479 (2008).

Daw, N. D., Niv, Y. & Dayan, P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nature Neurosci. 8, 1704–1711 (2005). This paper proposed a theoretical model of how the brain might assign control to the different goal and habitual systems.

Frank, M. J., Seeberger, L. C. & O'Reilly, R. C. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science 306, 1940–1943 (2004).

Frank, M. J. Hold your horses: a dynamic computational role for the subthalamic nucleus in decision making. Neural Netw. 19, 1120–1136 (2006).

de Araujo, I. E., Rolls, E. T., Kringelbach, M. L., McGlone, F. & Phillips, N. Taste-olfactory convergence, and the representation of the pleasantness of flavour, in the human brain. Eur. J. Neurosci. 18, 2059–2068 (2003).

de Araujo, I. E., Kringelbach, M. L., Rolls, E. T. & McGlone, F. Human cortical responses to water in the mouth, and the effects of thirst. J. Neurophysiol. 90, 1865–1876 (2003).

Anderson, A. K. et al. Dissociated neural representations of intensity and valence in human olfaction. Nature Neurosci. 6, 196–202 (2003).

de Araujo, I. E., Rolls, E. T., Velazco, M. I., Margot, C. & Cayeux, I. Cognitive modulation of olfactory processing. Neuron 46, 671–679 (2005).

McClure, S. M. et al. Neural correlates of behavioral preference for culturally familiar drinks. Neuron 44, 379–387 (2004).

Kringelbach, M. L., O'Doherty, J., Rolls, E. T. & Andrews, C. Activation of the human orbitofrontal cortex to a liquid food stimulus is correlated with its subjective pleasantness. Cereb. Cortex 13, 1064–1071 (2003).

Small, D. M. et al. Dissociation of neural representation of intensity and affective valuation in human gustation. Neuron 39, 701–711 (2003).

Blood, A. J. & Zatorre, R. J. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc. Natl Acad. Sci. USA 98, 11818–11823 (2001).

O'Doherty, J. et al. Sensory-specific satiety-related olfactory activation of the human orbitofrontal cortex. Neuroreport 11, 399–403 (2000).

Small, D. M., Zatorre, R. J., Dagher, A., Evans, A. C. & Jones-Gotman, M. Changes in brain activity related to eating chocolate: from pleasure to aversion. Brain 124, 1720–1733 (2001).

Breiter, H. C., Aharon, I., Kahneman, D., Dale, A. & Shizgal, P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron 30, 619–639 (2001).

Knutson, B., Fong, G. W., Adams, C. M., Varner, J. L. & Hommer, D. Dissociation of reward anticipation and outcome with event-related fMRI. Neuroreport 12, 3683–3687 (2001).

Zink, C. F., Pagnoni, G., Martin-Skurski, M. E., Chappelow, J. C. & Berns, G. S. Human striatal responses to monetary reward depend on saliency. Neuron 42, 509–517 (2004).

Peyron, R. et al. Haemodynamic brain responses to acute pain in humans: sensory and attentional networks. Brain 122, 1765–1780 (1999).

Davis, K. D., Taylor, S. J., Crawley, A. P., Wood, M. L. & Mikulis, D. J. Functional MRI of pain- and attention-related activations in the human cingulate cortex. J. Neurophysiol. 77, 3370–3380 (1997).

Seo, H. & Lee, D. Temporal filtering of reward signals in the dorsal anterior cingulate cortex during a mixed-strategy game. J. Neurosci. 27, 8366–8377 (2007).

Pecina, S., Smith, K. S. & Berridge, K. C. Hedonic hot spots in the brain. Neuroscientist 12, 500–511 (2006).

Berridge, K. C. & Robinson, T. E. Parsing reward. Trends Neurosci. 26, 507–513 (2003).

Berridge, K. C. Pleasures of the brain. Brain Cogn. 52, 106–128 (2003).

Plassmann, H., O'Doherty, J., Shiv, B. & Rangel, A. Marketing actions can modulate neural representations of experienced pleasantness. Proc. Natl Acad. Sci. USA 105, 1050–1054 (2008). This paper showed that the level of “experienced pleasantness” encoded in the medial OFC at the time of consuming a wine is modulated by subjects' beliefs about the price of the wine that they are drinking.

Montague, P. R., King-Casas, B. & Cohen, J. D. Imaging valuation models in human choice. Annu. Rev. Neurosci. 29, 417–448 (2006).

Tremblay, L., Hollerman, J. R. & Schultz, W. Modifications of reward expectation-related neuronal activity during learning in primate striatum. J. Neurophysiol. 80, 964–977 (1998).

Hollerman, J. R. & Schultz, W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nature Neurosci. 1, 304–309 (1998).

Schultz, W., Dayan, P. & Montague, P. R. A neural substrate of prediction and reward. Science 275, 1593–1599 (1997). This seminal paper proposed the connection between the prediction-error component of reinforcement-learning models and the behaviour of dopamine cells.

Mirenowicz, J. & Schultz, W. Importance of unpredictability for reward responses in primate dopamine neurons. J. Neurophysiol. 72, 1024–1027 (1994).

Schultz, W. Multiple dopamine functions at different time courses. Annu. Rev. Neurosci. 30, 259–288 (2007).

Schultz, W. Neural coding of basic reward terms of animal learning theory, game theory, microeconomics and behavioural ecology. Curr. Opin. Neurobiol. 14, 139–147 (2004).

Montague, P. R., Dayan, P. & Sejnowski, T. J. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J. Neurosci. 16, 1936–1947 (1996).

Yacubian, J. et al. Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J. Neurosci. 26, 9530–9537 (2006).

Tanaka, S. C. et al. Prediction of immediate and future rewards differentially recruits cortico-basal ganglia loops. Nature Neurosci. 7, 887–893 (2004).

Pagnoni, G., Zink, C. F., Montague, P. R. & Berns, G. S. Activity in human ventral striatum locked to errors of reward prediction. Nature Neurosci. 5, 97–98 (2002).

O'Doherty, J. P., Dayan, P., Friston, K., Critchley, H. & Dolan, R. J. Temporal difference models and reward-related learning in the human brain. Neuron 38, 329–337 (2003).

Knutson, B., Westdorp, A., Kaiser, E. & Hommer, D. fMRI visualization of brain activity during a monetary incentive delay task. Neuroimage 12, 20–27 (2000).

Delgado, M. R., Nystrom, L. E., Fissell, C., Noll, D. C. & Fiez, J. A. Tracking the hemodynamic responses to reward and punishment in the striatum. J. Neurophysiol. 84, 3072–3077 (2000).

Bayer, H. M. & Glimcher, P. W. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron 47, 129–141 (2005).

Bayer, H. M., Lau, B. & Glimcher, P. W. Statistics of midbrain dopamine neuron spike trains in the awake primate. J. Neurophysiol. 98, 1428–1439 (2007).

Seymour, B., Daw, N., Dayan, P., Singer, T. & Dolan, R. Differential encoding of losses and gains in the human striatum. J. Neurosci. 27, 4826–4831 (2007).

Daw, N. D., Kakade, S. & Dayan, P. Opponent interactions between serotonin and dopamine. Neural Netw. 15, 603–616 (2002).

Lohrenz, T., McCabe, K., Camerer, C. F. & Montague, P. R. Neural signature of fictive learning signals in a sequential investment task. Proc. Natl Acad. Sci. USA 104, 9493–9498 (2007).

Camerer, C. F. & Chong, J. K. Self-tuning experience weighted attraction learning in games. J. Econ. Theory 133, 177–198 (2007).

Olsson, A. & Phelps, E. A. Social learning of fear. Nature Neurosci. 10, 1095–1102 (2007).

Montague, P. R. et al. Dynamic gain control of dopamine delivery in freely moving animals. J. Neurosci. 24, 1754–1759 (2004).

Tversky, A. & Kahneman, D. Advances in prospect theory cumulative representation of uncertainty. J. Risk Uncertain. 5, 297–323 (1992).

Kahneman, D. & Tversky, A. Prospect Theory: an analysis of decision under risk. Econometrica 4, 263–291 (1979). This seminal paper proposed the PT model for goal-directed valuation in the presence of risk and provided some supporting evidence. It is one of the most cited papers in economics.

Chen, K., Lakshminarayanan, V. & Santos, L. How basic are behavioral biases? Evidence from capuchin-monkey trading behavior. J. Polit. Econ. 114, 517–537 (2006).

Camerer, C. F. in Choice, Values, and Frames (eds Kahneman, D. & Tversky, A.) (Cambridge Univ. Press, Cambridge, 2000).

Gilboa, I. & Schmeidler, D. Maxmin expected utility with non-unique prior. J. Math. Econ. 28, 141–153 (1989).

Ghirardato, P., Maccheroni, F. & Marinacci, M. Differentiating ambiguity and ambiguity attitude. J. Econ. Theory 118, 133–173 (2004).

Nestler, E. J. & Charney, D. S. The Neurobiology of Mental Illness (Oxford Univ. Press, Oxford, 2004).

Kauer, J. A. & Malenka, R. C. Synaptic plasticity and addiction. Nature Rev. Neurosci. 8, 844–858 (2007).

Hyman, S. E., Malenka, R. C. & Nestler, E. J. Neural mechanisms of addiction: the role of reward-related learning and memory. Annu. Rev. Neurosci. 29, 565–598 (2006).

Redish, A. D. & Johnson, A. A computational model of craving and obsession. Ann. NY Acad. Sci. 1104, 324–339 (2007).

Redish, A. D. Addiction as a computational process gone awry. Science 306, 1944–1947 (2004). This paper showed how addiction can be conceptualized as a disease of the habit valuation system, using a simple modification of the reinforcement-learning model.

Paulus, M. P. Decision-making dysfunctions in psychiatry—altered homeostatic processing? Science 318, 602–606 (2007).

Miller, E. K. & Cohen, J. D. An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202 (2001).

Hazy, T. E., Frank, M. J. & O'Reilly, R. C. Towards an executive without a homunculus: computational models of the prefrontal cortex/basal ganglia system. Philos. Trans. R. Soc. Lond. B Biol. Sci. 362, 1601–1613 (2007).

Niv, Y., Joel, D. & Dayan, P. A normative perspective on motivation. Trends Cogn. Sci. 10, 375–381 (2006).

Acknowledgements

Financial support from the National Science Foundation (SES-0134618, A.R.) and the Human Frontier Science Program (C.F.C.) is gratefully acknowledged. This work was also supported by a grant from the Gordon and Betty Moore Foundation to the Caltech Brain Imaging Center (A.R., C.F.C.). R.M. acknowledges support from the National Institute on Drug Abuse, the National Institute of Neurological Disorders and Stroke, The Kane Family Foundation, The Angel Williamson Imaging Center and The Dana Foundation.


Glossary

- Valence: The appetitive or aversive nature of a stimulus.

- Propositional logic system: A cognitive system that makes predictions about the world on the basis of known pieces of information.

- Statistical moments: Properties of a distribution, such as its mean and variance.

- Expected-utility theory: A theory stating that the value of a prospect (that is, of a random reward) equals the sum of the utilities of its potential outcomes, each weighted by its probability.

- Prospect theory: An alternative to expected-utility theory for evaluating prospects, in which outcomes are valued as gains or losses relative to a reference point, losses weigh more heavily than equivalent gains, and probabilities are transformed by a nonlinear weighting function.

- Dual-process models: A class of psychological models in which two processes with different properties compete to determine the outcome of a computation.

- Race-to-barrier diffusion process: A stochastic process that terminates when the variable of interest first reaches a threshold value.

- Credit-assignment problem: The problem of determining which of the actions taken in a complex environment deserve credit for the rewards that are obtained.
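To make the glossary's contrast between expected-utility theory and prospect theory concrete, the sketch below values the same hypothetical 50/50 gamble (win or lose 10 units) both ways. It is an illustration under stated assumptions, not a method from this Review: expected utility uses an assumed log utility over an assumed starting wealth of 100, and prospect theory uses the Tversky and Kahneman (1992) functional forms with their median parameter estimates (curvature 0.88, loss aversion 2.25, weighting parameters 0.61 for gains and 0.69 for losses). The function names are hypothetical.

```python
import math
from typing import List, Tuple

Gamble = List[Tuple[float, float]]  # (outcome relative to the status quo, probability)

# Tversky and Kahneman (1992) median estimates, used here as illustrative assumptions.
ALPHA, LAMBDA = 0.88, 2.25           # value-function curvature and loss aversion
GAMMA_GAIN, GAMMA_LOSS = 0.61, 0.69  # probability-weighting curvature


def expected_utility(gamble: Gamble, wealth: float) -> float:
    """Probability-weighted sum of utilities; log utility of final wealth is an assumption."""
    return sum(p * math.log(wealth + x) for x, p in gamble)


def pt_value(x: float) -> float:
    """Reference-dependent value: concave for gains, steeper (loss-averse) for losses."""
    return x ** ALPHA if x >= 0 else -LAMBDA * (-x) ** ALPHA


def weight(p: float, gamma: float) -> float:
    """Inverse-S-shaped probability weighting function."""
    return p ** gamma / (p ** gamma + (1 - p) ** gamma) ** (1 / gamma)


def prospect_value(gamble: Gamble) -> float:
    """Prospect-theory value of a gamble with at most one gain and at most one loss.

    With a single gain and a single loss, the cumulative decision weights reduce to
    weight(p) for each outcome; larger gambles would need rank-dependent weights.
    """
    if sum(x > 0 for x, _ in gamble) > 1 or sum(x < 0 for x, _ in gamble) > 1:
        raise ValueError("sketch handles at most one gain and one loss")
    total = 0.0
    for x, p in gamble:
        gamma = GAMMA_GAIN if x >= 0 else GAMMA_LOSS
        total += weight(p, gamma) * pt_value(x)
    return total


coin_flip = [(10.0, 0.5), (-10.0, 0.5)]  # hypothetical 50/50 gamble
wealth = 100.0                            # hypothetical starting wealth
print("EU change from playing:", expected_utility(coin_flip, wealth) - math.log(wealth))
print("PT value of playing:   ", prospect_value(coin_flip))
```

Under these assumptions both numbers come out negative, so both theories predict that the gamble is declined, but for different reasons: expected utility through the curvature of utility over total wealth, prospect theory mainly through loss aversion (`LAMBDA` > 1) around the reference point.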


Cite this article

Rangel, A., Camerer, C. & Montague, P. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci 9, 545–556 (2008). https://doi.org/10.1038/nrn2357


DOI: https://doi.org/10.1038/nrn2357
