Abstract

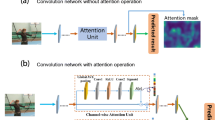

As an important research topic in computer vision, human action recognition is regarded as a crucial means of communication and interaction between humans and computers. To help computers automatically recognize human behaviors and accurately understand human intentions, this paper proposes a lightweight separable three-dimensional residual attention network (Sep-3D RAN) that extracts informative spatial-temporal representations for video-based human-computer interaction applications. Specifically, Sep-3D RAN is constructed by stacking multiple separable three-dimensional residual attention blocks. In each block, every standard three-dimensional convolution is approximated by a two-dimensional spatial convolution cascaded with a one-dimensional temporal convolution, and a dual attention mechanism is built by embedding a channel attention sub-module and a spatial attention sub-module sequentially, so that the network acquires more discriminative features and focuses on the most informative channels and spatial regions. Furthermore, a multi-stage training strategy is used to train Sep-3D RAN, which effectively alleviates over-fitting. Finally, experimental results demonstrate that Sep-3D RAN can surpass existing state-of-the-art methods.
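The block structure described above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the abstract gives no layer widths, kernel sizes, normalization, or attention details, so everything below is an assumption. A clip is a single channels-first array of shape (C, T, H, W) with no batch axis; the spatial convolution is 1×k×k and maps C_in to C_out channels, and the temporal convolution is t×1×1 and keeps C_out channels (a cascade without an intermediate width); the two attention sub-modules follow the CBAM recipe (average- and max-pooled descriptors with a shared MLP of reduction ratio r for channels, and a 7×7 convolution applied per frame for space); the shortcut is an identity with no batch normalization. All function and parameter names (`conv_spatial`, `sep3d_residual_attention_block`, `params`, and so on) are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_spatial(x, w):
    """1 x k x k convolution applied frame by frame ('same' padding, stride 1).

    x: (C_in, T, H, W), w: (C_out, C_in, k, k) with odd k -> (C_out, T, H, W).
    """
    p = w.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)))
    win = sliding_window_view(xp, w.shape[-2:], axis=(2, 3))  # (C_in, T, H, W, k, k)
    return np.einsum("cthwij,ocij->othw", win, w)


def conv_temporal(x, w):
    """k x 1 x 1 convolution along time ('same' padding, stride 1).

    x: (C_in, T, H, W), w: (C_out, C_in, k) with odd k -> (C_out, T, H, W).
    """
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (0, 0), (0, 0)))
    win = sliding_window_view(xp, k, axis=1)  # (C_in, T, H, W, k)
    return np.einsum("cthwk,ock->othw", win, w)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2):
    """Assumed CBAM-style channel attention: a shared MLP on avg- and max-pooled
    channel descriptors. w1: (C // r, C), w2: (C, C // r). Returns (scaled x, weights)."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)

    a = sigmoid(mlp(x.mean(axis=(1, 2, 3))) + mlp(x.max(axis=(1, 2, 3))))  # (C,)
    return x * a[:, None, None, None], a


def spatial_attention(x, w):
    """Assumed CBAM-style spatial attention: pool across channels, apply a
    per-frame k x k convolution, squash to (0, 1). w: (1, 2, k, k)."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, T, H, W)
    a = sigmoid(conv_spatial(pooled, w))  # (1, T, H, W), broadcast over channels
    return x * a, a


def sep3d_residual_attention_block(x, params):
    """Spatial conv -> ReLU -> temporal conv -> channel attention -> spatial
    attention, then identity shortcut and ReLU. Requires C_out == C_in."""
    y = np.maximum(conv_spatial(x, params["ws"]), 0.0)
    y = conv_temporal(y, params["wt"])
    y, _ = channel_attention(y, params["w1"], params["w2"])
    y, _ = spatial_attention(y, params["wsa"])
    return np.maximum(x + y, 0.0)


def conv_params(c_in, c_out, t, k):
    """Weight counts (no biases): full t x k x k kernel vs. 1 x k x k then t x 1 x 1."""
    full = c_in * c_out * t * k * k
    separable = c_in * c_out * k * k + c_out * c_out * t
    return full, separable


rng = np.random.default_rng(0)
C, T, H, W, r = 8, 4, 6, 6, 2
x = rng.standard_normal((C, T, H, W))
params = {
    "ws": 0.1 * rng.standard_normal((C, C, 3, 3)),
    "wt": 0.1 * rng.standard_normal((C, C, 3)),
    "w1": 0.1 * rng.standard_normal((C // r, C)),
    "w2": 0.1 * rng.standard_normal((C, C // r)),
    "wsa": 0.1 * rng.standard_normal((1, 2, 7, 7)),
}
out = sep3d_residual_attention_block(x, params)
print(out.shape)  # (8, 4, 6, 6)
print(conv_params(64, 64, 3, 3))  # (110592, 49152)
```

The `conv_params` count ignores biases and shows why the factorization is lighter: with 64 input and output channels and 3×3×3 kernels, a full 3D convolution needs 110,592 weights, whereas the spatial-plus-temporal cascade needs 36,864 + 12,288 = 49,152, about 44% as many.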

Acknowledgements

The authors are grateful to the anonymous reviewers and the editor for their valuable comments and suggestions. This work is supported by the National Natural Science Foundation of China (Grant Nos. 61702066 and 61903056), the Major Project of the Science and Technology Research Program of Chongqing Education Commission of China (Grant No. KJZDM201900601), the Chongqing Research Program of Basic Research and Frontier Technology (Grant Nos. cstc2021jcyj-msxmX0761 and cstc2018jcyjAX0154), the Chongqing Municipal Key Laboratory of Institutions of Higher Education (Grant No. cqupt-mct-201901), the Chongqing Key Laboratory of Mobile Communications Technology (Grant No. cqupt-mct-202002), and the Engineering Research Center of Mobile Communications, Ministry of Education (Grant No. cqupt-mct202006).

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Zhang, Z., Peng, Y., Gan, C. et al. Separable 3D residual attention network for human action recognition. Multimed Tools Appl 82, 5435–5453 (2023). https://doi.org/10.1007/s11042-022-12972-3

DOI: https://doi.org/10.1007/s11042-022-12972-3