Abstract

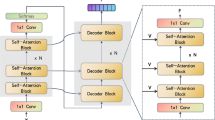

Video action segmentation classifies every frame of a long, untrimmed video. Because models must process long feature sequences that carry a large amount of information, they typically require many computing units and auxiliary training structures, and the redundant information in these features can interfere with classification. Adjusting the weight distribution to separate useful from useless information is an effective feature optimization mechanism: it adaptively calibrates complex features without adding much computation and improves frame-wise classification performance. This study therefore proposes a temporal and channel-combined attention block (TCB) for temporal sequences, which combines attention over the temporal and channel dimensions to assign weights to features. TCB contains two submodules: multi-scale temporal attention (MTA) and channel attention (CHA). MTA uses multilayer dilated convolution to capture multi-scale temporal relations and generate frame-wise attention weights, which lets it adapt to action instances of varying duration within a video. CHA captures the dependencies between channels and generates channel-wise attention weights that selectively increase the weights of important features. Combining the two modules yields a two-dimensional attention mechanism. We inserted TCB into boundary-aware cascade networks for testing. The results show that the attention mechanism improves action segmentation performance: on the three action segmentation datasets GTEA, 50Salads, and Breakfast, accuracy (Acc) increased by an average of 1.4%, the Edit score by an average of 2.1%, and the F1 score by an average of approximately 2.1%.
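To make the two-dimensional weighting concrete, the following is a minimal NumPy sketch of how frame-wise and channel-wise attention weights could be applied to a feature sequence of shape (channels, frames). It is not the authors' implementation. The abstract does not specify the layer sizes, dilation rates, or how the two attention maps are fused, so the squeeze-and-excitation-style bottleneck in `channel_attention`, the dilation rates 1, 2, 4, the multiplicative fusion in `tcb`, and all function and parameter names are assumptions made for illustration, with randomly initialized weights.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def dilated_conv1d(x, w, dilation):
    """Same-length 1D convolution over time.

    x: (C_in, T) features; w: (C_out, C_in, K) kernel with odd K.
    """
    c_out, _, k = w.shape
    pad = dilation * (k - 1) // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))
    t = x.shape[1]
    out = np.zeros((c_out, t))
    for j in range(k):
        # Tap j reads the padded input shifted by j * dilation frames.
        out += w[:, :, j] @ xp[:, j * dilation : j * dilation + t]
    return out


def channel_attention(x, w1, w2):
    """CHA sketch (assumed SE-style): one weight per channel, shape (C, 1)."""
    squeezed = x.mean(axis=1)                # (C,) average over time
    hidden = np.maximum(w1 @ squeezed, 0.0)  # (C // r,) ReLU bottleneck
    return sigmoid(w2 @ hidden)[:, None]     # (C, 1)


def multi_scale_temporal_attention(x, kernels, dilations, w_out):
    """MTA sketch: stacked dilated convolutions, one weight per frame, shape (1, T)."""
    h = x
    for w, d in zip(kernels, dilations):
        h = np.maximum(dilated_conv1d(h, w, d), 0.0)
    return sigmoid(w_out @ h)                # (1, C) @ (C, T) -> (1, T)


def tcb(x, params):
    """Assumed fusion: scale features by both attention maps via broadcasting."""
    cha = channel_attention(x, params["w1"], params["w2"])
    mta = multi_scale_temporal_attention(
        x, params["kernels"], params["dilations"], params["w_out"]
    )
    return x * cha * mta


# Hypothetical sizes: 8 channels, 50 frames, reduction ratio 4.
rng = np.random.default_rng(0)
C, T, r = 8, 50, 4
dilations = [1, 2, 4]
params = {
    "w1": rng.standard_normal((C // r, C)) * 0.1,
    "w2": rng.standard_normal((C, C // r)) * 0.1,
    "kernels": [rng.standard_normal((C, C, 3)) * 0.1 for _ in dilations],
    "dilations": dilations,
    "w_out": rng.standard_normal((1, C)) * 0.1,
}
x = rng.standard_normal((C, T))
y = tcb(x, params)
print(y.shape)  # (8, 50)
```

Because both attention maps pass through a sigmoid, every weight lies in (0, 1), so the block only rescales features and leaves the sequence shape unchanged. That shape-preserving property is what would allow such a block to be inserted into an existing temporal network.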

Cite this article

Yang, D., Cao, Z., Mao, L. et al. A temporal and channel-combined attention block for action segmentation. Appl Intell 53, 2738–2750 (2023). https://doi.org/10.1007/s10489-022-03569-2

DOI: https://doi.org/10.1007/s10489-022-03569-2