Abstract

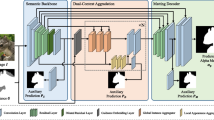

Because obtaining highly accurate or matting-level annotations is difficult and labor-intensive, only a limited number of such labels are publicly available. To tackle this challenge, we propose DiffuMatting, which inherits the strong ‘generate everything’ ability of diffusion models and adds the power of ‘matting anything’. DiffuMatting can (1) act as an anything-matting factory that produces highly accurate annotations, and (2) remain compatible with community LoRAs and various conditional control approaches, enabling community-friendly art design and controllable generation. Specifically, inspired by green-screen matting, we teach the diffusion model to paint on a fixed green-screen canvas. First, a large-scale green-screen dataset (Green100K) is collected as the training set for DiffuMatting. Second, a green background control loss is proposed to keep the drawing board a pure green color, so that foreground and background can be distinguished. Third, to give the synthesized object more edge detail, a detail-enhancement loss on the transition boundary is proposed to guide the generation of objects with more complicated edge structures. Finally, to generate the object and its matting annotation simultaneously, we build a matting head that removes the green color in the latent space of the VAE decoder. DiffuMatting has several potential applications (e.g., matting-data generation, community-friendly art design, and controllable generation). As a matting-data generator, DiffuMatting synthesizes general object and portrait matting sets, reducing the relative MSE by 15.4% in General Object Matting. The dataset is released on our project page at https://diffumatting.github.io.
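The green-canvas idea can be illustrated with the standard compositing equation I = αF + (1 − α)B, where B is fixed to pure green. The following is a minimal sketch under stated assumptions, not the paper's actual loss: the names `composite_on_green` and `green_background_penalty`, the RGB value chosen for green, and the (1 − α) weighting are all hypothetical choices made for illustration. It uses plain Python so that it has no dependencies.

```python
# Illustrative sketch only; the names and the exact penalty form are assumptions,
# not the formulation used by DiffuMatting.
GREEN = (0.0, 1.0, 0.0)  # assumed canvas colour, RGB in [0, 1]


def composite_on_green(fg_pixel, alpha):
    """Standard compositing I = alpha * F + (1 - alpha) * B with B = pure green."""
    return tuple(alpha * f + (1.0 - alpha) * g for f, g in zip(fg_pixel, GREEN))


def green_background_penalty(pixels, alphas, eps=1e-8):
    """Mean squared distance from pure green, weighted by background weight (1 - alpha)."""
    weighted_error = 0.0
    total_weight = 0.0
    for pixel, alpha in zip(pixels, alphas):
        weight = 1.0 - alpha  # 1 for pure background, 0 for pure foreground
        sq_dist = sum((c - g) ** 2 for c, g in zip(pixel, GREEN))
        weighted_error += weight * sq_dist
        total_weight += weight
    return weighted_error / (total_weight + eps)


# Toy "image" of four pixels: two foreground (alpha = 1), two background (alpha = 0).
foreground = [(0.8, 0.2, 0.1), (0.5, 0.5, 0.5), (0.3, 0.3, 0.9), (0.9, 0.9, 0.0)]
alphas = [1.0, 1.0, 0.0, 0.0]

clean_image = [composite_on_green(f, a) for f, a in zip(foreground, alphas)]
clean_penalty = green_background_penalty(clean_image, alphas)

# An all-red image violates the green canvas wherever alpha is 0.
red_image = [(1.0, 0.0, 0.0)] * 4
red_penalty = green_background_penalty(red_image, alphas)
```

In this toy setup the penalty is zero when every background pixel is exactly green and grows as background pixels drift away from green, which is the behaviour a background control term of this kind would aim for.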

X. Hu and X. Peng contributed equally to this work.

Acknowledgments

This work was supported in part by the National Key R&D Program of China (No. 2022ZD0118202), in part by the National Natural Science Foundation of China (No. 62072386), in part by the Yunnan Provincial Major Science and Technology Special Plan Project (No. 202402AD080001), in part by the Henan Province key research and development project (No. 231111212000), and in part by the Open Foundation of Henan Key Laboratory of General Aviation Technology (No. ZHKF-230212).

© 2025 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Hu, X. et al. (2025). DiffuMatting: Synthesizing Arbitrary Objects with Matting-Level Annotation. In: Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G. (eds) Computer Vision – ECCV 2024. ECCV 2024. Lecture Notes in Computer Science, vol 15126. Springer, Cham. https://doi.org/10.1007/978-3-031-73113-6_23

DOI: https://doi.org/10.1007/978-3-031-73113-6_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-73112-9

Online ISBN: 978-3-031-73113-6

eBook Packages: Computer Science (R0)