Abstract

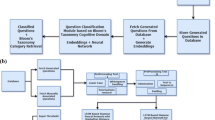

Prior work on standardized science exams requires support from a large text corpus, such as a targeted science corpus drawn from Wikipedia or SimpleWikipedia. However, retrieving knowledge from a large corpus is time-consuming, and questions with complex semantic representations may interfere with retrieval. Inspired by the dual process theory in cognitive science, we propose the MetaQA framework, in which system 1 is an intuitive meta-classifier and system 2 is a reasoning module. Specifically, our method is based on meta-learning and the large language model BERT, and it can efficiently solve science problems by learning from related example questions without relying on external knowledge bases. We evaluate our method on the AI2 Reasoning Challenge (ARC), and the experimental results show that the meta-classifier achieves considerable classification performance on emerging question types. The information provided by the meta-classifier significantly improves the accuracy of the reasoning module from 46.6% to 64.2%, which gives it a competitive advantage over retrieval-based QA methods.
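The abstract does not specify how the meta-classifier is trained or how its output reaches the reasoning module. Purely as an illustration of the two-system idea, the sketch below assumes a prototype-style few-shot classifier for system 1 (a nearest-centroid scheme in the spirit of metric-based meta-learning, swapped in here and not necessarily the authors' method) and a hypothetical `build_reasoner_input` hand-off that prepends the predicted question type to the multiple-choice input of a system-2 reader. The bag-of-words `embed` function stands in for a BERT encoder; every function name, label, and example question is an assumption.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for a BERT encoder: lower-cased bag-of-words counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def prototypes(support: dict[str, list[str]]) -> dict[str, Counter]:
    """One prototype per question type, built from a few labelled example questions.

    The summed vector points in the same direction as the mean,
    which is all that cosine similarity depends on.
    """
    protos = {}
    for label, questions in support.items():
        total = Counter()
        for question in questions:
            total.update(embed(question))
        protos[label] = total
    return protos


def classify(question: str, support: dict[str, list[str]]) -> str:
    """System 1 (sketch): pick the question type whose prototype is most similar."""
    protos = prototypes(support)
    query = embed(question)
    return max(protos, key=lambda label: cosine(query, protos[label]))


def build_reasoner_input(question: str, options: list[str], question_type: str) -> str:
    """Hypothetical hand-off to system 2: prepend the predicted type as a hint."""
    lettered = " ".join(f"({chr(ord('A') + i)}) {opt}" for i, opt in enumerate(options))
    return f"[{question_type}] {question} {lettered}"


# Hypothetical support set: a few labelled example questions per type.
support = {
    "life_science": ["Which organ in the human body pumps blood?",
                     "What do plants need to make food?"],
    "earth_science": ["What causes the seasons on Earth?",
                      "Which rock forms when lava cools?"],
}

question = "What do animals need to make energy?"
qtype = classify(question, support)
print(build_reasoner_input(question, ["food", "rocks"], qtype))
# prints "[life_science] What do animals need to make energy? (A) food (B) rocks"
```

Because the prototypes are rebuilt from whatever support set is passed in, a new question type can be handled by supplying a few labelled examples without retraining. This loosely mirrors the abstract's point about emerging question types, whereas the paper's classifier builds on BERT rather than word counts.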

References

Clark, P.: Elementary school science and math tests as a driver for AI: take the Aristo challenge! In: Twenty-Seventh IAAI Conference (2015)

Clark, P., Etzioni, O.: My computer is an honor student-but how intelligent is it? Standardized tests as a measure of AI. AI Mag. 37(1), 5–12 (2016)

Clark, P., et al.: From 'F' to 'A' on the NY Regents science exams: an overview of the Aristo project. arXiv preprint arXiv:1909.01958 (2019)

Clark, P., Harrison, P., Balasubramanian, N.: A study of the knowledge base requirements for passing an elementary science test. In: Proceedings of the 2013 Workshop on Automated Knowledge Base Construction, pp. 37–42. ACM (2013)

Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: BERT: pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

Dua, D., Wang, Y., Dasigi, P., Stanovsky, G., Singh, S., Gardner, M.: DROP: a reading comprehension benchmark requiring discrete reasoning over paragraphs. arXiv preprint arXiv:1903.00161 (2019)

Evans, J.S.B.T.: Dual-processing accounts of reasoning, judgment, and social cognition. Annu. Rev. Psychol. 59, 255–278 (2008)

Finn, C., Abbeel, P., Levine, S.: Model-agnostic meta-learning for fast adaptation of deep networks. In: Proceedings of the 34th International Conference on Machine Learning-Volume 70, pp. 1126–1135. JMLR.org (2017)

Godea, A., Nielsen, R.: Annotating educational questions for student response analysis. In: Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018) (2018)

Graves, A., Wayne, G., Danihelka, I.: Neural Turing machines. arXiv preprint arXiv:1410.5401 (2014)

Gu, J., Wang, Y., Chen, Y., Cho, K., Li, V.O.: Meta-learning for low-resource neural machine translation. arXiv preprint arXiv:1808.08437 (2018)

Hovy, E., Gerber, L., Hermjakob, U., Lin, C.Y., Ravichandran, D.: Toward semantics-based answer pinpointing. In: Proceedings of the First International Conference on Human Language Technology Research (2001)

Jansen, P., Sharp, R., Surdeanu, M., Clark, P.: Framing QA as building and ranking intersentence answer justifications. Comput. Linguist. 43(2), 407–449 (2017)

Jansen, P.A., Wainwright, E., Marmorstein, S., Morrison, C.T.: WorldTree: a corpus of explanation graphs for elementary science questions supporting multi-hop inference. arXiv preprint arXiv:1802.03052 (2018)

Khashabi, D., Khot, T., Sabharwal, A., Roth, D.: Question answering as global reasoning over semantic abstractions. In: Thirty-Second AAAI Conference on Artificial Intelligence (2018)

Lai, G., Xie, Q., Liu, H., Yang, Y., Hovy, E.: RACE: large-scale reading comprehension dataset from examinations. arXiv preprint arXiv:1704.04683 (2017)

Liu, Y., et al.: RoBERTa: a robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692 (2019)

Minsky, M.: Society of Mind. Simon and Schuster, New York (1988)

Mishra, N., Rohaninejad, M., Chen, X., Abbeel, P.: A simple neural attentive meta-learner (2017)

Munkhdalai, T., Yu, H.: Meta networks. In: Proceedings of the 34th International Conference on Machine Learning-Volume 70, pp. 2554–2563. JMLR.org (2017)

Musa, R., et al.: Answering science exam questions using query reformulation with background knowledge (2018)

Nichol, A., Schulman, J.: Reptile: a scalable metalearning algorithm, vol. 2. arXiv preprint arXiv:1803.02999 (2018)

Pan, X., Sun, K., Yu, D., Ji, H., Yu, D.: Improving question answering with external knowledge. arXiv preprint arXiv:1902.00993 (2019)

Peters, M.E., et al.: Deep contextualized word representations. arXiv preprint arXiv:1802.05365 (2018)

Qiu, Y., Frei, H.P.: Concept based query expansion. In: Proceedings of the 16th annual international ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 160–169. ACM (1993)

Ran, Q., Lin, Y., Li, P., Zhou, J., Liu, Z.: NumNet: machine reading comprehension with numerical reasoning. arXiv preprint arXiv:1910.06701 (2019)

Roberts, K., et al.: Automatically classifying question types for consumer health questions. In: AMIA Annual Symposium Proceedings, vol. 2014, p. 1018. American Medical Informatics Association (2014)

Santoro, A., Bartunov, S., Botvinick, M., Wierstra, D., Lillicrap, T.: One-shot learning with memory-augmented neural networks. arXiv preprint arXiv:1605.06065 (2016)

Sloman, S.A.: The empirical case for two systems of reasoning. Psychol. Bull. 119, 3 (1996)

Vig, J.: A multiscale visualization of attention in the transformer model. arXiv preprint arXiv:1906.05714 (2019)

Xu, D., et al.: Multi-class hierarchical question classification for multiple choice science exams. arXiv preprint arXiv:1908.05441 (2019)

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Zheng, X., Wang, P., Wang, Q., Shi, Z. (2021). Challenge Closed-Book Science Exam: A Meta-Learning Based Question Answering System. In: Uehara, H., Yamaguchi, T., Bai, Q. (eds) Knowledge Management and Acquisition for Intelligent Systems. PKAW 2021. Lecture Notes in Computer Science, vol 12280. Springer, Cham. https://doi.org/10.1007/978-3-030-69886-7_12

DOI: https://doi.org/10.1007/978-3-030-69886-7_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-69885-0

Online ISBN: 978-3-030-69886-7

eBook Packages: Computer Science, Computer Science (R0)