Abstract

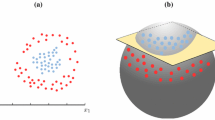

Training an SVM requires large amounts of memory and CPU time when the pattern set is large. To alleviate this computational burden, we propose a fast preprocessing algorithm that selects only the patterns near the decision boundary. Preliminary simulation results were promising: training time, including preprocessing, was reduced by up to two orders of magnitude without any loss in classification accuracy.
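The abstract does not spell out how patterns near the decision boundary are identified. As a rough illustration only, the sketch below assumes a simple k-nearest-neighbor heuristic: a pattern is kept when at least one of its k nearest neighbors carries a different class label. The function name `select_boundary_patterns`, the parameter `k`, and the brute-force neighbor search are all assumptions made for this example and should not be read as the authors' algorithm.

```python
import math


def select_boundary_patterns(X, y, k=5):
    """Return indices of patterns that have a differently labeled neighbor.

    Assumption (not taken from the paper): a pattern counts as "near the
    decision boundary" when any of its k nearest neighbors, the pattern
    itself excluded, belongs to another class.
    """
    selected = []
    for i, xi in enumerate(X):
        # Brute-force search: distance from pattern i to every other pattern.
        dists = sorted(
            (math.dist(xi, xj), j) for j, xj in enumerate(X) if j != i
        )
        neighbor_labels = [y[j] for _, j in dists[:k]]
        if any(label != y[i] for label in neighbor_labels):
            selected.append(i)
    return selected


# Toy data: ten points on a line, class 0 for x < 5 and class 1 otherwise.
X = [(float(v),) for v in range(10)]
y = [0] * 5 + [1] * 5
print(select_boundary_patterns(X, y, k=2))  # → [4, 5]
```

Only the two patterns that straddle the class change are kept, and an SVM would then be trained on that subset alone. The brute-force search costs O(n²) distance computations, so a practical preprocessing step would likely need a faster neighbor search to preserve the overall time savings.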

Copyright information

© 2003 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Shin, H., Cho, S. (2003). Fast Pattern Selection for Support Vector Classifiers. In: Whang, KY., Jeon, J., Shim, K., Srivastava, J. (eds) Advances in Knowledge Discovery and Data Mining. PAKDD 2003. Lecture Notes in Computer Science, vol 2637. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-36175-8_37

DOI: https://doi.org/10.1007/3-540-36175-8_37

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-04760-5

Online ISBN: 978-3-540-36175-6

eBook Packages: Springer Book Archive