Mainframe computer

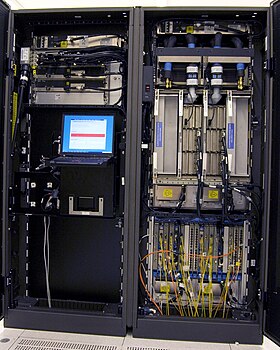

A mainframe computer, informally called a mainframe or big iron,[1] is a computer used primarily by large organizations for critical applications like bulk data processing for tasks such as censuses, industry and consumer statistics, enterprise resource planning, and large-scale transaction processing. A mainframe computer is large but not as large as a supercomputer and has more processing power than some other classes of computers, such as minicomputers, servers, workstations, and personal computers. Most large-scale computer-system architectures were established in the 1960s, but they continue to evolve. Mainframe computers are often used as servers.

The term mainframe was derived from the large cabinet, called a main frame,[2] that housed the central processing unit and main memory of early computers.[3][4] Later, the term mainframe was used to distinguish high-end commercial computers from less powerful machines.[5]

Design

Modern mainframe design is characterized less by raw computational speed and more by:

- Redundant internal engineering resulting in high reliability and security

- Extensive input-output ("I/O") facilities with the ability to offload to separate engines

- Strict backward compatibility with older software

- High hardware and computational utilization rates through virtualization to support massive throughput

- Hot swapping of hardware, such as processors and memory

The high stability and reliability of mainframes enable these machines to run uninterrupted for very long periods of time, with mean time between failures (MTBF) measured in decades.
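To put an MTBF "measured in decades" in perspective, steady-state availability is commonly estimated as MTBF / (MTBF + MTTR), where MTTR is the mean time to repair. The following is a minimal sketch of that arithmetic; the 20-year MTBF and 4-hour MTTR are illustrative assumptions, not figures for any particular machine, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the system is up."""
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


def expected_downtime_minutes_per_year(avail: float) -> float:
    """Average minutes of downtime per year implied by an availability figure."""
    return (1.0 - avail) * HOURS_PER_YEAR * 60


if __name__ == "__main__":
    # Assumed values for illustration only: 20-year MTBF, 4-hour repair time.
    a = availability(mtbf_hours=20 * HOURS_PER_YEAR, mttr_hours=4)
    print(f"availability: {a:.6%}")
    print(f"expected downtime: {expected_downtime_minutes_per_year(a):.1f} minutes/year")
```

The same formula shows why repair time matters as much as failure rate: halving MTTR removes roughly as much expected downtime as doubling MTBF.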

Mainframes have high availability, one of the primary reasons for their longevity, since they are typically used in applications where downtime would be costly or catastrophic. Reliability, availability and serviceability (RAS) is a defining characteristic of mainframe computers. Proper planning and implementation are required to realize these features. In addition, mainframes are more secure than other computer types: the NIST vulnerabilities database, US-CERT, rates traditional mainframes such as IBM Z (previously called z Systems, System z, and zSeries), Unisys Dorado, and Unisys Libra as among the most secure, with vulnerabilities in the low single digits, as compared to thousands for Windows, UNIX, and Linux.[6] Software upgrades usually require setting up the operating system or portions thereof, and are non-disruptive only when using virtualizing facilities such as IBM z/OS and Parallel Sysplex, or Unisys XPCL, which support workload sharing so that one system can take over another's application while it is being refreshed.

In the late 1950s, mainframes had only a rudimentary interactive interface (the console) and used sets of punched cards, paper tape, or magnetic tape to transfer data and programs. They operated in batch mode to support back office functions such as payroll and customer billing, most of which were based on repeated tape-based sorting and merging operations followed by line printing to preprinted continuous stationery. When interactive user terminals were introduced, they were used almost exclusively for applications (e.g. airline booking) rather than program development. However, in 1961 the first[7] academic, general-purpose timesharing system that supported software development,[8] CTSS, was released at MIT on an IBM 709, later 7090 and 7094.[9] Typewriter and Teletype devices were common control consoles for system operators through the early 1970s, although ultimately supplanted by keyboard/display devices.

By the early 1970s, many mainframes acquired interactive user terminals[NB 1] operating as timesharing computers, supporting hundreds of users simultaneously along with batch processing. Users gained access through keyboard/typewriter terminals and later character-mode text[NB 2] terminal CRT displays with integral keyboards, or finally from personal computers equipped with terminal emulation software. By the 1980s, many mainframes supported general purpose graphic display terminals, and terminal emulation, but not graphical user interfaces. This form of end-user computing became obsolete in the 1990s due to the advent of personal computers provided with GUIs. After 2000, modern mainframes partially or entirely phased out classic "green screen" and color display terminal access for end-users in favor of Web-style user interfaces.[citation needed]

The infrastructure requirements were drastically reduced during the mid-1990s, when CMOS mainframe designs replaced the older bipolar technology. IBM claimed that its newer mainframes reduced data center energy costs for power and cooling, and reduced physical space requirements compared to server farms.[10]

Characteristics

Modern mainframes can run multiple different instances of operating systems at the same time. This technique of virtual machines allows applications to run as if they were on physically distinct computers. In this role, a single mainframe can replace higher-functioning hardware services available to conventional servers. While mainframes pioneered this capability, virtualization is now available on most families of computer systems, though not always to the same degree or level of sophistication.[11]

Mainframes can add or hot swap system capacity without disrupting system function, with specificity and granularity to a level of sophistication not usually available with most server solutions.[citation needed] Modern mainframes, notably the IBM Z servers, offer two levels of virtualization: logical partitions (LPARs, via the PR/SM facility) and virtual machines (via the z/VM operating system). Many mainframe customers run two machines: one in their primary data center and one in their backup data center—fully active, partially active, or on standby—in case there is a catastrophe affecting the first building. Test, development, training, and production workload for applications and databases can run on a single machine, except for extremely large demands where the capacity of one machine might be limiting. Such a two-mainframe installation can support continuous business service, avoiding both planned and unplanned outages. In practice, many customers use multiple mainframes linked either by Parallel Sysplex and shared DASD (in IBM's case),[citation needed] or with shared, geographically dispersed storage provided by EMC or Hitachi.

Mainframes are designed to handle very high volume input and output (I/O) and emphasize throughput computing. Since the late 1950s,[NB 3] mainframe designs have included subsidiary hardware[NB 4] (called channels or peripheral processors) which manage the I/O devices, leaving the CPU free to deal only with high-speed memory. It is common in mainframe shops to deal with massive databases and files. Gigabyte to terabyte-size record files are not unusual.[12] Compared to a typical PC, mainframes commonly have hundreds to thousands of times as much data storage online,[13] and can access it reasonably quickly. Other server families also offload I/O processing and emphasize throughput computing.

Mainframe return on investment (ROI), like that of any other computing platform, depends on its ability to scale, support mixed workloads, reduce labor costs, deliver uninterrupted service for critical business applications, and several other risk-adjusted cost factors.

Mainframes also have execution integrity characteristics for fault tolerant computing. For example, z900, z990, System z9, and System z10 servers effectively execute result-oriented instructions twice, compare results, arbitrate between any differences (through instruction retry and failure isolation), then shift workloads "in flight" to functioning processors, including spares, without any impact to operating systems, applications, or users. This hardware-level feature, also found in HP's NonStop systems, is known as lock-stepping, because both processors take their "steps" (i.e. instructions) together. Not all applications absolutely need the assured integrity that these systems provide, but many do, such as financial transaction processing.[citation needed]
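The execute-twice, compare, retry, and fail-over sequence described above can be illustrated with a toy software model. This is only a sketch of the idea, not how the hardware is implemented: the `Processor` class, the `faulty` flag, and the `execute` helper are all hypothetical names introduced for illustration.

```python
import operator
from typing import Callable, List, Tuple

Op = Callable[[int, int], int]


class Processor:
    """Toy processor with two execution units that run every operation in lock-step."""

    def __init__(self, name: str, faulty: bool = False) -> None:
        self.name = name
        # Hypothetical flag: simulates a permanent fault in the second unit.
        self.faulty = faulty

    def run_checked(self, op: Op, a: int, b: int) -> Tuple[bool, int]:
        """Execute op on both units and report whether their results agree."""
        first = op(a, b)
        second = op(a, b)
        if self.faulty:
            second ^= 1  # flip the low bit to mimic a hardware error
        return first == second, first


def execute(op: Op, a: int, b: int, processors: List[Processor],
            max_retries: int = 1) -> Tuple[int, str]:
    """Run op with result comparison, retrying and then moving to the next processor."""
    for cpu in processors:
        for _attempt in range(1 + max_retries):
            agreed, result = cpu.run_checked(op, a, b)
            if agreed:
                return result, cpu.name
        # Results still disagree after retrying: isolate this processor
        # and shift the work to the next one in the list (a spare).
    raise RuntimeError("no healthy processor available")


if __name__ == "__main__":
    pool = [Processor("cp0", faulty=True), Processor("spare0")]
    value, ran_on = execute(operator.add, 2, 3, pool)
    print(f"result {value} produced on {ran_on}")
```

The caller sees only the final result and never the mismatch on the first processor, which mirrors the claim that recovery happens without any impact on operating systems, applications, or users.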

Current market

IBM, with the IBM Z series, continues to be a major manufacturer in the mainframe market. In 2000, Hitachi co-developed the zSeries z900 with IBM to share expenses, and the latest Hitachi AP10000 models are made by IBM. Unisys manufactures ClearPath Libra mainframes, based on earlier Burroughs MCP products, and ClearPath Dorado mainframes, based on Sperry Univac OS 1100 product lines. Hewlett Packard Enterprise sells its unique NonStop systems, which it acquired with Tandem Computers and which some analysts classify as mainframes. Groupe Bull's GCOS, Stratus OpenVOS, Fujitsu (formerly Siemens) BS2000, and Fujitsu-ICL VME mainframes are still available in Europe, and Fujitsu (formerly Amdahl) GS21 mainframes globally. NEC with ACOS and Hitachi with AP10000-VOS3[14] still maintain mainframe businesses in the Japanese market.

The amount of vendor investment in mainframe development varies with market share. Fujitsu and Hitachi both continue to use custom S/390-compatible processors, as well as other CPUs (including POWER and Xeon) for lower-end systems. Bull uses a mixture of Itanium and Xeon processors. NEC uses Xeon processors for its low-end ACOS-2 line, but develops the custom NOAH-6 processor for its high-end ACOS-4 series. IBM also develops custom processors in-house, such as the Telum. Unisys produces code-compatible mainframe systems that range from laptops to cabinet-sized mainframes and that use homegrown CPUs as well as Xeon processors. Furthermore, there is a market for software applications to manage the performance of mainframe implementations. In addition to IBM, significant market competitors include BMC[15] and Precisely;[16] former competitors include Compuware[17][18] and CA Technologies.[19] Since the 2010s, cloud computing has been a less expensive, more scalable alternative.[citation needed]

History

Several manufacturers and their successors produced mainframe computers from the 1950s until the early 21st century, with gradually decreasing numbers and a gradual transition to simulation on Intel chips rather than proprietary hardware. The US group of manufacturers was first known as "IBM and the Seven Dwarfs":[20]: p.83 usually Burroughs, UNIVAC, NCR, Control Data, Honeywell, General Electric and RCA, although some lists varied. Later, with the departure of General Electric and RCA, it was referred to as IBM and the BUNCH. IBM's dominance grew out of their 700/7000 series and, later, the development of the 360 series mainframes. The latter architecture has continued to evolve into their current zSeries mainframes which, along with the then Burroughs and Sperry (now Unisys) MCP-based and OS1100 mainframes, are among the few mainframe architectures still extant that can trace their roots to this early period. While IBM's zSeries can still run 24-bit System/360 code, the 64-bit IBM Z CMOS servers have nothing physically in common with the older systems. Notable manufacturers outside the US were Siemens and Telefunken in Germany, ICL in the United Kingdom, Olivetti in Italy, and Fujitsu, Hitachi, Oki, and NEC in Japan. The Soviet Union and Warsaw Pact countries manufactured close copies of IBM mainframes during the Cold War;[citation needed] the BESM series and Strela are examples of independently designed Soviet computers. Elwro in Poland was another Eastern Bloc manufacturer, producing the ODRA, R-32 and R-34 mainframes.

Shrinking demand and tough competition started a shakeout in the market in the early 1970s—RCA sold out to UNIVAC and GE sold its business to Honeywell; between 1986 and 1990 Honeywell was bought out by Bull; UNIVAC became a division of Sperry, which later merged with Burroughs to form Unisys Corporation in 1986.

In 1984 estimated sales of desktop computers ($11.6 billion) exceeded mainframe computers ($11.4 billion) for the first time. IBM received the vast majority of mainframe revenue.[21] During the 1980s, minicomputer-based systems grew more sophisticated and were able to displace the lower end of the mainframes. These computers, sometimes called departmental computers, were typified by the Digital Equipment Corporation VAX series.

In 1991, AT&T Corporation briefly owned NCR. During the same period, companies found that servers based on microcomputer designs could be deployed at a fraction of the acquisition price and offer local users much greater control over their own systems given the IT policies and practices at that time. Terminals used for interacting with mainframe systems were gradually replaced by personal computers. Consequently, demand plummeted and new mainframe installations were restricted mainly to financial services and government. In the early 1990s, there was a rough consensus among industry analysts that the mainframe was a dying market as mainframe platforms were increasingly replaced by personal computer networks. InfoWorld's Stewart Alsop infamously predicted that the last mainframe would be unplugged in 1996; in 1993, he cited Cheryl Currid, a computer industry analyst, as saying that the last mainframe "will stop working on December 31, 1999",[22] a reference to the anticipated Year 2000 problem (Y2K).

That trend started to turn around in the late 1990s as corporations found new uses for their existing mainframes and as the price of data networking collapsed in most parts of the world, encouraging trends toward more centralized computing. The growth of e-business also dramatically increased the number of back-end transactions processed by mainframe software as well as the size and throughput of databases. Batch processing, such as billing, became even more important (and larger) with the growth of e-business, and mainframes are particularly adept at large-scale batch computing. Another factor currently increasing mainframe use is the development of the Linux operating system, which arrived on IBM mainframe systems in 1999. Linux allows users to take advantage of open source software combined with mainframe hardware RAS. Rapid expansion and development in emerging markets, particularly the People's Republic of China, is also spurring major mainframe investments to solve exceptionally difficult computing problems, e.g. providing unified, extremely high volume online transaction processing databases for 1 billion consumers across multiple industries (banking, insurance, credit reporting, government services, etc.). In late 2000, IBM introduced 64-bit z/Architecture, acquired numerous software companies such as Cognos, and introduced those software products to the mainframe. IBM's quarterly and annual reports in the 2000s usually reported increasing mainframe revenues and capacity shipments. However, IBM's mainframe hardware business has not been immune to the recent overall downturn in the server hardware market or to model cycle effects. For example, in the 4th quarter of 2009, IBM's System z hardware revenues decreased by 27% year over year. But MIPS (millions of instructions per second) shipments increased 4% per year over the past two years.[23] Alsop had himself photographed in 2000, symbolically eating his own words ("death to the mainframe").[24]

In 2012, NASA powered down its last mainframe, an IBM System z9.[25] However, IBM's successor to the z9, the z10, led a New York Times reporter to state four years earlier that "mainframe technology—hardware, software and services—remains a large and lucrative business for I.B.M., and mainframes are still the back-office engines behind the world's financial markets and much of global commerce".[26] As of 2010, while mainframe technology represented less than 3% of IBM's revenues, it "continue[d] to play an outsized role in Big Blue's results".[27]

IBM has continued to launch new generations of mainframes: the IBM z13 in 2015,[28] the z14 in 2017,[29][30] the z15 in 2019,[31] and the z16 in 2022, the latter featuring among other things an "integrated on-chip AI accelerator" and the new Telum microprocessor.[32]

Differences from supercomputers

A supercomputer is a computer at the leading edge of data processing capability, with respect to calculation speed. Supercomputers are used for scientific and engineering problems (high-performance computing) which crunch numbers and data,[33] while mainframes focus on transaction processing. The differences are:

- Mainframes are built to be reliable for transaction processing (measured by TPC-metrics; not used or helpful for most supercomputing applications) as it is commonly understood in the business world: the commercial exchange of goods, services, or money.[citation needed] A typical transaction, as defined by the Transaction Processing Performance Council,[34] updates a database system for inventory control (goods), airline reservations (services), or banking (money) by adding a record. A transaction may refer to a set of operations including disk read/writes, operating system calls, or some form of data transfer from one subsystem to another which is not measured by the processing speed of the CPU. Transaction processing is not exclusive to mainframes but is also used by microprocessor-based servers and online networks.

- Supercomputer performance is measured in floating point operations per second (FLOPS)[35] or in traversed edges per second (TEPS),[36] metrics that are not very meaningful for mainframe applications, while mainframes are sometimes measured in millions of instructions per second (MIPS), although the definition depends on the instruction mix measured.[37] Examples of integer operations measured by MIPS include adding numbers together, checking values, or moving data around in memory; moving data within memory helps mainframe workloads only indirectly, whereas moving information to and from storage (I/O) matters most. Floating point operations are mostly addition, subtraction, and multiplication with enough digits of precision to model continuous phenomena such as weather prediction and nuclear simulations; supercomputers use binary floating point, measured by FLOPS, whereas the only recently standardized decimal floating point, which is not used in supercomputers, is appropriate for monetary values such as those handled by mainframe applications. In terms of computational speed, supercomputers are more powerful.[38]
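The distinction between binary and decimal floating point in the last point can be seen in a few lines. The sketch below uses Python's standard `decimal` module as a software stand-in for decimal arithmetic; the function names and the prices are illustrative assumptions.

```python
from decimal import Decimal


def total_binary(price: float, quantity: int) -> float:
    """Sum a price repeatedly using binary floating point."""
    total = 0.0
    for _ in range(quantity):
        total += price
    return total


def total_decimal(price: str, quantity: int) -> Decimal:
    """Sum a price repeatedly using decimal arithmetic (exact for decimal fractions)."""
    total = Decimal("0")
    for _ in range(quantity):
        total += Decimal(price)
    return total


if __name__ == "__main__":
    # Ten items at 0.10 each should cost exactly 1.00.
    print("binary :", repr(total_binary(0.10, 10)))
    print("decimal:", total_decimal("0.10", 10))
    print("binary sum equals 1.0? ", total_binary(0.10, 10) == 1.0)
    print("decimal sum equals 1.00?", total_decimal("0.10", 10) == Decimal("1.00"))
```

Values such as 0.10 have no exact binary representation, so small errors accumulate in the binary version. That is acceptable when modeling physical phenomena but not when balancing accounts, which is why decimal arithmetic suits monetary workloads.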

Mainframes and supercomputers cannot always be clearly distinguished; up until the early 1990s, many supercomputers were based on a mainframe architecture with supercomputing extensions. An example of such a system is the HITAC S-3800, which was instruction-set compatible with IBM System/370 mainframes, and could run the Hitachi VOS3 operating system (a fork of IBM MVS).[39] The S-3800 therefore can be seen as being both simultaneously a supercomputer and also an IBM-compatible mainframe.

In 2007,[40] an amalgamation of the different technologies and architectures for supercomputers and mainframes led to the so-called gameframe.

See also

- Channel I/O

- Cloud computing

- Commodity computing

- Computer types

- Failover

- Gameframe

- List of transistorized computers

- Master the Mainframe Contest

Notes

References

- Vance, Ashlee (July 20, 2005). "IBM Preps Big Iron Fiesta". The Register. Retrieved October 2, 2020.

- Edwin D. Reilly (2004). Concise Encyclopedia of Computer Science (illustrated ed.). John Wiley & Sons. p. 482. ISBN 978-0-470-09095-4.

- "mainframe, n". Oxford English Dictionary (on-line ed.). Archived from the original on August 7, 2021.

- Ebbers, Mike; Kettner, John; O’Brien, Wayne; Ogden, Bill (March 2011). Introduction to the New Mainframe: z/OS Basics (PDF) (Third ed.). IBM. p. 11. Retrieved March 30, 2023.

- Beach, Thomas E. (August 29, 2016). "Types of Computers". Computer Concepts and Terminology. Los Alamos: University of New Mexico. Archived from the original on August 3, 2020. Retrieved October 2, 2020.

- "National Vulnerability Database". Archived from the original on September 25, 2011. Retrieved September 20, 2011.

- Singh, Jai P.; Morgan, Robert P. (October 1971). Educational Computer Utilization and Computer Communications (PDF) (Report). St. Louis, MO: Washington University. p. 13. National Aeronautics and Space Administration Grant No. Y/NGL-26-008-054. Retrieved March 8, 2022.

Much of the early development in the time-sharing field took place on university campuses.8 Notable examples are the CTSS (Compatible Time-Sharing System) at MIT, which was the first general purpose time-sharing system...

- Crisman, Patricia A., ed. (December 31, 1969). "The Compatible Time-Sharing System, A Programmer's Guide" (PDF). The M.I.T Computation Center. Retrieved March 10, 2022.

- Walden, David; Van Vleck, Tom, eds. (2011). "Compatible Time-Sharing System (1961-1973): Fiftieth Anniversary Commemorative Overview" (PDF). IEEE Computer Society. Retrieved February 20, 2022.

- "Get the facts on IBM vs the Competition- The facts about IBM System z "mainframe"". IBM. Archived from the original on February 11, 2009. Retrieved December 28, 2009.

- "Emulation or Virtualization?". 22 June 2009.

- "Largest Commercial Database in Winter Corp. TopTen Survey Tops One Hundred Terabytes" (Press release). Archived from the original on 2008-05-13. Retrieved 2008-05-16.

- Phillips, Michael R. (May 10, 2006). Improvements in Mainframe Computer Storage Management Practices and Reporting Are Needed to Promote Effective and Efficient Utilization of Disk Resources (Report). Archived from the original on 2009-01-19.

Between October 2001 and September 2005, the IRS' mainframe computer disk storage capacity increased from 79 terabytes to 168.5 terabytes.

- Hitachi AP10000 - VOS3

- "BMC AMI Ops Automation for Data Centers". BMC Software. Retrieved 13 March 2023.

- "IBM mainframe solutions | Optimizing your mainframe". Retrieved 2022-09-23.

- "Mainframe Modernization". Retrieved 26 October 2012.

- "Automated Mainframe Testing & Auditing". Retrieved 26 October 2012.

- "CA Technologies".

- Bergin, Thomas J, ed. (2000). 50 Years of Army Computing: From ENIAC to MSRC. DIANE Publishing. ISBN 978-0-9702316-1-1.

- Sanger, David E. (1984-02-05). "Bailing Out of the Mainframe Industry". The New York Times. p. Section 3, Page 1. ISSN 0362-4331. Retrieved 2020-03-02.

- Alsop, Stewart (Mar 8, 1993). "IBM still has brains to be player in client/server platforms". InfoWorld. Retrieved Dec 26, 2013.

- "IBM 4Q2009 Financial Report: CFO's Prepared Remarks" (PDF). IBM. January 19, 2010.

- "Stewart Alsop eating his words". Computer History Museum. Retrieved Dec 26, 2013.

- Cureton, Linda (11 February 2012). The End of the Mainframe Era at NASA. NASA. Retrieved 31 January 2014.

- Lohr, Steve (March 23, 2008). "Why Old Technologies Are Still Kicking". The New York Times. Retrieved Dec 25, 2013.

- Ante, Spencer E. (July 22, 2010). "IBM Calculates New Mainframes Into Its Future Sales Growth". The Wall Street Journal. Retrieved Dec 25, 2013.

- Press, Gil. "From IBM Mainframe Users Group To Apple 'Welcome IBM. Seriously': This Week In Tech History". Forbes. Retrieved 2016-10-07.

- "IBM Mainframe Ushers in New Era of Data Protection" (Press release). Archived from the original on July 19, 2017.

- "IBM unveils new mainframe capable of running more than 12 billion encrypted transactions a day". CNBC.

- "IBM Unveils z15 With Industry-First Data Privacy Capabilities".

- "Announcing IBM z16: Real-time AI for Transaction Processing at Scale and Industry's First Quantum-Safe System". IBM Newsroom. Retrieved 2022-04-13.

- High-Performance Graph Analysis. Retrieved on February 15, 2012.

- Transaction Processing Performance Council. Retrieved on December 25, 2009.

- The "Top 500" list of High Performance Computing (HPC) systems. Retrieved on July 19, 2016.

- The Graph 500. Archived 2011-12-27 at the Wayback Machine. Retrieved on February 19, 2012.

- Resource consumption for billing and performance purposes is measured in units of a million service units (MSUs), but the definition of MSU varies from processor to processor so that MSUs are useless for comparing processor performance.

- World's Top Supercomputer. Retrieved on December 25, 2009.

- Ishii, Kouichi; Abe, Hitoshi; Kawabe, Shun; Hirai, Michihiro (1992), Meuer, Hans-Werner (ed.), "An Overview of the HITACHI S-3800 Series Supercomputer", Supercomputer ’92, Springer Berlin Heidelberg, pp. 65–81, doi:10.1007/978-3-642-77661-8_5, ISBN 9783540557098

- "Cell Broadband Engine Project Aims to Supercharge IBM Mainframe for Virtual Worlds" (Press release). 26 April 2007. Archived from the original on April 29, 2007.

External links

- IBM z Systems mainframes

- IBM Mainframe Resources & Support Forum

- Univac 9400, a mainframe from the 1960s, still in use in a German computer museum

- Lectures in the History of Computing: Mainframes (archived copy from the Internet Archive)